您现在的位置是:首页 >技术交流 >Hadoop HA高可用搭建教程网站首页技术交流

Hadoop HA高可用搭建教程

简介Hadoop HA高可用搭建教程

-

安装jdk

-

安装zookeeper

-

启动zookeeper

-

Hadoop HA搭建

-

解压Hadoop && 环境

[root@master /]# tar -zxvf /opt/software/hadoop-3.1.3.tar.gz -C /opt/module/ [root@master /]# vim /etc/profile #hadoop export HADOOP_HOME=/opt/module/hadoop export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin -

配置 hadoop-env.sh 文件

[root@master hadoop]# vim hadoop-env.sh export JAVA_HOME=/opt/module/java export HADOOP_PERFIX=/opt/module/hadoop export HADOOP_OPTS="-Djava.library.path=$HADOOP_PERFIX/lib:$HADOOP_PERFIX/lib/native" export HDFS_NAMENODE_USER=root export HDFS_DATANODE_USER=root export HDFS_SECONDARYNAMENODE_USER=root export HDFS_ZKFC_USER=root export HDFS_JOURNALNODE_USER=root export YARN_RESOURCEMANAGER_USER=root export YARN_NODEMANAGER_USER=root -

配置 core-site.xml 文件

[root@master hadoop]# vim core-site.xml <property> <name>fs.defaultFS</name> <value>hdfs://ns</value> </property> <property> <name>hadoop.tmp.dir</name> <value>/opt/module/hadoop/tmp</value> </property> <property> <name>ha.zookeeper.quorum</name> <value>master:2181,slave1:2181,slave2:2181</value> </property> <property> <name>ipc.client.connect.max.retries</name> <value>100</value> </property> <property> <name>ipc.client.connect.retry.interval</name> <value>10000</value> </property> -

配置 mapred-site.xml 文件

[root@master hadoop]# vim mapred-site.xml <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> -

配置 hdfs-site.xml 文件

[root@master hadoop]# vim hdfs-site.xml <property> <name>dfs.nameservices</name> <value>ns</value> </property> <property> <name>dfs.ha.namenodes.ns</name> <value>nn1,nn2</value> </property> <property> <name>dfs.namenode.rpc-address.ns.nn1</name> <value>master:9000</value> </property> <property> <name>dfs.namenode.http-address.ns.nn1</name> <value>master:9870</value> </property> <property> <name>dfs.namenode.rpc-address.ns.nn2</name> <value>slave1:9000</value> </property> <property> <name>dfs.namenode.http-address.ns.nn2</name> <value>slave1:9870</value> </property> <property> <name>dfs.namenode.shared.edits.dir</name> <value>qjournal://master:8485;slave1:8485;slave2:8485/ns</value> </property> <property> <name>dfs.ha.automatic-failover.enabled</name> <value>true</value> </property> <property> <name>dfs.journalnode.edits.dir</name> <value>/opt/module/hadoop/journal</value> </property> <property> <name>dfs.client.failover.proxy.provider.ns</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> </property> <property> <name>dfs.ha.fencing.methods</name> <value>shell(/bin/true)</value> </property> <property> <name>dfs.ha.fencing.ssh.private-key-files</name> <value>/root/.ssh/id_rsa</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>/opt/module/hadoop/dfs/name</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>/opt/module/hadoop/dfs/data</value> </property> <property> <name>dfs.replication</name> <value>3</value> </property> -

配置 yarn-site.xml 文件

[root@master hadoop]# vim yarn-site.xml <property> <name>yarn.resourcemanager.ha.enabled</name> <value>true</value> </property> <property> <name>yarn.resourcemanager.ha.rm-ids</name> <value>rm1,rm2</value> </property> <property> <name>yarn.resourcemanager.hostname.rm1</name> <value>master</value> </property> <property> <name>yarn.resourcemanager.hostname.rm2</name> <value>slave1</value> </property> <property> <name>yarn.resourcemanager.recovery.enabled</name> <value>true</value> </property> <property> <name>yarn.resourcemanager.store.class</name> <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value> </property> <property> <name>yarn.resourcemanager.zk-address</name> <value>master:2181,slave1:2181,slave2:2181</value> </property> <property> <name>yarn.resourcemanager.cluster-id</name> <value>yarn-ha</value> </property> <property> <name>yarn.resourcemanager.hostname</name> <value>master</value> </property> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> -

配置 workers 文件

[root@master hadoop]# vim workers master slave1 slave2 -

分发Hadoop && 环境

[root@master hadoop]# scp -r /opt/module/hadoop/ slave1:/opt/module/ [root@master hadoop]# scp -r /opt/module/hadoop/ slave2vim:/opt/module/

-

Hadoop HA启动

-

启动JournalNode服务

root@master hadoop]# hadoop-daemons.sh start journalnode [root@master hadoop]# jps 1128 Jps 634 JournalNode 430 QuorumPeerMain -

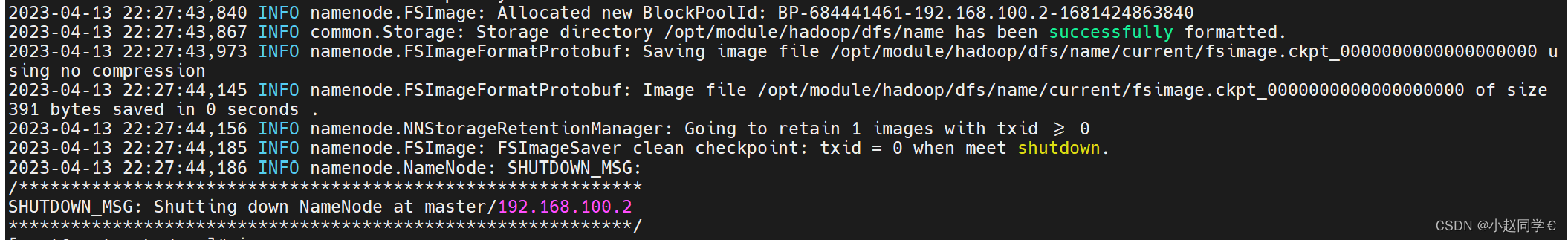

格式化 NameNode (active)节点 && 启动 namenode(active)

[root@master hadoop]# hdfs namenode -format [root@master hadoop]# hadoop-daemon.sh start namenode

-

格式化 NameNode(standby)节点 && 启动namenode(standby)

[root@slave1 /]# hdfs namenode -bootstrapStandby [root@slave1 /]# hadoop-daemon.sh start namenode

-

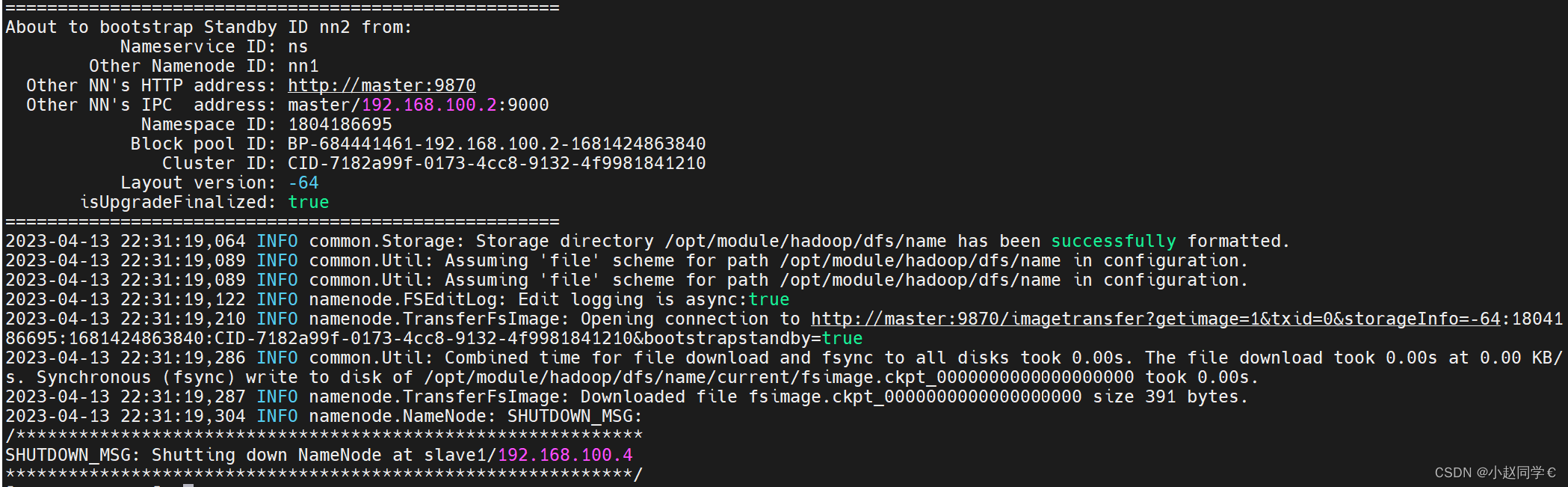

格式化 ZKFC

[root@master hadoop]# hdfs zkfc -formatZK

-

启动 HA 集群

//启动hdfs [root@master hadoop]# start-dfs.sh //启动yarn [root@master hadoop]# start-yarn.sh //作为一个守护进程启动独立的Web应用程序代理服务器 [root@master hadoop]# yarn-daemon.sh start proxyserver //启动MapReduce的历史服务器 [root@master hadoop]# mr-jobhistory-daemon.sh start historyserver -

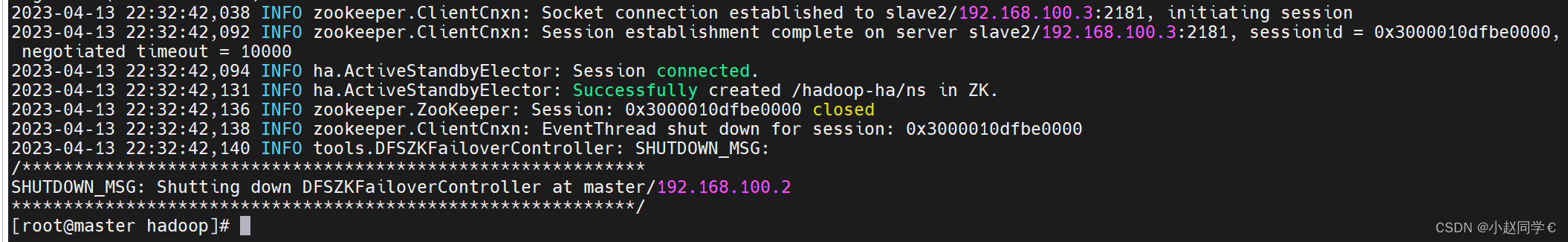

启动成功后查看三个节点jps情况

//master节点 [root@master hadoop]# jps 10051 DFSZKFailoverController 10723 NodeManager 10548 ResourceManager 5944 JobHistoryServer 9560 DataNode 11721 NameNode 12861 JournalNode 13261 Jps 591 QuorumPeerMain //slave1节点 [root@slave1 /]# jps 4896 DFSZKFailoverController 6275 JournalNode 4484 JobHistoryServer 4712 DataNode 6568 NameNode 6666 Jps 5052 ResourceManager 349 QuorumPeerMain 5167 NodeManager //slave2节点 [root@slave2 /]# jps 1400 DataNode 1610 NodeManager 2397 JournalNode 2494 Jps 175 QuorumPeerMain -

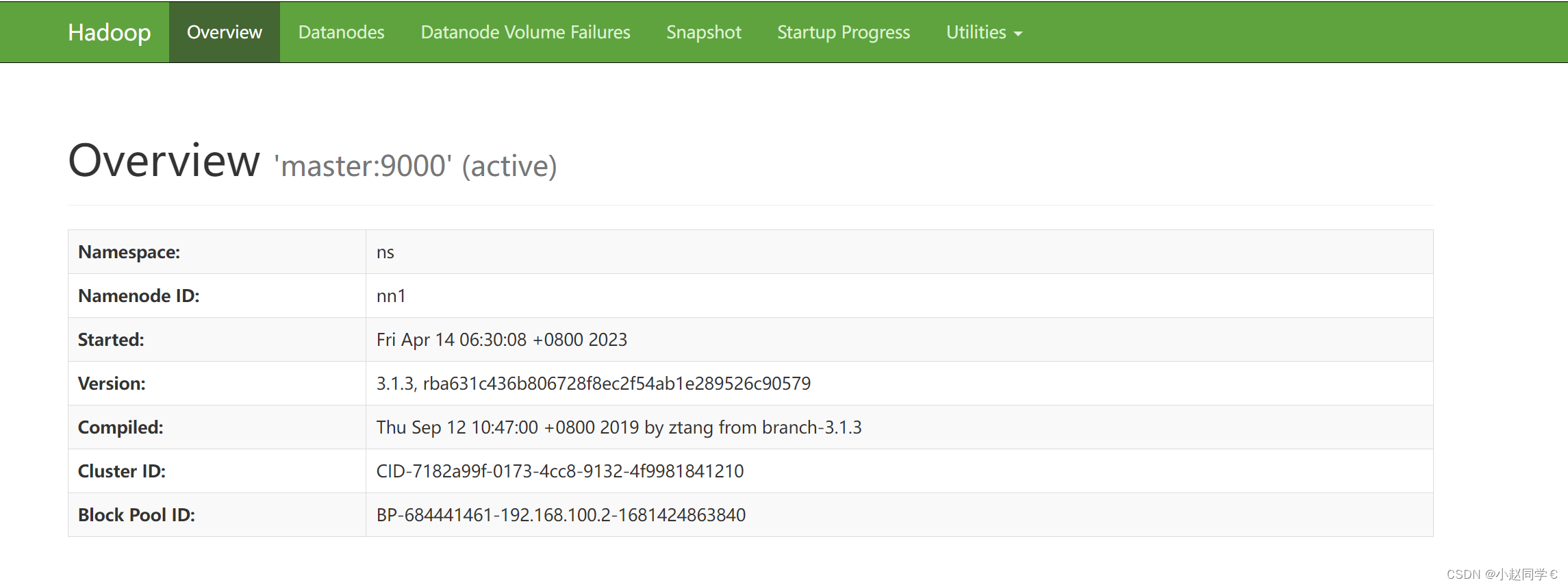

web端口查看

-

《~~~恭喜你搭建成功~~~》

风语者!平时喜欢研究各种技术,目前在从事后端开发工作,热爱生活、热爱工作。

U8W/U8W-Mini使用与常见问题解决

U8W/U8W-Mini使用与常见问题解决 stm32使用HAL库配置串口中断收发数据(保姆级教程)

stm32使用HAL库配置串口中断收发数据(保姆级教程) 分享几个国内免费的ChatGPT镜像网址(亲测有效)

分享几个国内免费的ChatGPT镜像网址(亲测有效) QT多线程的5种用法,通过使用线程解决UI主界面的耗时操作代码,防止界面卡死。...

QT多线程的5种用法,通过使用线程解决UI主界面的耗时操作代码,防止界面卡死。... SpringSecurity实现前后端分离认证授权

SpringSecurity实现前后端分离认证授权