
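[Android Source] Binder Mechanism 2 -- The addService Flow

0. How Binder inter-process communication works

A complete Binder IPC round typically goes like this:

First, the Binder driver creates a data receive buffer in kernel space. Next, it sets aside a kernel buffer and establishes two mappings: one between the kernel buffer and the kernel's data receive buffer, and one between the data receive buffer and the receiving process's user-space address range. The sending process then copies its data into the kernel buffer with copy_from_user(). Because that kernel buffer is mapped into the receiving process's user space, the data is effectively delivered to the receiver at the same moment, which completes one round of inter-process communication. Thanks to the mapping, no second copy is needed. See the figure below: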

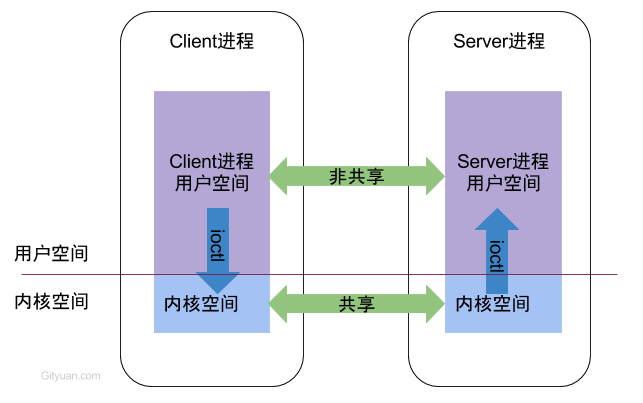
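The "no second copy" idea can be tried out in user space. The sketch below is only an analogy, not the binder driver: it maps one temporary file twice within a single process (the helper name sharedMappingSeesWrite is made up for illustration) and checks that data written through the first mapping is immediately visible through the second.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <sys/mman.h>
#include <unistd.h>

// Hypothetical helper: maps the same temporary file twice and checks that a
// write through one mapping is visible through the other without another copy.
// msg must be shorter than the 4096-byte mapping.
bool sharedMappingSeesWrite(const char* msg) {
    FILE* f = tmpfile();
    if (!f) return false;
    int fd = fileno(f);
    const std::size_t size = 4096;
    if (ftruncate(fd, size) != 0) { fclose(f); return false; }

    // Two independent views of the same pages, like "sender side" and "receiver side".
    void* a = mmap(nullptr, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    void* b = mmap(nullptr, size, PROT_READ, MAP_SHARED, fd, 0);

    bool ok = false;
    if (a != MAP_FAILED && b != MAP_FAILED) {
        std::strcpy(static_cast<char*>(a), msg);  // the only copy
        ok = std::strcmp(static_cast<const char*>(b), msg) == 0;
    }
    if (a != MAP_FAILED) munmap(a, size);
    if (b != MAP_FAILED) munmap(b, size);
    fclose(f);
    return ok;
}
```

In the real driver the two views live in different address spaces (kernel and receiver process), but the principle of sharing the same physical pages is the same.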

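Each Android process runs only within its own virtual address space. On a 32-bit system this is a 4 GB virtual address space, typically split into 3 GB of user space and 1 GB of kernel space; the size of the kernel portion can be adjusted through configuration parameters.

User space is not shared between processes, whereas kernel space is. Client-to-server communication relies on exactly this shared kernel memory for the low-level work, and both the client and the server process usually talk to the driver in kernel space through calls such as ioctl.

The addService flow on Android 10, using MediaPlayerService as the example: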

/frameworks/av/media/mediaserver/main_mediaserver.cpp

int main(int argc __unused, char **argv __unused)

{

signal(SIGPIPE, SIG_IGN);

// 1. Obtain the ProcessState object

sp<ProcessState> proc(ProcessState::self());

sp<IServiceManager> sm(defaultServiceManager());

ALOGI("ServiceManager: %p", sm.get());

AIcu_initializeIcuOrDie();

// 2. MediaPlayerService's addService call

MediaPlayerService::instantiate();

ResourceManagerService::instantiate();

registerExtensions();

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

}

1. Obtaining the ProcessState object

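ProcessState::self() is a singleton, which guarantees that each process has exactly one ProcessState object. As a result a process opens the binder device only once; the member variable mDriverFD holds the file descriptor of the binder driver and is used for all access to the device. BINDER_VM_SIZE = (1*1024*1024) - (4096*2), so the default size of the binder mapping is 1 MB minus 8 KB. DEFAULT_MAX_BINDER_THREADS = 15 is the default cap on the number of binder threads the driver may ask the process to spawn; the commonly quoted figure of 16 concurrent threads counts the main binder thread as well.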
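As a quick check of the size arithmetic, here is a minimal sketch. The helper binderVmSize is hypothetical and takes the page size as a parameter; 4096 is the value assumed in the text above.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper mirroring the BINDER_VM_SIZE expression quoted above.
// The page size is passed in explicitly instead of being read from the system.
constexpr std::size_t binderVmSize(std::size_t pageSize) {
    return (1 * 1024 * 1024) - (pageSize * 2);
}

// 1 MB - 8 KB = 1016 KB
static_assert(binderVmSize(4096) == 1040384, "1 MB minus two 4 KB pages");
```

So with 4 KB pages the mapping requested by mmap is 1,040,384 bytes, i.e. 1016 KB.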

/frameworks/native/libs/binder/ProcessState.cpp

sp<ProcessState> ProcessState::self()

{

Mutex::Autolock _l(gProcessMutex);

if (gProcess != nullptr) {

return gProcess;

}

gProcess = new ProcessState(kDefaultDriver);

return gProcess;

}

This is a singleton: the first call reaches the constructor, which opens the device driver.

ProcessState::ProcessState(const char *driver)

: mDriverName(String8(driver))

// Open the device driver via open_driver

, mDriverFD(open_driver(driver))

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS)

, mStarvationStartTimeMs(0)

, mManagesContexts(false)

, mBinderContextCheckFunc(nullptr)

, mBinderContextUserData(nullptr)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

, mCallRestriction(CallRestriction::NONE)

{

if (mDriverFD >= 0) {

// mmap the binder, providing a chunk of virtual address space to receive transactions.

// Use mmap to reserve a chunk of virtual address space for binder transactions

mVMStart = mmap(nullptr, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

// *sigh*

ALOGE("Using %s failed: unable to mmap transaction memory.\n", mDriverName.c_str());

close(mDriverFD);

mDriverFD = -1;

mDriverName.clear();

}

}

LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");

}

Opening the device driver: open_driver

static int open_driver(const char *driver)

{

// 1-0) Open the device driver: "/dev/binder"

int fd = open(driver, O_RDWR | O_CLOEXEC);

if (fd >= 0) {

int vers = 0;

// 1-1) Query the binder protocol version

status_t result = ioctl(fd, BINDER_VERSION, &vers);

if (result == -1) {

ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));

close(fd);

fd = -1;

}

if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {

ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)! ioctl() return value: %d",

vers, BINDER_CURRENT_PROTOCOL_VERSION, result);

close(fd);

fd = -1;

}

size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;

// 1-2) Set the maximum number of binder threads (15)

result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);

if (result == -1) {

ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));

}

} else {

ALOGW("Opening '%s' failed: %s\n", driver, strerror(errno));

}

return fd;

}

1-0) Open the device driver: "/dev/binder"

int fd = open(driver, O_RDWR | O_CLOEXEC)

xref: /drivers/staging/android/binder.c

static int binder_open(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc;

binder_debug(BINDER_DEBUG_OPEN_CLOSE, "binder_open: %d:%d\n",

current->group_leader->pid, current->pid);

// Allocate the binder_proc structure

proc = kzalloc(sizeof(*proc), GFP_KERNEL);

if (proc == NULL)

return -ENOMEM;

get_task_struct(current);

proc->tsk = current;

// Initialize the todo list (a doubly linked list)

INIT_LIST_HEAD(&proc->todo);

init_waitqueue_head(&proc->wait);

proc->default_priority = task_nice(current);

binder_lock(__func__);

binder_stats_created(BINDER_STAT_PROC);

// Add proc->proc_node to the global binder_procs list

hlist_add_head(&proc->proc_node, &binder_procs);

proc->pid = current->group_leader->pid;

// Initialize the delivered_death list as well

INIT_LIST_HEAD(&proc->delivered_death);

// Store proc in filp->private_data

filp->private_data = proc;

binder_unlock(__func__);

if (binder_debugfs_dir_entry_proc) {

char strbuf[11];

snprintf(strbuf, sizeof(strbuf), "%u", proc->pid);

proc->debugfs_entry = debugfs_create_file(strbuf, S_IRUGO,

binder_debugfs_dir_entry_proc, proc, &binder_proc_fops);

}

return 0;

}

1-1) Query the binder protocol version

status_t result = ioctl(fd, BINDER_VERSION, &vers)

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n",

proc->pid, current->pid, cmd, arg);*/

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

binder_lock(__func__);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

...

case BINDER_VERSION: {

struct binder_version __user *ver = ubuf;

if (size != sizeof(struct binder_version)) {

ret = -EINVAL;

goto err;

}

// put_user copies the protocol version into ver->protocol_version in user space

if (put_user(BINDER_CURRENT_PROTOCOL_VERSION,

&ver->protocol_version)) {

ret = -EINVAL;

goto err;

}

break;

}

The binder_get_thread function used above:

static struct binder_thread *binder_get_thread(struct binder_proc *proc)

{

struct binder_thread *thread = NULL;

struct rb_node *parent = NULL;

struct rb_node **p = &proc->threads.rb_node;

while (*p) {

parent = *p;

thread = rb_entry(parent, struct binder_thread, rb_node);

if (current->pid < thread->pid)

p = &(*p)->rb_left;

else if (current->pid > thread->pid)

p = &(*p)->rb_right;

else

break;

}

// Threads are kept in a red-black tree; *p == NULL means the current thread has no entry yet

if (*p == NULL) {

// Create a new binder_thread

thread = kzalloc(sizeof(*thread), GFP_KERNEL);

if (thread == NULL)

return NULL;

binder_stats_created(BINDER_STAT_THREAD);

thread->proc = proc;

thread->pid = current->pid;

init_waitqueue_head(&thread->wait);

INIT_LIST_HEAD(&thread->todo);

rb_link_node(&thread->rb_node, parent, p);

// Insert it into the red-black tree

rb_insert_color(&thread->rb_node, &proc->threads);

thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;

thread->return_error = BR_OK;

thread->return_error2 = BR_OK;

}

return thread;

}

put_user hands the result back to user space through &ver->protocol_version:

/frameworks/native/libs/binder/ProcessState.cpp

status_t result = ioctl(fd, BINDER_VERSION, &vers);

if (result == -1) {

ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));

close(fd);

fd = -1;

}

// Compare the user-space binder protocol version with the value returned by the driver

if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {

ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)! ioctl() return value: %d",

vers, BINDER_CURRENT_PROTOCOL_VERSION, result);

close(fd);

fd = -1;

}

1-2) Set the maximum number of binder threads (15)

copy_from_user copies the value from user space into proc->max_threads:

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

// Keep the argument passed down from user space

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n",

proc->pid, current->pid, cmd, arg);*/

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

binder_lock(__func__);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_SET_MAX_THREADS:

// Copy the user-space value into proc->max_threads

if (copy_from_user(&proc->max_threads, ubuf, sizeof(proc->max_threads))) {

ret = -EINVAL;

goto err;

}

break;

2. MediaPlayerService's addService call

MediaPlayerService::instantiate() registers MediaPlayerService with the Service Manager:

/frameworks/av/media/libmediaplayerservice/MediaPlayerService.cpp

void MediaPlayerService::instantiate() {

defaultServiceManager()->addService(

String16("media.player"), new MediaPlayerService());

}

As analyzed in the previous post, defaultServiceManager() returns:

BpServiceManager(new BpBinder(0)), so the call goes through the client-side proxy

and executes: addService(String16("media.player"), new MediaPlayerService());

/frameworks/native/libs/binder/IServiceManager.cpp

class BpServiceManager : public BpInterface<IServiceManager>

{

public:

explicit BpServiceManager(const sp<IBinder>& impl)

: BpInterface<IServiceManager>(impl)

{

}

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated, int dumpsysPriority) {

Parcel data, reply;

// 2-1) First look at some of Parcel's serialization methods

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

// 2-2) The data.writeStrongBinder(service) method

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

data.writeInt32(dumpsysPriority);

// 2-3) remote() is the BpBinder; call transact on it

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

For data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor()),

the value of IServiceManager::getInterfaceDescriptor() is "android.os.IServiceManager".

2-1) First look at some of Parcel's serialization methods

- The writeString16("media.player") method:

/frameworks/native/libs/binder/Parcel.cpp

status_t Parcel::writeString16(const String16& str)

{

return writeString16(str.string(), str.size());

}

=====

status_t Parcel::writeString16(const char16_t* str, size_t len)

{

if (str == nullptr) return writeInt32(-1);

// a) Write the length with writeInt32, growing the buffer if needed

// Assume this is the first write: "media.player", len = 12

status_t err = writeInt32(len);

if (err == NO_ERROR) {

// len is 12 and sizeof(char16_t) is 2,

// so len becomes 24

len *= sizeof(char16_t);

// b) Find the position to write at: writeInplace(26)

// the returned pointer is uint8_t* const data = mData+mDataPos;

uint8_t* data = (uint8_t*)writeInplace(len+sizeof(char16_t));

if (data) {

// Copy str into data

memcpy(data, str, len);

*reinterpret_cast<char16_t*>(data+len) = 0;

return NO_ERROR;

}

err = mError;

}

return err;

}

// a) Write the length with writeInt32, growing the buffer if needed

status_t err = writeInt32(len)

// val is 12

status_t Parcel::writeInt32(int32_t val)

{

return writeAligned(val);

}

======

template<class T>

status_t Parcel::writeAligned(T val) {

COMPILE_TIME_ASSERT_FUNCTION_SCOPE(PAD_SIZE_UNSAFE(sizeof(T)) == sizeof(T));

// initState(), called when the Parcel is constructed, sets mDataCapacity to 0, so the condition below is not met on the first write

if ((mDataPos+sizeof(val)) <= mDataCapacity) {

restart_write:

// On the first write mDataPos is 0

// so this sets *reinterpret_cast<int32_t*>(mData+0) = 12

// *reinterpret_cast<int32_t*>(mData) = 12

// *mData = 12

*reinterpret_cast<T*>(mData+mDataPos) = val;

// finishWrite updates mDataPos and mDataSize

// mDataPos += 4;

return finishWrite(sizeof(val));

}

// On the first write, execution falls through to here to grow the buffer

status_t err = growData(sizeof(val));

// If there was no error, jump back to restart_write:

if (err == NO_ERROR) goto restart_write;

return err;

}

==========

status_t Parcel::growData(size_t len)

{

if (len > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

// (0+4)*3/2 = 6

size_t newSize = ((mDataSize+len)*3)/2;

return (newSize <= mDataSize)

? (status_t) NO_MEMORY

: continueWrite(newSize);

}
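The growth rule in growData() is easy to check by hand. The sketch below restates only the arithmetic; grownCapacity is a hypothetical free function, not part of Parcel.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical free function reproducing the arithmetic in growData():
// the new capacity is 1.5x the size that would be needed after the write.
constexpr std::size_t grownCapacity(std::size_t dataSize, std::size_t len) {
    return ((dataSize + len) * 3) / 2;
}

// First writeInt32 on an empty Parcel: mDataSize = 0, len = 4.
static_assert(grownCapacity(0, 4) == 6, "first 4-byte write asks for 6 bytes");
```

Note that the result depends on mDataSize at the time of the call: once 4 bytes have been written, a request for 28 more bytes yields grownCapacity(4, 28) == 48.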

This executes continueWrite(6):

status_t Parcel::continueWrite(size_t desired)

{

if (desired > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

...

} else {

// This is the first data. Easy!

// Allocate 6 bytes

uint8_t* data = (uint8_t*)malloc(desired);

if (!data) {

mError = NO_MEMORY;

return NO_MEMORY;

}

if(!(mDataCapacity == 0 && mObjects == nullptr

&& mObjectsCapacity == 0)) {

ALOGE("continueWrite: %zu/%p/%zu/%zu", mDataCapacity, mObjects, mObjectsCapacity, desired);

}

LOG_ALLOC("Parcel %p: allocating with %zu capacity", this, desired);

pthread_mutex_lock(&gParcelGlobalAllocSizeLock);

// Update the global allocated size

gParcelGlobalAllocSize += desired;

// Count the number of allocations

gParcelGlobalAllocCount++;

pthread_mutex_unlock(&gParcelGlobalAllocSizeLock);

// Point the member mData at the new buffer

mData = data;

// Reset mDataSize and mDataPos to 0

mDataSize = mDataPos = 0;

ALOGV("continueWrite Setting data size of %p to %zu", this, mDataSize);

ALOGV("continueWrite Setting data pos of %p to %zu", this, mDataPos);

// Set the data capacity to 6

mDataCapacity = desired;

}

return NO_ERROR;

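}

// b) Find the position to write at: writeInplace(26)

uint8_t* data = (uint8_t*)writeInplace(len+sizeof(char16_t))

Recall the state left by continueWrite(6); finishWrite(4) has since advanced mDataPos and mDataSize to 4:

// mData points at the new buffer

mData = data;

// mDataSize and mDataPos were reset to 0

mDataSize = mDataPos = 0;

// the data capacity is 6

mDataCapacity = desired;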

void* Parcel::writeInplace(size_t len)

{

if (len > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return nullptr;

}

// pad_size(26) = 28

const size_t padded = pad_size(len);

// sanity check for integer overflow

if (mDataPos+padded < mDataPos) {

return nullptr;

}

if ((mDataPos+padded) <= mDataCapacity) {

restart_write:

//printf("Writing %ld bytes, padded to %ld\n", len, padded);

uint8_t* const data = mData+mDataPos;

// Need to pad at end?

if (padded != len) {

#if BYTE_ORDER == BIG_ENDIAN

static const uint32_t mask[4] = {

0x00000000, 0xffffff00, 0xffff0000, 0xff000000

};

#endif

#if BYTE_ORDER == LITTLE_ENDIAN

static const uint32_t mask[4] = {

0x00000000, 0x00ffffff, 0x0000ffff, 0x000000ff

};

#endif

//printf("Applying pad mask: %p to %p\n", (void*)mask[padded-len],

// *reinterpret_cast<void**>(data+padded-4));

*reinterpret_cast<uint32_t*>(data+padded-4) &= mask[padded-len];

}

finishWrite(padded);

return data;

}

// >> Not enough capacity: grow the buffer with growData(28)

status_t err = growData(padded);

if (err == NO_ERROR) goto restart_write;

return nullptr;

}

How padded ends up as 28:

// PAD_SIZE_UNSAFE(26) = (26+3) & ~3 = 28

#define PAD_SIZE_UNSAFE(s) (((s)+3)&~3)

static size_t pad_size(size_t s) {

if (s > (SIZE_T_MAX - 3)) {

abort();

}

return PAD_SIZE_UNSAFE(s);

}

>> Grow the buffer again: growData(28)

status_t Parcel::growData(size_t len)

{

if (len > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

// mDataSize is already 4 after writeInt32, so (4+28)*3/2 = 48

size_t newSize = ((mDataSize+len)*3)/2;

return (newSize <= mDataSize)

? (status_t) NO_MEMORY

// Executes continueWrite(48)

: continueWrite(newSize);

}

// Executes continueWrite(48)

status_t Parcel::continueWrite(size_t desired)

{

if (desired > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

...

} else if (mData) {

if (objectsSize < mObjectsSize) {

// Need to release refs on any objects we are dropping.

const sp<ProcessState> proc(ProcessState::self());

for (size_t i=objectsSize; i<mObjectsSize; i++) {

const flat_binder_object* flat

= reinterpret_cast<flat_binder_object*>(mData+mObjects[i]);

if (flat->hdr.type == BINDER_TYPE_FD) {

// will need to rescan because we may have lopped off the only FDs

mFdsKnown = false;

}

release_object(proc, *flat, this, &mOpenAshmemSize);

}

if (objectsSize == 0) {

free(mObjects);

mObjects = nullptr;

mObjectsCapacity = 0;

} else {

binder_size_t* objects =

(binder_size_t*)realloc(mObjects, objectsSize*sizeof(binder_size_t));

if (objects) {

mObjects = objects;

mObjectsCapacity = objectsSize;

}

}

mObjectsSize = objectsSize;

mNextObjectHint = 0;

mObjectsSorted = false;

}

// We own the data, so we can just do a realloc().

if (desired > mDataCapacity) {

// Reallocate mData to 48 bytes

uint8_t* data = (uint8_t*)realloc(mData, desired);

if (data) {

LOG_ALLOC("Parcel %p: continue from %zu to %zu capacity", this, mDataCapacity,

desired);

pthread_mutex_lock(&gParcelGlobalAllocSizeLock);

gParcelGlobalAllocSize += desired;

gParcelGlobalAllocSize -= mDataCapacity;

pthread_mutex_unlock(&gParcelGlobalAllocSizeLock);

// Update mData

mData = data;

// Set mDataCapacity to 48

mDataCapacity = desired;

} else {

mError = NO_MEMORY;

return NO_MEMORY;

}

} else {

if (mDataSize > desired) {

mDataSize = desired;

ALOGV("continueWrite Setting data size of %p to %zu", this, mDataSize);

}

if (mDataPos > desired) {

mDataPos = desired;

ALOGV("continueWrite Setting data pos of %p to %zu", this, mDataPos);

}

}

>> finishWrite(28) then updates mDataPos:

status_t Parcel::finishWrite(size_t len)

{

if (len > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

//printf("Finish write of %d\n", len);

mDataPos += len;

ALOGV("finishWrite Setting data pos of %p to %zu", this, mDataPos);

if (mDataPos > mDataSize) {

mDataSize = mDataPos;

ALOGV("finishWrite Setting data size of %p to %zu", this, mDataSize);

}

//printf("New pos=%d, size=%d\n", mDataPos, mDataSize);

return NO_ERROR;

}

2-2) The data.writeStrongBinder(service) method

status_t Parcel::writeStrongBinder(const sp<IBinder>& val)

{

return flatten_binder(ProcessState::self(), val, this);

}

=============

status_t flatten_binder(const sp<ProcessState>& /*proc*/,

const sp<IBinder>& binder, Parcel* out)

{

// flat_binder_object is the struct that carries a binder object across processes

flat_binder_object obj;

if (IPCThreadState::self()->backgroundSchedulingDisabled()) {

/* minimum priority for all nodes is nice 0 */

obj.flags = FLAT_BINDER_FLAG_ACCEPTS_FDS;

} else {

/* minimum priority for all nodes is MAX_NICE(19) */

obj.flags = 0x13 | FLAT_BINDER_FLAG_ACCEPTS_FDS;

}

if (binder != nullptr) {

// Get the local binder (BBinder), if there is one

BBinder *local = binder->localBinder();

if (!local) {

BpBinder *proxy = binder->remoteBinder();

if (proxy == nullptr) {

ALOGE("null proxy");

}

const int32_t handle = proxy ? proxy->handle() : 0;

obj.hdr.type = BINDER_TYPE_HANDLE;

obj.binder = 0; /* Don't pass uninitialized stack data to a remote process */

obj.handle = handle;

obj.cookie = 0;

} else {

if (local->isRequestingSid()) {

obj.flags |= FLAT_BINDER_FLAG_TXN_SECURITY_CTX;

}

obj.hdr.type = BINDER_TYPE_BINDER;

// The binder field of flat_binder_object holds the weak-reference object

obj.binder = reinterpret_cast<uintptr_t>(local->getWeakRefs());

// cookie holds the BBinder pointer

obj.cookie = reinterpret_cast<uintptr_t>(local);

}

} else {

obj.hdr.type = BINDER_TYPE_BINDER;

obj.binder = 0;

obj.cookie = 0;

}

return finish_flatten_binder(binder, obj, out);

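}

The key assignments are:

// The binder field of flat_binder_object holds the weak-reference object

obj.binder = reinterpret_cast<uintptr_t>(local->getWeakRefs());

// cookie holds the BBinder pointer

obj.cookie = reinterpret_cast<uintptr_t>(local);

This flattens the Binder object into a flat_binder_object:

- For a Binder entity (a local object), cookie records the pointer to the Binder entity;

- For a Binder proxy, handle records the proxy's handle.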
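The two cases in the list above can be sketched with simplified stand-in types. Everything here (FlatObject, LocalEntity, flattenLocal, flattenProxy) is hypothetical and only mirrors the shape of flatten_binder(); in the real flat_binder_object the binder and handle fields share a union.

```cpp
#include <cassert>
#include <cstdint>

// Simplified stand-ins, NOT the real kernel/libbinder definitions.
enum class FlatType : uint32_t { Binder, Handle };

struct FlatObject {
    FlatType type;
    uintptr_t binder;  // weak-reference pointer for a local entity, else 0
    uint32_t handle;   // driver handle for a proxy, else 0
    uintptr_t cookie;  // pointer to the local entity, else 0
};

struct LocalEntity { int weakRefs; };  // hypothetical local object

// Local entity: record the weak-ref pointer and the object pointer itself.
FlatObject flattenLocal(LocalEntity* local) {
    FlatObject obj{};
    obj.type = FlatType::Binder;
    obj.binder = reinterpret_cast<uintptr_t>(&local->weakRefs);
    obj.cookie = reinterpret_cast<uintptr_t>(local);
    return obj;
}

// Proxy: only the handle is meaningful; pointers stay zero.
FlatObject flattenProxy(uint32_t handle) {
    FlatObject obj{};
    obj.type = FlatType::Handle;
    obj.handle = handle;
    return obj;
}
```

For addService, new MediaPlayerService() is a local object, so the first path is the one that applies.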

// Write the flat_binder_object struct into the Parcel

inline static status_t finish_flatten_binder(

const sp<IBinder>& /*binder*/, const flat_binder_object& flat, Parcel* out)

{

return out->writeObject(flat, false);

}

2-3) remote() is the BpBinder; call transact on it

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

/frameworks/native/libs/binder/BpBinder.cpp

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

Here the arguments are:

mHandle = 0;

code = ADD_SERVICE_TRANSACTION

flags = 0

/frameworks/native/libs/binder/IPCThreadState.cpp

The actual transact work is delegated to the IPCThreadState class. First, its self() method:

IPCThreadState* IPCThreadState::self()

{

if (gHaveTLS) {

restart:

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

return new IPCThreadState;

}

if (gShutdown) {

ALOGW("Calling IPCThreadState::self() during shutdown is dangerous, expect a crash.\n");

return nullptr;

}

pthread_mutex_lock(&gTLSMutex);

if (!gHaveTLS) {

int key_create_value = pthread_key_create(&gTLS, threadDestructor);

if (key_create_value != 0) {

pthread_mutex_unlock(&gTLSMutex);

ALOGW("IPCThreadState::self() unable to create TLS key, expect a crash: %s\n",

strerror(key_create_value));

return nullptr;

}

gHaveTLS = true;

}

pthread_mutex_unlock(&gTLSMutex);

goto restart;

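}

TLS stands for thread-local storage. Every thread has its own private TLS, which is not shared with other threads. Its contents are read and written with pthread_getspecific/pthread_setspecific. Here, self() fetches the IPCThreadState object stored in the calling thread's TLS and creates one on first use.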
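The per-thread lookup can be reproduced with plain pthreads. This is a minimal sketch under some assumptions: ThreadState and threadStateSelf are hypothetical names, and pthread_once replaces the mutex-plus-flag logic of the real self() for brevity.

```cpp
#include <cassert>
#include <pthread.h>

struct ThreadState { int id; };  // hypothetical per-thread object

static pthread_key_t gKey;
static pthread_once_t gKeyOnce = PTHREAD_ONCE_INIT;

// Runs when a thread exits and frees that thread's object.
static void destroyState(void* p) { delete static_cast<ThreadState*>(p); }
static void makeKey() { pthread_key_create(&gKey, destroyState); }

// Returns the calling thread's object, creating it on first use.
ThreadState* threadStateSelf() {
    pthread_once(&gKeyOnce, makeKey);
    auto* st = static_cast<ThreadState*>(pthread_getspecific(gKey));
    if (st == nullptr) {
        st = new ThreadState{0};
        pthread_setspecific(gKey, st);
    }
    return st;
}

static void* worker(void* out) {
    *static_cast<ThreadState**>(out) = threadStateSelf();
    return nullptr;
}

// Returns true when a second thread gets a different object than the caller.
// Only the addresses are compared; the worker's object is freed when it exits.
bool otherThreadGetsOwnState() {
    ThreadState* mine = threadStateSelf();
    ThreadState* theirs = nullptr;
    pthread_t t;
    if (pthread_create(&t, nullptr, worker, &theirs) != 0) return false;
    pthread_join(t, nullptr);
    return theirs != nullptr && theirs != mine;
}
```

Repeated calls on the same thread return the same pointer, while another thread gets its own instance, which is why each binder thread can keep its own mIn/mOut buffers.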

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err;

// TF_ACCEPT_FDS = 0x10, so flags is now 0x10

flags |= TF_ACCEPT_FDS;

// 2-3-1) writeTransactionData packages the data passed in

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);

if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

}

// TF_ONE_WAY = 0x01 is not set in flags, so this condition holds

if ((flags & TF_ONE_WAY) == 0) {

if (reply) {

// 2-3-2) Wait for the reply

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

} else {

err = waitForResponse(nullptr, nullptr);

}

return err;

}

2-3-1) writeTransactionData packages the data passed in

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

// tr holds the transaction data

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

// Pointer to the Parcel's IPC data

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

// Pointer to the offsets array that locates the binder objects

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

// Parcel mOut;

// Write the command: BC_TRANSACTION

mOut.writeInt32(cmd);

// Append tr to the mOut Parcel

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

The content referenced by tr.data.ptr.buffer is equivalent to what these calls wrote:

writeInt32(IPCThreadState::self()->getStrictModePolicy() |

STRICT_MODE_PENALTY_GATHER);

writeString16("android.os.IServiceManager");

writeString16("media.player");

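writeStrongBinder(new MediaPlayerService());

During transact, the binder_transaction_data is filled in first. The important member variables of Parcel data map onto it as follows:

- mDataSize: stored in data_size, the size of the transaction data;

- mData: stored in data.ptr.buffer, the start address of the transaction data;

- mObjectsSize: the number of flat_binder_object structs; stored in offsets_size after multiplying by sizeof(binder_size_t);

- mObjects: stored in data.ptr.offsets, the offsets of the flat_binder_object structs within the data.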
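The mapping in the list above can be sketched with two simplified structs. MiniParcel, MiniTransactionData and describe are hypothetical stand-ins for Parcel, binder_transaction_data and writeTransactionData; offsets are assumed to be 64-bit here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Simplified stand-in for Parcel.
struct MiniParcel {
    std::vector<uint8_t> data;      // plays the role of mData / mDataSize
    std::vector<uint64_t> objects;  // plays the role of mObjects / mObjectsSize
};

// Simplified stand-in for binder_transaction_data.
struct MiniTransactionData {
    uint64_t data_size;
    uint64_t offsets_size;
    uintptr_t buffer;   // data.ptr.buffer
    uintptr_t offsets;  // data.ptr.offsets
};

// Only pointers and sizes are recorded; the payload itself is not copied here.
MiniTransactionData describe(const MiniParcel& p) {
    MiniTransactionData tr{};
    tr.data_size = p.data.size();
    tr.buffer = reinterpret_cast<uintptr_t>(p.data.data());
    tr.offsets_size = p.objects.size() * sizeof(uint64_t);  // bytes, not a count
    tr.offsets = reinterpret_cast<uintptr_t>(p.objects.data());
    return tr;
}
```

The point of the design is that the struct written to mOut stays small: the driver later follows buffer and offsets to copy the actual payload.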

2-3-2) Wait for the reply: waitForResponse

err = waitForResponse(reply);

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

// 2-3-2-1) Call talkWithDriver

if ((err=talkWithDriver()) < NO_ERROR) break;

cmd = (uint32_t)mIn.readInt32();

// 2-3-2-2) Wait for the reply from the servicemanager process

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

...

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

}

} else {

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

continue;

}

}

goto finish;

2-3-2-1) Call talkWithDriver

waitForResponse mainly calls talkWithDriver to interact with the Binder driver.

Its declaration in the header is:

status_t talkWithDriver(bool doReceive=true);

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

// Check that we have a file descriptor for the binder driver

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

// The write buffer is mOut's data, which holds the command and the transaction data written above

bwr.write_buffer = (uintptr_t)mOut.data();

// Both values are true here

if (doReceive && needRead) {

// 256

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

#if defined(__ANDROID__)

// Talk to the binder driver, passing the address of the binder_write_read struct

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#endif

} while (err == -EINTR);

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else {

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

return NO_ERROR;

}

return err;

}

// Talk to the binder driver, passing the address of the binder_write_read struct

/drivers/staging/android/binder.c

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n",

proc->pid, current->pid, cmd, arg);*/

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

binder_lock(__func__);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

This executes binder_ioctl_write_read:

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

// Keep the argument passed in

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

// Copy the argument from user space into the binder_write_read struct

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

// Given the arguments set up earlier, this condition holds

if (bwr.write_size > 0) {

// 2-3-2-1-1) Call binder_thread_write to process the write buffer

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

// read_size is also greater than 0

if (bwr.read_size > 0) {

// Call binder_thread_read to fill the read buffer [not covered here]

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

// 2-3-2-1-2) copy_to_user copies bwr back into the buffer supplied by the user

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}

// Call binder_thread_write to process the write buffer

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

where bwr.write_buffer == (uintptr_t)mOut.data() and bwr.write_size == mOut.dataSize()

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

// buffer points at mOut.data()

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

// *consumed is 0

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

// Read cmd from user space: BC_TRANSACTION

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

// Advance ptr

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

binder_stats.bc[_IOC_NR(cmd)]++;

proc->stats.bc[_IOC_NR(cmd)]++;

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

// Copy the user-space data into the binder_transaction_data struct

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

// Advance ptr past tr

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

This executes binder_transaction(proc, thread, &tr, false):

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end;

binder_size_t off_min;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error;

e = binder_transaction_log_add(&binder_transaction_log);

e->call_type = reply ? 2 : !!(tr->flags & TF_ONE_WAY);

e->from_proc = proc->pid;

e->from_thread = thread->pid;

e->target_handle = tr->target.handle;

e->data_size = tr->data_size;

e->offsets_size = tr->offsets_size;

if (reply) {

// ... (reply is false here, so this branch is skipped)

} else {

if (tr->target.handle) {

// Not taken: servicemanager's handle is 0

} else {

// The target node is servicemanager's node: binder_context_mgr_node

target_node = binder_context_mgr_node;

if (target_node == NULL) {

return_error = BR_DEAD_REPLY;

goto err_no_context_mgr_node;

}

}

e->to_node = target_node->debug_id;

// Set target_proc

target_proc = target_node->proc;

if (target_proc == NULL) {

return_error = BR_DEAD_REPLY;

goto err_dead_binder;

}

if (security_binder_transaction(proc->tsk, target_proc->tsk) < 0) {

return_error = BR_FAILED_REPLY;

goto err_invalid_target_handle;

}

if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {

struct binder_transaction *tmp;

tmp = thread->transaction_stack;

if (tmp->to_thread != thread) {

binder_user_error("%d:%d got new transaction with bad transaction stack, transaction %d has target %d:%d\n",

proc->pid, thread->pid, tmp->debug_id,

tmp->to_proc ? tmp->to_proc->pid : 0,

tmp->to_thread ?

tmp->to_thread->pid : 0);

return_error = BR_FAILED_REPLY;

goto err_bad_call_stack;

}

while (tmp) {

if (tmp->from && tmp->from->proc == target_proc)

target_thread = tmp->from;

tmp = tmp->from_parent;

}

}

}

if (target_thread) {

e->to_thread = target_thread->pid;

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

e->to_proc = target_proc->pid;

/* TODO: reuse incoming transaction for reply */

// Allocate a pending transaction t

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_t_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION);

// Allocate a pending work item tcomplete

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

if (tcomplete == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_tcomplete_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

t->debug_id = ++binder_last_id;

e->debug_id = t->debug_id;

// ...

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

t->priority = task_nice(current);

trace_binder_transaction(reply, t, target_node);

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

if (t->buffer == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_alloc_buf_failed;

}

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

// target_node is binder_context_mgr_node (servicemanager's node; its ->proc is target_proc)

t->buffer->target_node = target_node;

trace_binder_transaction_alloc_buf(t->buffer);

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

offp = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *)));

// Copy the user-space tr->data.ptr.buffer into t->buffer->data

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

// ...

}

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {

// ...

}

if (!IS_ALIGNED(tr->offsets_size, sizeof(binder_size_t))) {

binder_user_error("%d:%d got transaction with invalid offsets size, %lld\n",

proc->pid, thread->pid, (u64)tr->offsets_size);

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

off_end = (void *)offp + tr->offsets_size;

off_min = 0;

// ...

for (; offp < off_end; offp++) {

struct flat_binder_object *fp;

fp = (struct flat_binder_object *)(t->buffer->data + *offp);

off_min = *offp + sizeof(struct flat_binder_object);

switch (fp->type) {

// Here the type is BINDER_TYPE_BINDER (the sender set obj.hdr.type = BINDER_TYPE_BINDER)

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct binder_ref *ref;

struct binder_node *node = binder_get_node(proc, fp->binder);

// node is NULL the first time

if (node == NULL) {

// Allocate a node: fp->binder is the BBinder's weak-reference object; fp->cookie is the BBinder itself

node = binder_new_node(proc, fp->binder, fp->cookie);

if (node == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_new_node_failed;

}

node->min_priority = fp->flags & FLAT_BINDER_FLAG_PRIORITY_MASK;

node->accept_fds = !!(fp->flags & FLAT_BINDER_FLAG_ACCEPTS_FDS);

}

if (security_binder_transfer_binder(proc->tsk, target_proc->tsk)) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_for_node_failed;

}

ref = binder_get_ref_for_node(target_proc, node);

if (ref == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_for_node_failed;

}

// Rewrite the type to BINDER_TYPE_HANDLE

if (fp->type == BINDER_TYPE_BINDER)

fp->type = BINDER_TYPE_HANDLE;

else

fp->type = BINDER_TYPE_WEAK_HANDLE;

fp->handle = ref->desc;

binder_inc_ref(ref, fp->type == BINDER_TYPE_HANDLE,

&thread->todo);

trace_binder_transaction_node_to_ref(t, node, ref);

} break;

case BINDER_TYPE_HANDLE:

case BINDER_TYPE_WEAK_HANDLE: {

// ...

} else if (!(t->flags & TF_ONE_WAY)) {

BUG_ON(t->buffer->async_transaction != 0);

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

BUG_ON(target_node == NULL);

BUG_ON(t->buffer->async_transaction != 1);

if (target_node->has_async_transaction) {

target_list = &target_node->async_todo;

target_wait = NULL;

} else

target_node->has_async_transaction = 1;

}

// Add the pending transaction to target_list

t->work.type = BINDER_WORK_TRANSACTION;

list_add_tail(&t->work.entry, target_list);

// and add the pending-completion work item to the current thread's todo list

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

// Wake up the Service Manager process

if (target_wait)

wake_up_interruptible(target_wait);

return;

err_get_unused_fd_failed:

// Error handling

// ...

Here t is a binder_transaction:

// Allocate a pending transaction t, which is a struct binder_transaction

t = kzalloc(sizeof(*t), GFP_KERNEL);

The structure is defined as:

struct binder_transaction {

int debug_id;

struct binder_work work;

struct binder_thread *from;

struct binder_transaction *from_parent;

struct binder_proc *to_proc;

struct binder_thread *to_thread;

struct binder_transaction *to_parent;

unsigned need_reply:1;

/* unsigned is_dead:1; */ /* not used at the moment */

struct binder_buffer *buffer;

unsigned int code;

unsigned int flags;

long priority;

long saved_priority;

kuid_t sender_euid;

};

============

// struct binder_work is defined as follows:

struct binder_work {

struct list_head entry;

enum {

BINDER_WORK_TRANSACTION = 1,

BINDER_WORK_TRANSACTION_COMPLETE,

BINDER_WORK_NODE,

BINDER_WORK_DEAD_BINDER,

BINDER_WORK_DEAD_BINDER_AND_CLEAR,

BINDER_WORK_CLEAR_DEATH_NOTIFICATION,

} type;

};

The buffer member is a pointer to a binder_buffer:

// Allocate the buffer; tr is read from mOut.data()

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

===

Copy the user-space tr->data.ptr.buffer into t->buffer->data. [The servicemanager process can then read this data.]
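The transaction buffer holds the payload first and the offsets array second, with the offsets starting at the payload size rounded up to pointer alignment. Below is a minimal sketch of that layout arithmetic together with an offset sanity check. The names `SK_ALIGN`, `sk_offsets_start` and `sk_validate_offsets` are hypothetical and not part of the driver, and the checks are only loosely modelled on what the driver does.

```c
#include <stddef.h>
#include <stdint.h>

/* Round x up to a multiple of a (a must be a power of two), like the kernel's ALIGN(). */
#define SK_ALIGN(x, a) (((size_t)(x) + ((size_t)(a) - 1)) & ~((size_t)(a) - 1))

/* Byte offset inside the transaction buffer at which the offsets array begins. */
size_t sk_offsets_start(size_t data_size)
{
    return SK_ALIGN(data_size, sizeof(void *));
}

/*
 * Check that every entry in offs[] names an object of obj_size bytes that
 * lies fully inside the payload, is 4-byte aligned, and does not overlap
 * the previous object. Returns 0 if all entries pass, -1 otherwise.
 */
int sk_validate_offsets(const uint64_t *offs, size_t count,
                        size_t data_size, size_t obj_size)
{
    uint64_t off_min = 0; /* first byte at which the next object may start */

    for (size_t i = 0; i < count; i++) {
        uint64_t off = offs[i];

        if (data_size < obj_size || off > data_size - obj_size)
            return -1; /* object would run past the end of the payload */
        if (off < off_min || (off & 3) != 0)
            return -1; /* overlaps the previous object or is misaligned */
        off_min = off + obj_size;
    }
    return 0;
}
```

For a 13-byte payload the offsets array would start at byte 16 on both 32-bit and 64-bit builds, which is the same rounding that the `ALIGN(tr->data_size, sizeof(void *))` expression in the listing performs.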

// Copy the user-space tr->data.ptr.buffer into t->buffer->data

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

// binder_transaction also queues the work entry that has to be processed

t->work.type = BINDER_WORK_TRANSACTION;

// target_list is &target_proc->todo here, because target_thread is NULL

list_add_tail(&t->work.entry, target_list);

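The Binder entity MediaPlayerService is being handed to target_proc, that is, Service Manager, to manage, so Service Manager needs to hold a reference to MediaPlayerService. binder_get_ref_for_node therefore creates a reference to MediaPlayerService, and binder_inc_ref increments its reference count so that the reference is not destroyed while it is still in use. Note that by this point the flat_binder_object in t->buffer has had its type changed to BINDER_TYPE_HANDLE and its handle set to ref->desc. It no longer matches what the sender wrote, because this flat_binder_object is ultimately delivered to Service Manager, and Service Manager can only refer to the Binder entity through a handle value.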
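The rewrite from a local object to a handle can be sketched in user space as follows. This is a simplified model under stated assumptions: every `sk_` name is hypothetical, the reference table is a fixed array rather than the driver's red-black trees, and `binder` and `handle` are kept as separate fields for readability, whereas the real flat_binder_object stores them in a union.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for BINDER_TYPE_BINDER / BINDER_TYPE_HANDLE. */
enum { SK_TYPE_BINDER = 1, SK_TYPE_HANDLE = 2 };

struct sk_flat_object {
    int       type;   /* SK_TYPE_BINDER at the sender, SK_TYPE_HANDLE at the receiver */
    uintptr_t binder; /* sender-side identity of the object */
    uint32_t  handle; /* receiver-side descriptor, filled in during translation */
};

#define SK_MAX_REFS 16

/* One table per receiving process: descriptor i + 1 refers to node[i]. */
struct sk_ref_table {
    uintptr_t node[SK_MAX_REFS];
    int       strong[SK_MAX_REFS]; /* strong reference count per descriptor */
    size_t    count;
};

/* Find or create the descriptor for node; returns 0 when the table is full. */
uint32_t sk_get_ref_for_node(struct sk_ref_table *t, uintptr_t node)
{
    for (size_t i = 0; i < t->count; i++)
        if (t->node[i] == node)
            return (uint32_t)(i + 1);
    if (t->count == SK_MAX_REFS)
        return 0;
    t->node[t->count] = node;
    t->strong[t->count] = 0;
    t->count++;
    return (uint32_t)t->count;
}

/*
 * Rewrite fp in place so that it is meaningful in the target process:
 * look up (or create) the target's descriptor, take a strong reference,
 * and switch the type from "binder" to "handle".
 * Returns 0 on success, -1 on failure.
 */
int sk_translate(struct sk_ref_table *target, struct sk_flat_object *fp)
{
    uint32_t desc;

    if (fp->type != SK_TYPE_BINDER)
        return -1;
    desc = sk_get_ref_for_node(target, fp->binder);
    if (desc == 0)
        return -1;
    target->strong[desc - 1]++;
    fp->type = SK_TYPE_HANDLE;
    fp->handle = desc;
    return 0;
}
```

Sending the same object twice yields the same descriptor with a higher reference count, which is the property that lets the receiver treat a handle as a stable name for the remote object.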

3. Wake up the Service Manager process and cache the values passed from kernel space

Service Manager is sleeping inside binder_thread_read, where it called wait_event_interruptible.
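Once woken, the reading thread has to decide which list to take work from. The sketch below models only that choice: the thread's own todo list is served first, and the process-wide list is used only when the thread is idle. All `sk_` names are hypothetical, the lists are small fixed-size FIFOs instead of kernel list_heads, and sleeping is represented by returning false.

```c
#include <stdbool.h>
#include <stddef.h>

#define SK_TODO_CAP 8

/* A tiny FIFO standing in for a kernel todo list. */
struct sk_todo {
    int    work[SK_TODO_CAP];
    size_t head; /* index of the next item to take */
    size_t tail; /* index one past the last queued item */
};

bool sk_todo_empty(const struct sk_todo *q)
{
    return q->head == q->tail;
}

/* Append one work item; returns false when the FIFO is full. */
bool sk_todo_push(struct sk_todo *q, int w)
{
    if (q->tail == SK_TODO_CAP)
        return false;
    q->work[q->tail++] = w;
    return true;
}

struct sk_reader {
    struct sk_todo todo;                  /* per-thread list */
    bool           has_transaction_stack; /* true while inside a transaction */
};

/*
 * Pick the next work item for a reader thread. Process-wide work is only
 * eligible when the thread has no transaction in flight and no private work.
 * Returns false when nothing is eligible (the thread would go back to sleep).
 */
bool sk_next_work(struct sk_todo *proc_todo, struct sk_reader *t, int *out)
{
    bool wait_for_proc_work =
        !t->has_transaction_stack && sk_todo_empty(&t->todo);

    if (!sk_todo_empty(&t->todo)) {
        *out = t->todo.work[t->todo.head++];
        return true;
    }
    if (wait_for_proc_work && !sk_todo_empty(proc_todo)) {
        *out = proc_todo->work[proc_todo->head++];
        return true;
    }
    return false;
}
```

In the addService case the transaction was queued on the process-wide list, so an idle Service Manager thread picks it up through the second branch.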

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

wait_for_proc_work = thread->transaction_stack == NULL &&

list_empty(&thread->todo);

Once Service Manager has been woken up, it enters the while loop and starts processing transactions. Here wait_for_proc_work is 1 and proc->todo is not empty:

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread->todo, struct binder_work,

entry);

// Fetch the first struct binder_work from proc->todo

} else if (!list_empty(&proc->todo) && wait_for_proc_work) {

w = list_first_entry(&proc->todo, struct binder_work,

entry);

} else {

/* no data added */

if (ptr - buffer == 4 &&

!(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

// Recover the binder_transaction that the write side queued

t = container_of(w, struct binder_transaction, work);

} break;

Execution continues:

if (t->buffer->target_node) {

struct binder_node *target_node = t->buffer->target_node;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

t->saved_priority = task_nice(current);

if (t->priority < target_node->min_priority &&

!(t->flags & TF_ONE_WAY))

binder_set_nice(t->priority);

else if (!(t->flags & TF_ONE_WAY) ||

t->saved_priority > target_node->min_priority)

binder_set_nice(target_node->min_priority);

// Set cmd to BR_TRANSACTION

cmd = BR_TRANSACTION;

} else {

// ...

}

// tr is the local struct binder_transaction_data; t is the struct binder_transaction

tr.code = t->code;

tr.flags = t->flags;

tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);

if (t->from) {

struct task_struct *sender = t->from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender,

task_active_pid_ns(current));

} else {

tr.sender_pid = 0;

}

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

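The address that t->buffer->data points to is in kernel space. The data now has to be handed to the user space of the Service Manager process, which cannot access kernel-space addresses directly, so some extra handling is needed here.

Binder uses something like a shallow copy: a virtual address is reserved in user space, and that user-space virtual address and the kernel-space virtual address t->buffer->data both refer to the same physical memory, so no second copy is needed.

How do the user-space and kernel-space virtual addresses come to refer to the same physical address? Adding the offset proc->user_buffer_offset to t->buffer->data yields the user-space virtual address that corresponds to t->buffer->data.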
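The address arithmetic can be illustrated with a small model. This is a sketch under assumptions, not driver code: `sk_mapping` and its functions are hypothetical, plain integers stand in for the two virtual address ranges, and the offset is kept as an unsigned value so that wraparound is well defined when the user range lies below the kernel range.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of one process's Binder buffer mapping. */
struct sk_mapping {
    uintptr_t kernel_base;        /* kernel virtual address of the buffer area */
    uintptr_t user_base;          /* where the same pages appear in user space */
    size_t    size;               /* length of the mapped area in bytes */
    uintptr_t user_buffer_offset; /* user_base - kernel_base, modulo 2^N */
};

/* Record both views of the area and precompute the constant offset. */
void sk_mapping_init(struct sk_mapping *m, uintptr_t kernel_base,
                     uintptr_t user_base, size_t size)
{
    m->kernel_base = kernel_base;
    m->user_base = user_base;
    m->size = size;
    m->user_buffer_offset = user_base - kernel_base;
}

/*
 * Translate a kernel-side address inside the mapped area into the address
 * the receiving process would use. Returns 0 if kaddr is outside the area.
 */
uintptr_t sk_kernel_to_user(const struct sk_mapping *m, uintptr_t kaddr)
{
    if (kaddr < m->kernel_base || kaddr - m->kernel_base >= m->size)
        return 0;
    return kaddr + m->user_buffer_offset;
}
```

Because the offset is a per-process constant, handing a buffer to the receiver costs one addition rather than a copy of the payload.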

// This is a crucial step, the essence of the Binder IPC mechanism:

tr.data.ptr.buffer = (binder_uintptr_t)(

(uintptr_t)t->buffer->data +

proc->user_buffer_offset);

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size,

sizeof(void *));

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

// Copy tr into the buffer supplied by the user; ptr points into that user buffer:

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

Then remove it from the todo list:

list_del(&t->work.entry);

t->buffer->allow_user_free = 1;

// The following condition holds here

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

} else {

t->buffer->transaction = NULL;

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

}

break;

}

2-3-2-1-2) copy_to_user copies bwr back into the buffer passed in by the user

After the binder_thread_write function from 2-3-2-1-1) has finished, the following runs:

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

This copies the local variable struct binder_write_read bwr back into the buffer passed in by the user.

-

The main thread of the servicemanager process loops forever inside the binder_loop function.

/frameworks/native/cmds/servicemanager/service_manager.c

int main(int argc, char** argv)

{

struct binder_state *bs;

union selinux_callback cb;

char *driver;

if (argc > 1) {

driver = argv[1];

} else {

driver = "/dev/binder";

}

bs = binder_open(driver, 128*1024);

// ...

// Run binder_loop

binder_loop(bs, svcmgr_handler);

return 0;

}

// Run binder_loop

/frameworks/native/cmds/servicemanager/binder.c

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// The thread blocks here, waiting for client requests

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

// Call binder_parse to parse the binder messages

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

}

// binder_parse parses the binder messages

All of the returned data is placed in readbuf.
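Before the full listing, here is a reduced sketch of how such a read buffer can be walked: each record is a 32-bit command, optionally followed by a fixed-size payload. The command values, `sk_txn` and the handler signature are hypothetical simplifications, not the real BR_ codes or binder_transaction_data.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command codes; the real BR_* values are ioctl-encoded. */
enum { SK_BR_NOOP = 1, SK_BR_TRANSACTION = 2 };

/* Reduced stand-in for the transaction payload that follows the command. */
struct sk_txn {
    uint32_t code;
    uint32_t data_size;
};

typedef void (*sk_handler)(const struct sk_txn *txn, void *ctx);

/* Example handler: remember the code of the last transaction seen. */
void sk_record_code(const struct sk_txn *txn, void *ctx)
{
    *(uint32_t *)ctx = txn->code;
}

/*
 * Walk a buffer of commands. memcpy is used for every read so that the
 * buffer does not need any particular alignment.
 * Returns the number of transactions dispatched, or -1 on a malformed stream.
 */
int sk_parse(const unsigned char *buf, size_t size, sk_handler func, void *ctx)
{
    size_t pos = 0;
    int handled = 0;

    while (pos < size) {
        uint32_t cmd;

        if (size - pos < sizeof(cmd))
            return -1; /* truncated command word */
        memcpy(&cmd, buf + pos, sizeof(cmd));
        pos += sizeof(cmd);

        switch (cmd) {
        case SK_BR_NOOP:
            break; /* carries no payload */
        case SK_BR_TRANSACTION: {
            struct sk_txn txn;

            if (size - pos < sizeof(txn))
                return -1; /* truncated payload */
            memcpy(&txn, buf + pos, sizeof(txn));
            pos += sizeof(txn);
            if (func)
                func(&txn, ctx);
            handled++;
            break;
        }
        default:
            return -1; /* unknown command */
        }
    }
    return handled;
}
```

The buffer the driver filled earlier has this shape: a leading no-op command, then a transaction command followed by its payload.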

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

#if TRACE

fprintf(stderr,"%s:\n", cmd_name(cmd));

#endif

switch(cmd) {

// ...

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION: {

struct binder_transaction_data_secctx txn;

if (cmd == BR_TRANSACTION_SEC_CTX) {

// ...

} else /* BR_TRANSACTION */ {

// Save the returned data into txn.transaction_data

memcpy(&txn.transaction_data, (void*) ptr, sizeof(struct binder_transaction_data));

ptr += sizeof(struct binder_transaction_data);

txn.secctx = 0;

}

binder_dump_txn(&txn.transaction_data);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

// 1) bio_init initializes the binder_io structure

bio_init(&reply, rdata, sizeof(rdata), 4);

// 2) bio_init_from_txn

bio_init_from_txn(&msg, &txn.transaction_data);

// 3) Invoke the callback svcmgr_handler

res = func(bs, &txn, &msg, &reply);

if (txn.transaction_data.flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn.transaction_data.data.ptr.buffer);

} else {

// 4) Reply to the binder driver

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

}

}

break;

}

1) bio_init initializes the reply structure [a binder_io structure]

bio_init(&reply, rdata, sizeof(rdata), 4);

void bio_init(struct binder_io *bio, void *data,

size_t maxdata, size_t maxoffs)

{

size_t n = maxoffs * sizeof(size_t);

if (n > maxdata) {

bio->flags = BIO_F_OVERFLOW;

bio->data_avail = 0;

bio->offs_avail = 0;

return;

}

bio->data = bio->data0 = (char *) data + n;

bio->offs = bio->offs0 = data;

bio->data_avail = maxdata - n;

bio->offs_avail = maxoffs;

bio->flags = 0;

}

2) bio_init_from_txn initializes the msg variable

bio_init_from_txn(&msg, &txn.transaction_data);

void bio_init_from_txn(struct binder_io *bio, struct binder_transaction_data *txn)

{

// data is the user-space address that maps the kernel buffer

bio->data = bio->data0 = (char *)(intptr_t)txn->data.ptr.buffer;

bio->offs = bio->offs0 = (binder_size_t *)(intptr_t)txn->data.ptr.offsets;

bio->data_avail = txn->data_size;

bio->offs_avail = txn->offsets_size / sizeof(size_t);

bio->flags = BIO_F_SHARED;

}

3) The callback svcmgr_handler

func(bs, &txn, &msg, &reply);

/frameworks/native/cmds/servicemanager/service_manager.c

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data_secctx *txn_secctx,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

uint32_t dumpsys_priority;

struct binder_transaction_data *txn = &txn_secctx->transaction_data;

// ...

strict_policy = bio_get_uint32(msg);

bio_get_uint32(msg); // Ignore worksource header.

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

switch(txn->code) {

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

handle = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

dumpsys_priority = bio_get_uint32(msg);

// Handle the service registration: do_add_service

if (do_add_service(bs, s, len, handle, txn->sender_euid, allow_isolated, dumpsys_priority,

txn->sender_pid, (const char*) txn_secctx->secctx))

return -1;

break;

// ...

// Write status code 0 into reply, meaning that everything succeeded:

bio_put_uint32(reply, 0);

return 0;

}

The parameters passed to the driver are as follows:

writeInt32(IPCThreadState::self()->getStrictModePolicy() | STRICT_MODE_PENALTY_GATHER);

writeString16("android.os.IServiceManager");

writeString16("media.player");

writeStrongBinder(new MediaPlayerService());

// Reads the strict-mode policy written by writeInt32 above

strict_policy = bio_get_uint32(msg);

bio_get_uint32(msg); // Ignore worksource header.

// This is "android.os.IServiceManager"

s = bio_get_string16(msg, &len);

case SVC_MGR_ADD_SERVICE:

// This is "media.player"

s = bio_get_string16(msg, &len);

// This is the handle that stands for new MediaPlayerService()

handle = bio_get_ref(msg);

bio_get_ref is implemented as:

uint32_t bio_get_ref(struct binder_io *bio)

{

struct flat_binder_object *obj;

obj = _bio_get_obj(bio);

if (!obj)

return 0;

if (obj->hdr.type == BINDER_TYPE_HANDLE)

return obj->handle;

return 0;

}

The structure is as follows:

struct flat_binder_object {

struct binder_object_header hdr;

__u32 flags;

union {

binder_uintptr_t binder;

__u32 handle;

};

binder_uintptr_t cookie;

};

// Handle the service registration: do_add_service

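do_add_service writes the reference to the MediaPlayerService Binder entity, mainly its name and handle value, into a struct svcinfo and inserts that struct at the head of the linked list svclist. When a Client later asks Service Manager for a service, it only has to supply the service name and Service Manager can return the corresponding handle value.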
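The registry described here is a singly linked list keyed by name. The sketch below shows registration and the later lookup together. It is a simplification under assumptions: all `sk_` names are hypothetical, names are plain `char` strings rather than UTF-16, and permission checks and death notifications are left out.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical, reduced version of a service record. */
struct sk_svcinfo {
    struct sk_svcinfo *next;
    uint32_t           handle;
    char               name[]; /* flexible array member holding the name */
};

/* Linear search by name; returns NULL when the service is unknown. */
struct sk_svcinfo *sk_find_svc(struct sk_svcinfo *list, const char *name)
{
    for (struct sk_svcinfo *si = list; si != NULL; si = si->next)
        if (strcmp(si->name, name) == 0)
            return si;
    return NULL;
}

/*
 * Register name -> handle. An existing entry is updated in place,
 * a new entry is pushed onto the head of the list.
 * Returns 0 on success, -1 on invalid input or allocation failure.
 */
int sk_add_service(struct sk_svcinfo **list, const char *name, uint32_t handle)
{
    struct sk_svcinfo *si;
    size_t len;

    if (handle == 0 || name[0] == '\0')
        return -1;
    si = sk_find_svc(*list, name);
    if (si != NULL) {
        si->handle = handle;
        return 0;
    }
    len = strlen(name);
    si = malloc(sizeof(*si) + len + 1);
    if (si == NULL)
        return -1;
    si->handle = handle;
    memcpy(si->name, name, len + 1); /* copies the terminating '\0' too */
    si->next = *list;
    *list = si;
    return 0;
}

/* What a client lookup boils down to: name in, handle out (0 if unknown). */
uint32_t sk_get_service(struct sk_svcinfo *list, const char *name)
{
    struct sk_svcinfo *si = sk_find_svc(list, name);

    return si != NULL ? si->handle : 0;
}

/* Release every record and reset the list to empty. */
void sk_free_all(struct sk_svcinfo **list)
{
    while (*list != NULL) {
        struct sk_svcinfo *next = (*list)->next;

        free(*list);
        *list = next;
    }
}
```

Registering "media.player" with handle 1 and then looking up the same name returns 1, while an unregistered name returns 0.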

// s is "media.player"

int do_add_service(struct binder_state *bs, const uint16_t *s, size_t len, uint32_t handle,

uid_t uid, int allow_isolated, uint32_t dumpsys_priority, pid_t spid, const char* sid) {

struct svcinfo *si;

// Check whether a service with this name is already registered

si = find_svc(s, len);

if (si) {

} else {

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

// Save the handle

si->handle = handle;

si->len = len;

// Save the name: "media.player"

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = (void*) svcinfo_death;

si->death.ptr = si;

si->allow_isolated = allow_isolated;

si->dumpsys_priority = dumpsys_priority;

si->next = svclist;

svclist = si;

}

binder_acquire(bs, handle);

binder_link_to_death(bs, handle, &si->death);

return 0;

}

4) Reply to the binder driver

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

This tells the Binder driver that the task it handed over has been completed.
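The reply is sent as two commands back to back in a single write, which is why the listing that follows uses a packed struct: without packing, a compiler may insert padding between a 32-bit command and a following 64-bit field, and a byte-stream reader would misinterpret that padding. The sketch below illustrates the layout only. The command values and `sk_txn_hdr` are hypothetical, and `__attribute__((packed))` is a GCC/Clang extension.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command values; the real BC_* codes are ioctl-encoded. */
enum { SK_BC_FREE_BUFFER = 0x10, SK_BC_REPLY = 0x20 };

/* Reduced stand-in for the transaction description that follows BC_REPLY. */
struct sk_txn_hdr {
    uint32_t code;
    uint32_t flags;
    uint64_t data_size;
};

/* Two commands laid out contiguously, with no padding between the fields. */
struct sk_reply_cmds {
    uint32_t          cmd_free;  /* "free this buffer" command */
    uint64_t          buffer;    /* argument of cmd_free */
    uint32_t          cmd_reply; /* "here is my reply" command */
    struct sk_txn_hdr txn;       /* argument of cmd_reply */
} __attribute__((packed));

/*
 * Serialize an empty, successful reply that also releases buffer_to_free.
 * Returns the number of bytes written, or 0 if out is too small.
 */
size_t sk_build_reply(unsigned char *out, size_t cap, uint64_t buffer_to_free)
{
    struct sk_reply_cmds data;

    if (cap < sizeof(data))
        return 0;
    memset(&data, 0, sizeof(data)); /* zero code, flags and data_size */
    data.cmd_free = SK_BC_FREE_BUFFER;
    data.buffer = buffer_to_free;
    data.cmd_reply = SK_BC_REPLY;
    memcpy(out, &data, sizeof(data));
    return sizeof(data);
}
```

With packing, the second command word sits at byte 12, immediately after the 4-byte command and its 8-byte argument.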

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

// Ask the driver to free the buffer

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

// ...

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

binder_write(bs, &data, sizeof(data));

}

2-3-2-2) Wait for the reply from the servicemanager process

/frameworks/native/libs/binder/IPCThreadState.cpp

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

// talkWithDriver, analyzed above, is called here

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

// ...

// Reply received from the servicemanager server side

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

// The following branch is executed

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);
