
K8s Deployment

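Shell tips: did you know?

< redirects standard input from a file

<< starts a here-document: input is read until a custom end marker, for example cat <<EOF

<<< is a here-string: it feeds a string to standard input, which is handy for simulating user interaction

> redirects standard output and overwrites the target file

>> redirects standard output and appends to the target file

-- marks the end of options

cat >1.txt <<yhl writes the lines that follow, up to the marker yhl, into 1.txt

tee reads from standard input and writes to both standard output and the named files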
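The operators above can be tried out safely in a scratch directory. The following is a minimal sketch; the file names 2.txt and -n and the sample strings are made up for the demonstration.

```shell
#!/usr/bin/env bash
# Try the redirection operators in a throwaway directory.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Here-document: everything up to the custom marker "yhl" goes into 1.txt.
cat >1.txt <<yhl
first line
second line
yhl

# >> appends, whereas > would have overwritten the file.
echo "third line" >>1.txt

# Here-string: feed a single string to a command's standard input.
answer=$(tr 'a-z' 'A-Z' <<<"yes")

# tee copies standard input to a file and to standard output at the same time.
echo "logged" | tee 2.txt >/dev/null

# -- ends option parsing, so a file named "-n" is read as a file, not as a flag.
echo "dash file" >./-n
dash_content=$(cat -- -n)
```

Without the -- in the last command, cat would interpret -n as its line-numbering option and wait for input instead of reading the file.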

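Kubernetes means "helmsman" or "pilot": the one who stands at the front and steers. It descends from Google's Borg system and was open-sourced in June 2014.

K8s is a container cluster management system that automates cluster deployment, scaling, and maintenance.

It manages large numbers of containers spread across hosts

It deploys applications quickly

It saves resources by making better use of hardware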

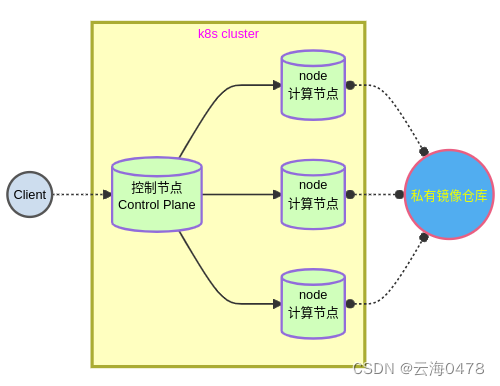

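Cluster diagram

Core architecture

master (management node), node (compute node), image (image registry)

Master functions

Provides control over the cluster

Makes global policy decisions for the cluster

Detects and responds to cluster events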

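Master core components

API Server: the external interface of the whole system, called by clients; port 6443

scheduler: schedules and allocates resources inside the cluster; port 10251 (newer releases serve on the secure port 10259 instead)

ControllerManager: runs the controllers, the "general manager" of the cluster; port 10252 (newer releases serve on the secure port 10257 instead)

etcd: key-value database; ports 2379 and 2380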

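Node functions

Provides the actual machines that run containers

Provides the runtime environment

Runs across multiple nodes

Scales horizontally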

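Node core components

Kubelet: manages the containers on its node; port 10250

kube-proxy

Runtime: the container runtime, which implements the CRI standard

The kubelet can only drive Docker when both use the same cgroup driver

Set it in /etc/docker/daemon.json with "exec-opts": ["native.cgroupdriver=systemd"]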
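The cgroup driver requirement above can be checked before joining a node. This is a minimal sketch, not an official tool: the function names are hypothetical, the fallback to cgroupfs assumes Docker's default on a cgroup v1 host, and the kubelet side assumes a KubeletConfiguration file such as the /var/lib/kubelet/config.yaml that kubeadm writes.

```shell
#!/usr/bin/env bash
# Extract the cgroup driver configured for Docker from a daemon.json file.
# Prints "cgroupfs" (assumed default) when no driver is configured.
docker_cgroup_driver() {
  local file=$1 driver
  driver=$(grep -o 'native\.cgroupdriver=[a-z]*' "$file" 2>/dev/null | head -n1 | cut -d= -f2)
  echo "${driver:-cgroupfs}"
}

# Extract the kubelet's cgroup driver from a KubeletConfiguration YAML file.
kubelet_cgroup_driver() {
  local file=$1
  sed -n 's/^cgroupDriver:[[:space:]]*//p' "$file" | head -n1
}

# Succeeds only when both sides agree.
cgroup_drivers_match() {
  [ "$(docker_cgroup_driver "$1")" = "$(kubelet_cgroup_driver "$2")" ]
}
```

A typical call would be cgroup_drivers_match /etc/docker/daemon.json /var/lib/kubelet/config.yaml && echo "drivers match".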

image (image registry)

Provides images for the containers on the nodes

Registry software: Registry or Harbor

Official site: https://kubernetes.io/

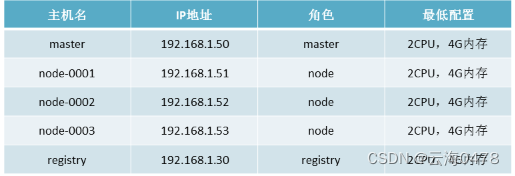

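Deployment requirements

Kernel version >= 3.10

At least 2 CPUs and 2 GB of memory

No two nodes may share a hostname, MAC address, or product_uuid

Remove the firewall packages (firewalld-*)

Disable swap and SELinux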
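The single-host requirements above can be sketched as a small preflight script. The function names are hypothetical, and the memory threshold of 1700000 kB is an assumption chosen because a "2 GB" machine reports slightly less than 2 GiB. Uniqueness of hostname, MAC address, and product_uuid has to be compared across nodes and is not covered here.

```shell
#!/usr/bin/env bash
# Succeeds when version $1 is greater than or equal to version $2.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Report each unmet requirement; return non-zero if any check fails.
preflight() {
  local failed=0 kernel cpus mem_kb
  kernel=$(uname -r | cut -d- -f1)
  cpus=$(nproc)
  mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)

  version_ge "$kernel" 3.10 || { echo "kernel $kernel is older than 3.10"; failed=1; }
  [ "$cpus" -ge 2 ]         || { echo "only $cpus CPU(s), need 2"; failed=1; }
  # Assumed threshold: a little under 2 GiB, expressed in kB.
  [ "$mem_kb" -ge 1700000 ] || { echo "only ${mem_kb} kB of memory, need about 2 GB"; failed=1; }
  # /proc/swaps has one header line; any further line means swap is active.
  [ "$(wc -l </proc/swaps)" -le 1 ] || { echo "swap is still enabled"; failed=1; }
  return "$failed"
}
```

Running preflight on each machine prints one line per unmet requirement and returns a non-zero status if anything is missing.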

Deployment

1. Initialize the private registry

[root@registry ~]# vim /etc/hosts

192.168.1.30 registry

[root@registry ~]# yum install -y docker-distribution

[root@registry ~]# systemctl enable --now docker-distribution

[root@registry ~]# curl -s http://registry:5000/v2/_catalog

{"repositories":[]}

2. Install kube-master

As an exercise, do the following yourself: disable SELinux, disable swap, and remove firewalld-*
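For readers who want a starting point for that exercise, the sketch below shows one common way to do it on a yum-based system. The function names are hypothetical, and prepare_host is only defined here, not executed; it needs root and changes the running system.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  local fstab=$1
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Set SELINUX=disabled in an SELinux config file.
disable_selinux_config() {
  local config=$1
  sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$config"
}

# Apply all three changes to the running system (run as root, on purpose only).
prepare_host() {
  swapoff -a
  comment_out_swap /etc/fstab
  setenforce 0 || true          # fails harmlessly if SELinux is already disabled
  disable_selinux_config /etc/selinux/config
  yum remove -y 'firewalld-*'
}
```

The two helper functions take the file path as an argument so they can be tried on a copy of /etc/fstab or /etc/selinux/config first.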

3. Install packages (master)

[root@master ~]# yum install -y kubeadm kubelet kubectl docker-ce

[root@master ~]# mkdir -p /etc/docker

[root@master ~]# vim /etc/docker/daemon.json

{
    "exec-opts":["native.cgroupdriver=systemd"],
    "registry-mirrors":["http://registry:5000"],
    "insecure-registries":["registry:5000","192.168.1.30:5000"]
}

[root@master ~]# vim /etc/hosts

192.168.1.30 registry

192.168.1.50 master

192.168.1.51 node-0001

192.168.1.52 node-0002

192.168.1.53 node-0003

[root@master ~]# systemctl enable --now docker kubelet

[root@master ~]# docker info |grep Cgroup

Cgroup Driver: systemd

Cgroup Version: 1

4. Import images into the private registry

[root@master ~]# docker load -i init/v1.22.5.tar.xz

[root@master ~]# docker images|while read i t _;do
    [[ "${t}" == "TAG" ]] && continue
    docker tag ${i}:${t} registry:5000/k8s/${i##*/}:${t}
    docker push registry:5000/k8s/${i##*/}:${t}
    docker rmi ${i}:${t} registry:5000/k8s/${i##*/}:${t}
done
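The loop above relies on the parameter expansion ${i##*/}, which strips everything up to the last slash so that only the image name remains. A small sketch of that mapping follows; the function name private_ref and the sample image references are illustrative assumptions, not taken from the tarball.

```shell
#!/usr/bin/env bash
# Map a source repository and tag to its name in the private registry.
# "${repo##*/}" removes the longest prefix ending in "/", keeping the image name.
private_ref() {
  local repo=$1 tag=$2 registry=${3:-registry:5000/k8s}
  echo "${registry}/${repo##*/}:${tag}"
}

private_ref k8s.gcr.io/coredns/coredns v1.8.4   # nested path collapses to "coredns"
private_ref k8s.gcr.io/kube-proxy v1.22.5
```

Because only the last path component is kept, two source images with the same name under different paths would end up at the same target, which is worth checking before pushing.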

# Verify

[root@master ~]# curl -s http://registry:5000/v2/_catalog|python -m json.tool
{
    "repositories": [
        "k8s/coredns",
        "k8s/etcd",
        "k8s/kube-apiserver",
        "k8s/kube-controller-manager",
        "k8s/kube-proxy",
        "k8s/kube-scheduler",
        "k8s/pause"
    ]
}

5. Set up Tab completion

[root@master ~]# source <(kubeadm completion bash|tee /etc/bash_completion.d/kubeadm)

[root@master ~]# source <(kubectl completion bash|tee /etc/bash_completion.d/kubectl)

6. Install the proxy packages

[root@master ~]# yum install -y ipvsadm ipset

7. Configure kernel parameters

[root@master ~]# for i in overlay br_netfilter;do
    modprobe ${i}
    echo "${i}" >>/etc/modules-load.d/containerd.conf
done

[root@master ~]# cat >/etc/sysctl.d/99-kubernetes-cri.conf<<EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

[root@master ~]# sysctl --system

8. Deploy with kubeadm
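The init commands in this step read init/kubeadm-init.yaml, which the article does not reproduce. As a rough sketch only, a file of that kind could look like the one written by the hypothetical helper below. Every value is an assumption inferred from other parts of this article (master address, private registry path, flannel subnet, systemd cgroup driver, IPVS packages); a real starting point can be generated with kubeadm config print init-defaults.

```shell
#!/usr/bin/env bash
# Write an illustrative kubeadm configuration to the given path.
# All values are assumptions inferred from the rest of this article.
write_kubeadm_config() {
  local out=$1
  cat >"$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.50    # master address from /etc/hosts
  bindPort: 6443
nodeRegistration:
  name: master
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: 1.22.5
imageRepository: registry:5000/k8s  # private registry filled in step 4
networking:
  podSubnet: 10.244.0.0/16          # matches the flannel "Network" setting
  serviceSubnet: 10.96.0.0/12       # kubeadm's default service range
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs                          # uses the ipvsadm/ipset packages from step 6
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd               # must match Docker's cgroup driver
EOF
}
```

Calling write_kubeadm_config /tmp/kubeadm-init.yaml writes the file to a scratch location so it can be compared against the real one before use.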

[root@master ~]# kubeadm init --config=init/kubeadm-init.yaml --dry-run

# Prints a lot of output; if there are no Error or Warning lines, everything is fine

[root@master ~]# rm -rf /etc/kubernetes/tmp

[root@master ~]# kubeadm init --config=init/kubeadm-init.yaml |tee init/init.log

# Run the commands suggested at the end of the output

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

9. Verify the installation

[root@master init]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.1.50:6443

CoreDNS is running at https://192.168.1.50:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@master init]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 90s v1.22.5

Installing the compute nodes

1. Get a token

# List tokens

[root@master ~]# kubeadm token list

TOKEN TTL EXPIRES

abcdef.0123456789abcdef 23h 2022-04-12T14:04:34Z

# Delete a token

[root@master ~]# kubeadm token delete abcdef.0123456789abcdef

bootstrap token "abcdef" deleted

# Create a token (--ttl=0 means it never expires)

[root@master ~]# kubeadm token create --ttl=0 --print-join-command

kubeadm join 192.168.1.50:6443 --token fhf6gk.bhhvsofvd672yd41 --discovery-token-ca-cert-hash sha256:ea07de5929dab8701c1bddc347155fe51c3fb6efd2ce8a4177f6dc03d5793467

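# Get the CA certificate hash (token_hash)

# Three ways: 1) read it from the install log, 2) read it from the output of the token creation, 3) compute it with openssl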
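For the third method, note that openssl dgst prints the digest with a label in front, while kubeadm join expects the form sha256:<hex>. The following is a minimal sketch of the conversion, wrapping the openssl pipeline that follows; the function names are hypothetical, and the -noout flag is an addition that only suppresses the certificate dump.

```shell
#!/usr/bin/env bash
# Turn one line of "openssl dgst -sha256 -hex" output into the sha256:<hex>
# form. The digest is whatever follows the final "= " on the line.
format_ca_hash() {
  local line=$1
  echo "sha256:${line##*= }"
}

# Compute the value for a given CA certificate (requires openssl).
ca_cert_hash() {
  local crt=${1:-/etc/kubernetes/pki/ca.crt}
  format_ca_hash "$(openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex)"
}
```

On the master, ca_cert_hash with no argument should print a value that can be pasted into --discovery-token-ca-cert-hash.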

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt |openssl rsa -pubin -outform der |openssl dgst -sha256 -hex

2. Install the nodes

Install with Ansible

- name: Join K8S cluster
  vars:
    master: "192.168.1.50:6443"
    token: "##### your token #####"
    token_hash: "sha256:##### your token CA hash #####"
  hosts: nodes
  tasks:
    - name: install k8s node packages
      yum:
        name: kubeadm,kubelet,ipvsadm,ipset,nfs-utils,docker-ce
        state: latest
        update_cache: yes
    - name: create a directory if it does not exist
      file:
        path: /etc/docker
        state: directory
        mode: '0755'
    - name: create daemon.json
      copy:
        dest: /etc/docker/daemon.json
        owner: root
        group: root
        mode: '0644'
        content: |
          {
              "exec-opts":["native.cgroupdriver=systemd"],
              "registry-mirrors":["http://192.168.1.30:5000","http://registry:5000","https://hub-mirror.c.163.com"],
              "insecure-registries":["192.168.1.30:5000","registry:5000"]
          }
    - name: create containerd.conf
      copy:
        dest: /etc/modules-load.d/containerd.conf
        owner: root
        group: root
        mode: '0644'
        content: |
          overlay
          br_netfilter
    - name: Add the overlay,br_netfilter module
      modprobe:
        name: "{{ item }}"
        state: present
      loop:
        - overlay
        - br_netfilter
    - name: create 99-kubernetes-cri.conf
      sysctl:
        name: "{{ item }}"
        value: "1"
        sysctl_set: yes
        sysctl_file: /etc/sysctl.d/99-kubernetes-cri.conf
      loop:
        - net.ipv4.ip_forward
        - net.bridge.bridge-nf-call-iptables
        - net.bridge.bridge-nf-call-ip6tables
    - name: set /etc/hosts
      copy:
        dest: /etc/hosts
        owner: root
        group: root
        mode: '0644'
        content: |
          ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
          127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
          192.168.1.30 registry
          192.168.1.50 master
          {% for i in groups.all %}
          {{ hostvars[i].ansible_eth0.ipv4.address }} {{ hostvars[i].ansible_hostname }}
          {% endfor %}
    - name: enable k8s kubelet,runtime service
      service:
        name: "{{ item }}"
        state: started
        enabled: yes
      loop:
        - docker
        - kubelet
    - name: check node state
      stat:
        path: /etc/kubernetes/kubelet.conf
      register: result
    - name: node join cluster
      shell: |
        kubeadm join '{{ master }}' --token '{{ token }}' --discovery-token-ca-cert-hash '{{ token_hash }}'
      args:
        executable: /bin/bash
      when: result.stat.exists == False
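The last two tasks make the join idempotent: the playbook checks for /etc/kubernetes/kubelet.conf and only runs kubeadm join when that file is absent. The same guard can be sketched in plain shell for a node that is set up by hand; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Succeeds when the node still needs to join, i.e. the kubelet has no
# kubeconfig yet. This mirrors the playbook's stat check on kubelet.conf.
needs_join() {
  local kubelet_conf=${1:-/etc/kubernetes/kubelet.conf}
  [ ! -e "$kubelet_conf" ]
}

# Join only once. The three arguments correspond to the playbook's
# master, token, and token_hash variables.
join_once() {
  local master=$1 token=$2 token_hash=$3
  if needs_join; then
    kubeadm join "$master" --token "$token" --discovery-token-ca-cert-hash "$token_hash"
  else
    echo "already joined, skipping"
  fi
}
```

Guarding on the file rather than on the exit status of kubeadm join means the script can be rerun safely after a partial rollout.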

3. Verify the installation

# Check node status

[root@master flannel]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 26h v1.22.5

node-0001 Ready <none> 151m v1.22.5

node-0002 Ready <none> 152m v1.22.5

node-0003 Ready <none> 153m v1.22.5

# Check pod status

[root@master ~]# kubectl -n kube-system get pods

flannel plugin

Edit the manifest and install it

# Use the repository path the flannel images were pushed to (the excerpt below shows registry:5000/k8s/)

[root@master flannel]# sed -E 's,^(\s+image: ).+/(.+),\1registry:5000/plugins/\2,' -i kube-flannel.yml

Relevant lines of kube-flannel.yml (line number: content)

128: "Network": "10.244.0.0/16",

169: image: registry:5000/k8s/mirrored-flannelcni-flannel-cni-plugin:v1.0.0

180: image: registry:5000/k8s/mirrored-flannelcni-flannel:v0.16.1

194: image: registry:5000/k8s/mirrored-flannelcni-flannel:v0.16.1
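The sed expression uses two capture groups: the first keeps the indentation and the image: key, the second keeps the image name and tag after the last slash, and everything in between is replaced by the private registry path. A minimal sketch that applies the same substitution to standard input follows; the function name retarget_images and the sample input lines are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Rewrite the registry part of every "image:" line read from standard input.
# \1 keeps the indentation and the "image: " key, \2 keeps the name and tag.
retarget_images() {
  local prefix=${1:-registry:5000/plugins}
  sed -E "s,^([[:space:]]+image: ).+/(.+),\1${prefix}/\2,"
}

# Only the image line changes; other lines pass through untouched.
retarget_images <<'EOF'
        image: rancher/mirrored-flannelcni-flannel:v0.16.1
      "Network": "10.244.0.0/16",
EOF
```

Passing a different prefix, for example retarget_images registry:5000/k8s, makes it easy to preview the result for whichever repository path holds the flannel images before editing the file in place.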

[root@master flannel]# kubectl apply -f kube-flannel.yml

Verify the result

# Check node status

[root@master flannel]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 26h v1.22.5

node-0001 Ready <none> 151m v1.22.5

node-0002 Ready <none> 152m v1.22.5

node-0003 Ready <none> 153m v1.22.5

# Check pod status

[root@master ~]# kubectl -n kube-system get pods

NAME READY STATUS RESTARTS AGE
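The node check above can also be automated. The sketch below reads kubectl get nodes output and lists every node whose STATUS column is not exactly Ready; the function name is hypothetical, and it assumes the default column layout shown in this article.

```shell
#!/usr/bin/env bash
# Read "kubectl get nodes" output on standard input and print the names of
# nodes whose STATUS column is not exactly "Ready".
# Returns 0 when every node is Ready, 1 otherwise.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad + 0 }'
}
```

A typical call would be kubectl get nodes | not_ready_nodes && echo "all nodes Ready". Nodes in states such as Ready,SchedulingDisabled are reported as well, since the comparison is exact.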
