
HDR Image Conversion with OpenCV and Source Code Analysis

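Most images we encounter use 8 bits per channel, so each channel value lies in [0, 255]; this is called standard dynamic range (SDR). SDR offers only a limited set of values. Representing a wider brightness range and more vivid color takes 10 bits or even 12 bits per channel, which is called high dynamic range (HDR). OpenCV provides methods for building an HDR image from a set of SDR exposures, and the reverse conversion is done through tone mapping.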
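To make the bit-depth figures concrete: the largest code value an n-bit channel can hold is 2^n - 1. A minimal sketch follows; the helper name `maxCodeValue` is made up for illustration and is not part of OpenCV.

```cpp
#include <cassert>
#include <cstdint>

// Largest integer code value of an unsigned channel that is `bits` bits wide.
// Hypothetical helper for illustration only; not an OpenCV function.
std::uint32_t maxCodeValue(unsigned bits)
{
    assert(bits >= 1 && bits <= 31);
    return (std::uint32_t{1} << bits) - 1u;
}
```

maxCodeValue(8) is 255, maxCodeValue(10) is 1023 and maxCodeValue(12) is 4095, so a 10-bit channel has four times as many steps as an 8-bit one.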

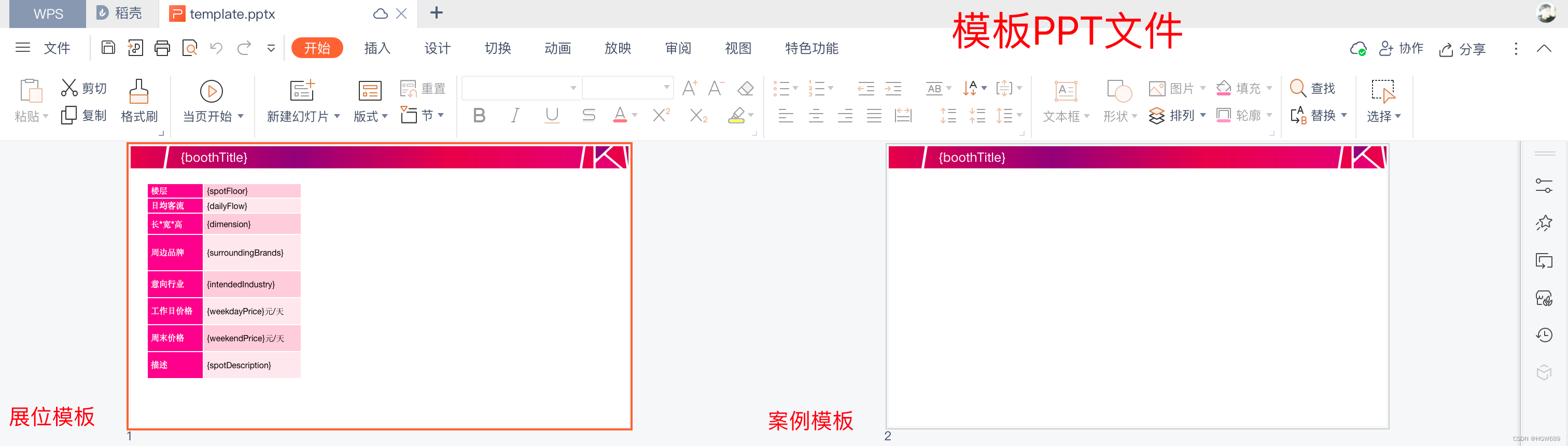

我们先看下SDR与HDR图像的对比,如下图所示:

I. Core functions

OpenCV's photo module provides conversion between SDR and HDR, as well as exposure fusion.

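1. SDR to HDR

The HDR algorithm needs the camera response function (CRF). Sample code for computing the CRF:

    vector<Mat> image;    // exposure sequence: 8-bit images of the same scene
    Mat response;         // output: the estimated camera response function
    vector<float> times;  // exposure time of each image
    Ptr<CalibrateDebevec> calibrate = createCalibrateDebevec();
    calibrate->process(image, response, times);

Once the CRF is available, MergeDebevec merges the exposures into an HDR image. C++ code:

    Mat hdr;
    Ptr<MergeDebevec> merge_debevec = createMergeDebevec();
    merge_debevec->process(image, hdr, times, response);

Java version:

    Mat hdr = new Mat();
    MergeDebevec mergeDebevec = Photo.createMergeDebevec();
    // overload without a response argument
    mergeDebevec.process(image, hdr, matTime);

Python version:

    merge_debevec = cv.createMergeDebevec()
    hdr = merge_debevec.process(image, time, response)

2. HDR to SDR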

HDR is converted back to SDR with the Tonemap class, where 2.2 is the gamma correction value. C++ code:

    Mat sdr;
    float gamma = 2.2f;
    Ptr<Tonemap> tonemap = createTonemap(gamma);
    // sdr is 32-bit float in [0, 1]; multiply by 255 before saving as 8-bit
    tonemap->process(hdr, sdr);

Java version:

    Mat ldr = new Mat();
    Tonemap tonemap = Photo.createTonemap(2.2f);
    tonemap.process(hdr, ldr);

Python version:

    tonemap = cv.createTonemap(2.2)
    ldr = tonemap.process(hdr)

3. Exposure fusion

In OpenCV, MergeMertens performs exposure fusion. C++ code:

    Mat exposure;
    Ptr<MergeMertens> merge_mertens = createMergeMertens();
    merge_mertens->process(image, exposure);

Java version:

    Mat exposure = new Mat();
    MergeMertens mergeMertens = Photo.createMergeMertens();
    mergeMertens.process(image, exposure);

Python version:

    merge_mertens = cv.createMergeMertens()
    exposure = merge_mertens.process(image)

II. Implementation

1. SDR to HDR source code

The source for HDR merging is in opencv/modules/photo/src/merge.cpp. First, createMergeDebevec() uses makePtr to wrap the implementation in a smart pointer:

    Ptr<MergeDebevec> createMergeDebevec()
    {
        return makePtr<MergeDebevecImpl>();
    }

The core logic is in the process() method of MergeDebevecImpl. For every pixel it averages log(response) minus log(exposure time) over the exposures, using a per-value weight, and then exponentiates the result. MergeDebevecImpl derives from MergeDebevec, which ultimately derives from the abstract Algorithm class; those interfaces are declared in photo.hpp and are listed after the implementation. The implementation reads:

    class MergeDebevecImpl CV_FINAL : public MergeDebevec
    {
    public:
        MergeDebevecImpl() :
            name("MergeDebevec"),
            weights(triangleWeights())
        {}

        void process(InputArrayOfArrays src, OutputArray dst, InputArray _times, InputArray input_response) CV_OVERRIDE
        {
            CV_INSTRUMENT_REGION();

            std::vector<Mat> images;
            src.getMatVector(images);
            Mat times = _times.getMat();

            CV_Assert(images.size() == times.total());
            checkImageDimensions(images);
            CV_Assert(images[0].depth() == CV_8U);

            int channels = images[0].channels();
            Size size = images[0].size();
            int CV_32FCC = CV_MAKETYPE(CV_32F, channels);

            dst.create(images[0].size(), CV_32FCC);
            Mat result = dst.getMat();

            Mat response = input_response.getMat();

            // no response given: fall back to a linear response
            if(response.empty()) {
                response = linearResponse(channels);
                response.at<Vec3f>(0) = response.at<Vec3f>(1);
            }

            Mat log_response;
            log(response, log_response);
            CV_Assert(log_response.rows == LDR_SIZE && log_response.cols == 1 &&
                      log_response.channels() == channels);

            // log of the exposure times
            Mat exp_values(times.clone());
            log(exp_values, exp_values);

            result = Mat::zeros(size, CV_32FCC);
            std::vector<Mat> result_split;
            split(result, result_split);
            Mat weight_sum = Mat::zeros(size, CV_32F);

            // weighted average over the exposures
            for(size_t i = 0; i < images.size(); i++) {
                std::vector<Mat> splitted;
                split(images[i], splitted);

                Mat w = Mat::zeros(size, CV_32F);
                for(int c = 0; c < channels; c++) {
                    LUT(splitted[c], weights, splitted[c]);
                    w += splitted[c];
                }
                w /= channels;

                Mat response_img;
                LUT(images[i], log_response, response_img);
                split(response_img, splitted);
                for(int c = 0; c < channels; c++) {
                    result_split[c] += w.mul(splitted[c] - exp_values.at<float>((int)i));
                }
                weight_sum += w;
            }
            weight_sum = 1.0f / weight_sum;
            for(int c = 0; c < channels; c++) {
                result_split[c] = result_split[c].mul(weight_sum);
            }
            // merge the channels back into one image
            merge(result_split, result);
            // exponentiate to leave the log domain
            exp(result, result);
        }

    protected:
        String name;
        Mat weights;
    };
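The arithmetic inside process() above may be easier to follow for a single pixel of a single channel. The sketch below is not OpenCV code. It uses only the standard library, and the names `hatWeight`, `linearResponseSketch` and `mergePixel` are made up for illustration. Two things are assumptions, because the article does not show the helpers: that triangleWeights() yields a hat-shaped weight, and that linearResponse() maps code value v to v. With one channel, the `w /= channels` averaging step has no effect and is omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hat-shaped weight over 8-bit values: small near 0 and 255, largest mid-range.
// Assumption: stands in for triangleWeights(), which the article does not show.
float hatWeight(std::uint8_t v)
{
    return v < 128 ? v + 1.0f : 256.0f - v;
}

// Linear response with entry 0 replaced by entry 1, so that log() stays finite.
// Assumption: linearResponse() maps code value v to v.
std::vector<float> linearResponseSketch()
{
    std::vector<float> response(256);
    for (int v = 0; v < 256; ++v) {
        response[v] = static_cast<float>(v);
    }
    response[0] = response[1];
    return response;
}

// Radiance estimate for one pixel of one channel.
// values[i]   : 8-bit sample of the pixel in exposure i
// times[i]    : exposure time of exposure i (must be > 0)
// response[v] : camera response for code value v (256 entries, all > 0)
float mergePixel(const std::vector<std::uint8_t>& values,
                 const std::vector<float>& times,
                 const std::vector<float>& response)
{
    assert(!values.empty() && values.size() == times.size());
    assert(response.size() == 256);

    float weightedLogSum = 0.0f;
    float weightSum = 0.0f;
    for (std::size_t i = 0; i < values.size(); ++i) {
        const float w = hatWeight(values[i]);
        // log(radiance) = log(response(value)) - log(exposure time)
        weightedLogSum += w * (std::log(response[values[i]]) - std::log(times[i]));
        weightSum += w;
    }
    // Leave the log domain, like the final exp() call in process().
    return std::exp(weightedLogSum / weightSum);
}
```

For example, with the linear response a pixel that reads 100 at exposure time 1 and 200 at exposure time 2 gives the same log radiance in both exposures, so `mergePixel({100, 200}, {1.0f, 2.0f}, linearResponseSketch())` comes out at about 100.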

    // From photo.hpp: the interfaces that MergeDebevecImpl implements
    class CV_EXPORTS_W MergeExposures : public Algorithm
    {
    public:
        CV_WRAP virtual void process(InputArrayOfArrays src, OutputArray dst,
                                     InputArray times, InputArray response) = 0;
    };

    class CV_EXPORTS_W MergeDebevec : public MergeExposures
    {
    public:
        CV_WRAP virtual void process(InputArrayOfArrays src, OutputArray dst,
                                     InputArray times, InputArray response) CV_OVERRIDE = 0;
        CV_WRAP virtual void process(InputArrayOfArrays src, OutputArray dst, InputArray times) = 0;
    };

2. HDR to SDR source code

As mentioned earlier, HDR is converted to SDR through tone mapping. The code is in tonemap.cpp in the photo module. The entry point is createTonemap(), which likewise wraps the implementation in a smart pointer:

    Ptr<Tonemap> createTonemap(float gamma)
    {
        return makePtr<TonemapImpl>(gamma);
    }

Next, the core code of TonemapImpl:

    class TonemapImpl CV_FINAL : public Tonemap
    {
    public:
        TonemapImpl(float _gamma) : name("Tonemap"), gamma(_gamma)
        {}

        void process(InputArray _src, OutputArray _dst) CV_OVERRIDE
        {
            Mat src = _src.getMat();
            Mat dst = _dst.getMat();

            double min, max;
            // find the minimum and maximum pixel values
            minMaxLoc(src, &min, &max);
            if(max - min > DBL_EPSILON) {
                dst = (src - min) / (max - min);
            } else {
                src.copyTo(dst);
            }
            // raise to the power 1/gamma
            pow(dst, 1.0f / gamma, dst);
        }
        ......
    protected:
        String name;
        float gamma;
    };

OpenCV also provides the Drago, Reinhard and Mantiuk tone mapping algorithms; interested readers can study their source code.
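The basic TonemapImpl::process() shown above boils down to a min-max normalisation followed by a power function. The sketch below mirrors that arithmetic on a plain vector of floats. It is a standard-library illustration under that reading, not OpenCV code, and the name `tonemapGamma` is made up here.

```cpp
#include <algorithm>
#include <cassert>
#include <cfloat>
#include <cmath>
#include <vector>

// Min-max normalise `hdr` to [0, 1], then apply value^(1/gamma).
// Hypothetical stand-alone helper; not an OpenCV function.
std::vector<float> tonemapGamma(const std::vector<float>& hdr, float gamma)
{
    assert(!hdr.empty() && gamma > 0.0f);
    const double lo = *std::min_element(hdr.begin(), hdr.end());
    const double hi = *std::max_element(hdr.begin(), hdr.end());

    std::vector<float> out(hdr);
    if (hi - lo > DBL_EPSILON) {
        for (float& v : out) {
            v = static_cast<float>((v - lo) / (hi - lo));
        }
    }
    // A flat input skips the normalisation, like the else branch above.
    for (float& v : out) {
        v = std::pow(v, 1.0f / gamma);
    }
    return out;
}
```

With gamma 2, the input {0, 1, 4} is first normalised to {0, 0.25, 1} and then mapped to {0, 0.5, 1}: values between the extremes are brightened while the end points stay fixed. Multiplying the result by 255 gives 8-bit values.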

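3. Exposure fusion source code

The exposure fusion source is also in merge.cpp. The entry point is createMergeMertens(), which again wraps the implementation in a smart pointer:

    Ptr<MergeMertens> createMergeMertens(float wcon, float wsat, float wexp)
    {
        return makePtr<MergeMertensImpl>(wcon, wsat, wexp);
    }

The core source is in the MergeMertensImpl class: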

    class MergeMertensImpl CV_FINAL : public MergeMertens
    {
    public:
        MergeMertensImpl(float _wcon, float _wsat, float _wexp) :
            name("MergeMertens"),
            wcon(_wcon),
            wsat(_wsat),
            wexp(_wexp)
        {}

        void process(InputArrayOfArrays src, OutputArray dst) CV_OVERRIDE
        {
            ......
            parallel_for_(Range(0, static_cast<int>(images.size())), [&](const Range& range) {
                for(int i = range.start; i < range.end; i++) {
                    Mat img, gray, contrast, saturation, wellexp;
                    std::vector<Mat> splitted(channels);

                    images[i].convertTo(img, CV_32F, 1.0f/255.0f);
                    if(channels == 3) {
                        cvtColor(img, gray, COLOR_RGB2GRAY);
                    } else {
                        img.copyTo(gray);
                    }
                    images[i] = img;
                    // split the channels
                    split(img, splitted);

                    // contrast: absolute value of the Laplacian of the gray image
                    Laplacian(gray, contrast, CV_32F);
                    contrast = abs(contrast);

                    // per-pixel mean over the channels
                    Mat mean = Mat::zeros(size, CV_32F);
                    for(int c = 0; c < channels; c++) {
                        mean += splitted[c];
                    }
                    mean /= channels;

                    // saturation: deviation of the channels from their mean
                    saturation = Mat::zeros(size, CV_32F);
                    for(int c = 0; c < channels; c++) {
                        Mat deviation = splitted[c] - mean;
                        pow(deviation, 2.0f, deviation);
                        saturation += deviation;
                    }
                    sqrt(saturation, saturation);

                    // well-exposedness: Gaussian around 0.5, multiplied over the channels
                    wellexp = Mat::ones(size, CV_32F);
                    for(int c = 0; c < channels; c++) {
                        Mat expo = splitted[c] - 0.5f;
                        pow(expo, 2.0f, expo);
                        expo = -expo / 0.08f;
                        exp(expo, expo);
                        wellexp = wellexp.mul(expo);
                    }

                    pow(contrast, wcon, contrast);
                    pow(saturation, wsat, saturation);
                    pow(wellexp, wexp, wellexp);

                    weights[i] = contrast;
                    if(channels == 3) {
                        weights[i] = weights[i].mul(saturation);
                    }
                    weights[i] = weights[i].mul(wellexp) + 1e-12f;

                    AutoLock lock(weight_sum_mutex);
                    weight_sum += weights[i];
                }
            });

            int maxlevel = static_cast<int>(logf(static_cast<float>(min(size.width, size.height))) / logf(2.0f));
            std::vector<Mat> res_pyr(maxlevel + 1);
            std::vector<Mutex> res_pyr_mutexes(maxlevel + 1);

            parallel_for_(Range(0, static_cast<int>(images.size())), [&](const Range& range) {
                for(int i = range.start; i < range.end; i++) {
                    weights[i] /= weight_sum;

                    std::vector<Mat> img_pyr, weight_pyr;
                    // build pyramids for the image and for its weight map
                    buildPyramid(images[i], img_pyr, maxlevel);
                    buildPyramid(weights[i], weight_pyr, maxlevel);

                    // turn the image pyramid into a Laplacian pyramid
                    for(int lvl = 0; lvl < maxlevel; lvl++) {
                        Mat up;
                        pyrUp(img_pyr[lvl + 1], up, img_pyr[lvl].size());
                        img_pyr[lvl] -= up;
                    }
                    for(int lvl = 0; lvl <= maxlevel; lvl++) {
                        std::vector<Mat> splitted(channels);
                        // split the channels, then multiply each by the weight
                        split(img_pyr[lvl], splitted);
                        for(int c = 0; c < channels; c++) {
                            splitted[c] = splitted[c].mul(weight_pyr[lvl]);
                        }
                        // merge the channels back into one image
                        merge(splitted, img_pyr[lvl]);

                        // accumulate this exposure into the result pyramid
                        AutoLock lock(res_pyr_mutexes[lvl]);
                        if(res_pyr[lvl].empty()) {
                            res_pyr[lvl] = img_pyr[lvl];
                        } else {
                            res_pyr[lvl] += img_pyr[lvl];
                        }
                    }
                }
            });

            // collapse the result pyramid from the coarsest level down
            for(int lvl = maxlevel; lvl > 0; lvl--) {
                Mat up;
                pyrUp(res_pyr[lvl], up, res_pyr[lvl - 1].size());
                res_pyr[lvl - 1] += up;
            }
            dst.create(size, CV_32FCC);
            res_pyr[0].copyTo(dst);
        }
        ......
    protected:
        String name;
        float wcon, wsat, wexp;
    };
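The per-pixel weights computed in the first parallel_for_ loop, and the pyramid depth, can be checked by hand with scalar arithmetic. The sketch below is a standard-library illustration rather than OpenCV code. All function names are made up here, and the contrast value is taken as an input because computing a Laplacian needs neighbouring pixels. The constant 0.08 equals 2 * 0.2 * 0.2, so the well-exposedness term is a Gaussian with sigma 0.2 centred on 0.5.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Saturation: square root of the summed squared deviations of the channels
// from their mean (same arithmetic as the saturation loop in process()).
float saturation(const std::vector<float>& px)
{
    assert(!px.empty());
    float mean = 0.0f;
    for (float v : px) mean += v;
    mean /= static_cast<float>(px.size());

    float sum = 0.0f;
    for (float v : px) sum += (v - mean) * (v - mean);
    return std::sqrt(sum);
}

// Well-exposedness: product over the channels of exp(-(v - 0.5)^2 / 0.08).
float wellExposedness(const std::vector<float>& px)
{
    float w = 1.0f;
    for (float v : px) w *= std::exp(-(v - 0.5f) * (v - 0.5f) / 0.08f);
    return w;
}

// Combined weight of one pixel with channel values in [0, 1].
// `contrast` is the absolute Laplacian response at this pixel (an input here).
float mertensWeight(float contrast, const std::vector<float>& px,
                    float wcon, float wsat, float wexp)
{
    float w = std::pow(contrast, wcon);
    if (px.size() == 3) {
        w *= std::pow(saturation(px), wsat);
    }
    // The small constant keeps the later division by weight_sum well defined.
    return w * std::pow(wellExposedness(px), wexp) + 1e-12f;
}

// Index of the coarsest pyramid level: floor(log2(min(width, height))),
// evaluated in float like the original expression.
int maxPyramidLevel(int width, int height)
{
    assert(width > 0 && height > 0);
    const float shortSide = static_cast<float>(std::min(width, height));
    return static_cast<int>(std::log(shortSide) / std::log(2.0f));
}
```

A mid-gray pixel {0.5, 0.5, 0.5} has well-exposedness 1 and saturation 0, while a pure red pixel {1, 0, 0} has saturation of roughly 0.82. For a 640x480 image, maxPyramidLevel returns 8, so the pyramids have 9 levels.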
