您现在的位置是:首页 >学无止境 >Opencv项目实战:基于dlib的疲劳检测网站首页学无止境

Opencv项目实战:基于dlib的疲劳检测

简介Opencv项目实战:基于dlib的疲劳检测

文章目录

一、项目简介

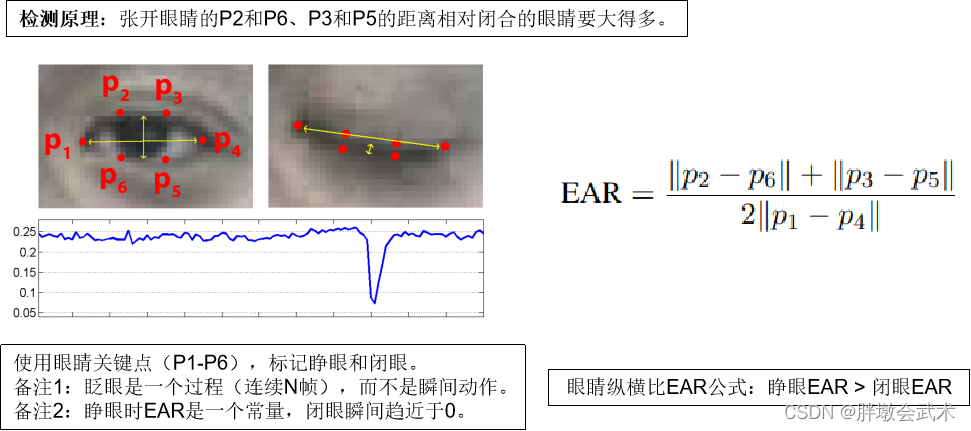

本项目基于dlib库提供的人脸检测器、关键点定位工具以及眼睛纵横比算法完成。通过分析摄像头或视频流中的人脸,实时计算眼睛纵横比EAR(Eye Aspect Ratio),以判断眼睛是否闭合。通过统计眨眼次数,可以检测出眨眼的频率和时长,用于评估用户的注意力水平或疲劳状态。

二、算法原理

论文地址:https://vision.fe.uni-lj.si/cvww2016/proceedings/papers/05.pdf

- 由于眨眼动作是一个过程,而不是一个帧图像就能瞬间完成。故设置

连续帧数的阈值(=3),即连续三帧图像计算得到的EAR值都小于EAR的阈值(=3),则表示眨眼一次。- 眨眼检测与疲劳检测的区别就是连续帧数的阈值设置,原理相同!

三、环境配置

dlib库在计算机视觉和人工智能领域有广泛的应用,包括人脸识别、人脸表情分析、人脸关键点检测、物体检测和追踪等任务。它的简单易用性、高性能和丰富的功能使其成为研究人员和开发者的首选库之一。

- dlib是一个开源的C++机器学习和计算机视觉库,提供了人脸检测、关键点定位、人脸识别等功能,以及支持向量机和其他机器学习算法的实现,具有高性能和跨平台支持。

dlib工具的python API下载地址:http://dlib.net/python/- dlib库包的介绍与使用:opencv+dlib人脸检测 + 人脸68关键点检测 + 人脸识别 + 人脸特征聚类 + 目标跟踪

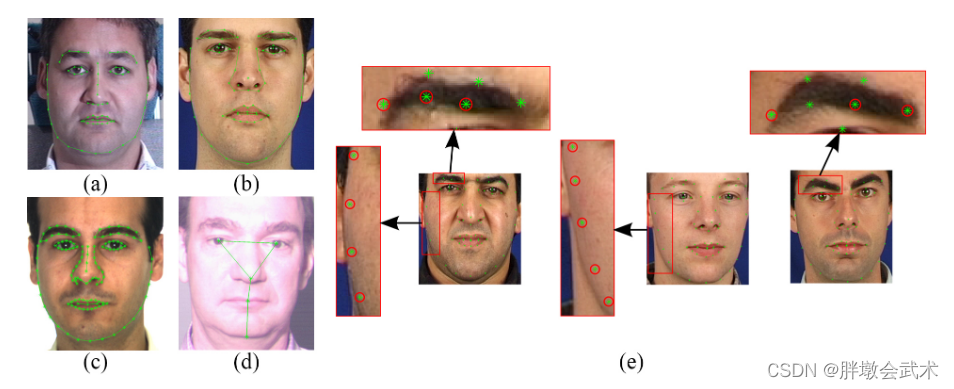

3.1、dlib人脸检测器:dlib.get_frontal_face_detector()

dlib官方详细说明:dlib.get_frontal_face_detector()

3.2、dlib关键点定位工具:shape_predictor_68_face_landmarks.dat

dlib官方预训练工具的下载地址:http://dlib.net/files/

(1)5个关键点检测:shape_predictor_5_face_landmarks.dat。五个点分别为:左右眼 + 鼻子 + 左右嘴角

(2)68个关键点检测:shape_predictor_68_face_landmarks.dat

脸部关键点注释详细请看:https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/

四、项目实战(加载视频)

- 【参数配置】方式一:Pycharm + Terminal + 输入指令自动检测:

python detect_blinks.py --shape-predictor shape_predictor_68_face_landmarks.dat --video test.mp4- 【参数配置】方式二:Pycharm + 点击Edit Configuration,输入配置参数

--shape-predictor shape_predictor_68_face_landmarks.dat --video test.mp4,点击Run开始检测。

# 导入所需库

from scipy.spatial import distance as dist

from collections import OrderedDict

import numpy as np

import argparse

import time

import dlib

import cv2

# 定义脸部关键点索引

FACIAL_LANDMARKS_68_IDXS = OrderedDict([

("mouth", (48, 68)),

("right_eyebrow", (17, 22)),

("left_eyebrow", (22, 27)),

("right_eye", (36, 42)),

("left_eye", (42, 48)),

("nose", (27, 36)),

("jaw", (0, 17))

])

# 计算眼睛纵横比函数

def eye_aspect_ratio(eye):

# 计算垂直距离

A = dist.euclidean(eye[1], eye[5])

B = dist.euclidean(eye[2], eye[4])

# 计算水平距离

C = dist.euclidean(eye[0], eye[3])

# 计算眼睛纵横比EAR

ear = (A + B) / (2.0 * C)

return ear

# 解析命令行参数

ap = argparse.ArgumentParser()

ap.add_argument("-p", "--shape-predictor", required=True, help="面部地标预测器路径")

ap.add_argument("-v", "--video", type=str, default="", help="输入视频文件路径")

args = vars(ap.parse_args())

# 设置EAR阈值和连续帧数

EYE_AR_THRESH = 0.3

EYE_AR_CONSEC_FRAMES = 3

# 初始化计数器

COUNTER = 0 # 计算连续帧数3

TOTAL = 0 # 若连续帧数==3,则总眨眼次数+1

# 加载面部地标预测器

print("[INFO] 正在加载面部地标预测器...")

detector = dlib.get_frontal_face_detector()

predictor = dlib.shape_predictor(args["shape_predictor"])

# 分别获取左眼和右眼坐标索引

(lStart, lEnd) = FACIAL_LANDMARKS_68_IDXS["left_eye"]

(rStart, rEnd) = FACIAL_LANDMARKS_68_IDXS["right_eye"]

# 读取视频

print("[INFO] 开始视频流...")

vs = cv2.VideoCapture(args["video"])

time.sleep(1.0)

# 将shape对象转换为numpy数组

def shape_to_np(shape, dtype="int"):

# 创建一个dtype类型的空ndarray用于存储68个关键点的坐标

coords = np.zeros((shape.num_parts, 2), dtype=dtype)

# 遍历每个关键点,提取坐标并存储到ndarray中

for i in range(0, shape.num_parts):

coords[i] = (shape.part(i).x, shape.part(i).y)

return coords

# 不断循环处理每一帧图像

while True:

# 预处理

frame = vs.read()[1]

if frame is None:

break

# 调整图像大小

(h, w) = frame.shape[:2]

width = 1200 # 脸部大小会影响检测器的识别,太小可能会识别不到

r = width / float(w)

dim = (width, int(h * r))

frame = cv2.resize(frame, dim, interpolation=cv2.INTER_AREA)

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

# 人脸检测

rects = detector(gray, 0)

# 遍历检测到的每个人脸

for rect in rects:

# 获取关键点坐标

shape = predictor(gray, rect)

shape = shape_to_np(shape)

# 提取左眼和右眼区域坐标

leftEye = shape[lStart:lEnd]

rightEye = shape[rStart:rEnd]

# 计算左右眼纵横比EAR

leftEAR = eye_aspect_ratio(leftEye)

rightEAR = eye_aspect_ratio(rightEye)

# 计算平均纵横比

ear = (leftEAR + rightEAR) / 2.0

# 绘制眼睛区域轮廓(凸包)

leftEyeHull = cv2.convexHull(leftEye)

rightEyeHull = cv2.convexHull(rightEye)

cv2.drawContours(frame, [leftEyeHull], -1, (0, 255, 0), 1)

cv2.drawContours(frame, [rightEyeHull], -1, (0, 255, 0), 1)

# 检查是否满足EAR阈值

if ear < EYE_AR_THRESH:

COUNTER += 1

else:

# 如果连续几帧都是闭眼的,增加总数

if COUNTER >= EYE_AR_CONSEC_FRAMES:

TOTAL += 1

# 重置计数器

COUNTER = 0

# 在图像中,显示眨眼次数和纵横比

cv2.putText(frame, "Blinks: {}".format(TOTAL), (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

cv2.putText(frame, "EAR: {:.2f}".format(ear), (300, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

# 显示当前帧图像

cv2.imshow("Frame", frame)

key = cv2.waitKey(10) & 0xFF

# 按下Esc键退出循环

if key == 27:

break

vs.release() # 释放视频流

cv2.destroyAllWindows() # 关闭所有窗口

五、项目实战(摄像头获取帧图像)

- 【参数配置】方式一:Pycharm + Terminal + 输入指令自动检测:

python detect_blinks.py --shape-predictor shape_predictor_68_face_landmarks.dat- 【参数配置】方式二:Pycharm + 点击Edit Configuration,输入配置参数

--shape-predictor shape_predictor_68_face_landmarks.dat,点击Run开始检测。

# 导入所需库

from scipy.spatial import distance as dist

from collections import OrderedDict

import numpy as np

import argparse

import time

import dlib

import cv2

# 定义脸部关键点索引

FACIAL_LANDMARKS_68_IDXS = OrderedDict([

("mouth", (48, 68)),

("right_eyebrow", (17, 22)),

("left_eyebrow", (22, 27)),

("right_eye", (36, 42)),

("left_eye", (42, 48)),

("nose", (27, 36)),

("jaw", (0, 17))

])

# 计算眼睛纵横比函数

def eye_aspect_ratio(eye):

# 计算垂直距离

A = dist.euclidean(eye[1], eye[5])

B = dist.euclidean(eye[2], eye[4])

# 计算水平距离

C = dist.euclidean(eye[0], eye[3])

# 计算眼睛纵横比EAR

ear = (A + B) / (2.0 * C)

return ear

# 解析命令行参数

ap = argparse.ArgumentParser()

ap.add_argument("-p", "--shape-predictor", required=True, help="面部地标预测器路径")

args = vars(ap.parse_args())

# 设置EAR阈值和连续帧数

EYE_AR_THRESH = 0.3

EYE_AR_CONSEC_FRAMES = 3

# 初始化计数器

COUNTER = 0 # 计算连续帧数3

TOTAL = 0 # 若连续帧数==3,则总眨眼次数+1

# 加载面部地标预测器

print("[INFO] 正在加载面部地标预测器...")

detector = dlib.get_frontal_face_detector()

predictor = dlib.shape_predictor(args["shape_predictor"])

# 分别获取左眼和右眼坐标索引

(lStart, lEnd) = FACIAL_LANDMARKS_68_IDXS["left_eye"]

(rStart, rEnd) = FACIAL_LANDMARKS_68_IDXS["right_eye"]

# 读取视频

print("[INFO] 开始视频流...")

vs = cv2.VideoCapture(0)

time.sleep(1.0)

# 将shape对象转换为numpy数组

def shape_to_np(shape, dtype="int"):

# 创建一个dtype类型的空ndarray用于存储68个关键点的坐标

coords = np.zeros((shape.num_parts, 2), dtype=dtype)

# 遍历每个关键点,提取坐标并存储到ndarray中

for i in range(0, shape.num_parts):

coords[i] = (shape.part(i).x, shape.part(i).y)

return coords

# 不断循环处理每一帧图像

while True:

# 预处理

ret, frame = vs.read()

if not ret:

break

# 调整图像大小

(h, w) = frame.shape[:2]

width = 1200 # 脸部大小会影响检测器的识别,太小可能会识别不到

r = width / float(w)

dim = (width, int(h * r))

frame = cv2.resize(frame, dim, interpolation=cv2.INTER_AREA)

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

# 人脸检测

rects = detector(gray, 0)

# 遍历检测到的每个人脸

for rect in rects:

# 获取关键点坐标

shape = predictor(gray, rect)

shape = shape_to_np(shape)

# 提取左眼和右眼区域坐标

leftEye = shape[lStart:lEnd]

rightEye = shape[rStart:rEnd]

# 计算左右眼纵横比EAR

leftEAR = eye_aspect_ratio(leftEye)

rightEAR = eye_aspect_ratio(rightEye)

# 计算平均纵横比

ear = (leftEAR + rightEAR) / 2.0

# 绘制眼睛区域轮廓(凸包)

leftEyeHull = cv2.convexHull(leftEye)

rightEyeHull = cv2.convexHull(rightEye)

cv2.drawContours(frame, [leftEyeHull], -1, (0, 255, 0), 1)

cv2.drawContours(frame, [rightEyeHull], -1, (0, 255, 0), 1)

# 检查是否满足EAR阈值

if ear < EYE_AR_THRESH:

COUNTER += 1

else:

# 如果连续几帧都是闭眼的,增加总数

if COUNTER >= EYE_AR_CONSEC_FRAMES:

TOTAL += 1

# 重置计数器

COUNTER = 0

# 在图像中,显示眨眼次数和纵横比

cv2.putText(frame, "Blinks: {}".format(TOTAL), (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

cv2.putText(frame, "EAR: {:.2f}".format(ear), (300, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

# 显示当前帧图像

cv2.imshow("Frame", frame)

key = cv2.waitKey(1) & 0xFF

# 按下Esc键退出循环

if key == 27:

break

vs.release() # 释放视频流

cv2.destroyAllWindows() # 关闭所有窗口

风语者!平时喜欢研究各种技术,目前在从事后端开发工作,热爱生活、热爱工作。

U8W/U8W-Mini使用与常见问题解决

U8W/U8W-Mini使用与常见问题解决 QT多线程的5种用法,通过使用线程解决UI主界面的耗时操作代码,防止界面卡死。...

QT多线程的5种用法,通过使用线程解决UI主界面的耗时操作代码,防止界面卡死。... stm32使用HAL库配置串口中断收发数据(保姆级教程)

stm32使用HAL库配置串口中断收发数据(保姆级教程) 分享几个国内免费的ChatGPT镜像网址(亲测有效)

分享几个国内免费的ChatGPT镜像网址(亲测有效) Allegro16.6差分等长设置及走线总结

Allegro16.6差分等长设置及走线总结