
PyTriton: Serving Triton Inference Server from Python

Environment

Server Docker (23.04 image): sudo docker run -it --shm-size 8gb --rm --gpus=all -p 8126:8000 -v ${PWD}:/test nvcr.io/nvidia/pytorch:23.04-py3 bash

Client Docker (23.04 image): sudo docker run -it --shm-size 8gb --rm --gpus=all -v ${PWD}:/test nvcr.io/nvidia/pytorch:23.04-py3 bash

Server Docker (23.02 image): sudo docker run -it --shm-size 8gb --rm --gpus=all -p 8126:8000 -v ${PWD}:/test nvcr.io/nvidia/pytorch:23.02-py3 bash

Client Docker (23.02 image): sudo docker run -it --shm-size 8gb --rm --gpus=all -v ${PWD}:/test nvcr.io/nvidia/pytorch:23.02-py3 bash

Install PyTriton:

pip install -U nvidia-pytriton -i https://mirrors.aliyun.com/pypi/simple/

Official documentation and test examples

- quick_start: https://triton-inference-server.github.io/pytriton/0.1.5/quick_start/

- wget https://github.com/triton-inference-server/pytriton/archive/refs/heads/main.zip

linear_random_pytorch

client

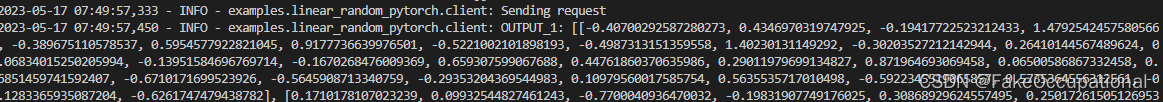

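- client.infer_batch() sends a request to the model on the server. Its argument names are defined in the server's triton.bind() call (the declared shapes leave the batch dimension implicit).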

import argparse
import logging

import torch  # pytype: disable=import-error

from pytriton.client import ModelClient


def main():
    parser = argparse.ArgumentParser(description=__doc__)
    parser.add_argument(
        "--url",
        default="localhost",
        help=(
            "Url to Triton server (ex. grpc://localhost:8001). "
            "HTTP protocol with default port is used if parameter is not provided"
        ),
        required=False,
    )
    args = parser.parse_args()

    logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(name)s: %(message)s")
    logger = logging.getLogger("examples.linear_random_pytorch.client")

    # One batch of 128 samples with 20 features each, matching Linear(20, 30) on the server.
    input1_batch = torch.randn(128, 20).cpu().detach().numpy()
    logger.info(f"Input: {input1_batch.tolist()}")

    with ModelClient(args.url, "Linear") as client:
        logger.info("Sending request")
        result_dict = client.infer_batch(input1_batch)

    for output_name, output_batch in result_dict.items():
        logger.info(f"{output_name}: {output_batch.tolist()}")


if __name__ == "__main__":
    main()

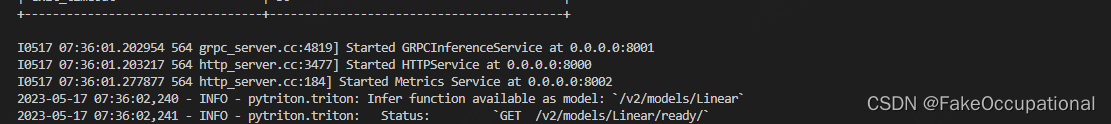

server

import logging

import numpy as np
import torch  # pytype: disable=import-error

from pytriton.decorators import batch
from pytriton.model_config import ModelConfig, Tensor
from pytriton.triton import Triton

DEVICE = "cuda" if torch.cuda.is_available() else "cpu"
MODEL = torch.nn.Linear(20, 30).to(DEVICE).eval()


# Inference function
@batch
def _infer_fn(**inputs):
    (input1_batch,) = inputs.values()
    input1_batch_tensor = torch.from_numpy(input1_batch).to(DEVICE)
    output1_batch_tensor = MODEL(input1_batch_tensor)
    output1_batch = output1_batch_tensor.cpu().detach().numpy()
    return [output1_batch]


logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(name)s: %(message)s")
logger = logging.getLogger("examples.linear_random_pytorch.server")

# Use bind() to connect the model to the Triton Inference Server
with Triton() as triton:
    logger.info("Loading Linear model.")
    triton.bind(
        model_name="Linear",
        infer_func=_infer_fn,
        inputs=[
            Tensor(dtype=np.float32, shape=(-1,)),
        ],
        outputs=[
            Tensor(dtype=np.float32, shape=(-1,)),
        ],
        config=ModelConfig(max_batch_size=128),
    )
    logger.info("Serving models")
    triton.serve()
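The bind() call above declares each tensor as shape=(-1,) with max_batch_size=128, while the client sends an array of shape (128, 20). The sketch below uses only the standard library to illustrate how those two fit together, assuming the usual Triton convention that declared shapes exclude the batch dimension and -1 marks a variable-length dimension. The function check_batch is a hypothetical helper for illustration, not part of PyTriton.

```python
def check_batch(batch_shape, sample_shape=(-1,), max_batch_size=128):
    """Return True if batch_shape is acceptable for a model declared with sample_shape.

    Hypothetical helper: sample_shape is the per-sample shape passed to Tensor(...),
    so the request must carry exactly one extra leading (batch) dimension.
    """
    if len(batch_shape) != len(sample_shape) + 1:
        return False
    batch, *dims = batch_shape
    if not 1 <= batch <= max_batch_size:
        return False
    # -1 in the declared shape accepts any size for that dimension.
    return all(d == -1 or d == actual for d, actual in zip(sample_shape, dims))


print(check_batch((128, 20)))  # the client's request: batch of 128, 20 features
print(check_batch((129, 20)))  # one sample more than max_batch_size
```

Under these assumptions the client's (128, 20) request sits exactly at the configured batch limit, so a larger batch would have to be split before sending.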

cg

https://github.com/grimoire/amirstan_plugin

https://conan.io/

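Estimating depth with ONNX models and custom layers using NVIDIA TensorRT

Use gs (ONNX GraphSurgeon) to replace the combination of Reshape, Shape, Unsqueeze, Mul, Add, and InstanceNormalization nodes with GroupNormalizationPlugin (GN)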

https://developer.nvidia.com/blog/estimating-depth-beyond-2d-using-custom-layers-on-tensorrt-and-onnx-models/

https://stackoverflow.com/questions/71220867/pytorch-to-onnx-export-aten-operators-not-supported-onnxruntime-hangs-out

https://paulbridger.com/posts/tensorrt-object-detection-quantized/

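TensorRT Tutorial 8: Creating a network from scratch with the Python API (important) https://blog.csdn.net/weixin_41562691/article/details/118278140

TensorRT Tutorial 18: Using DLA (Deep Learning Accelerator) https://blog.csdn.net/weixin_41562691/article/details/119085054

Matrix multiplication example https://www.cnblogs.com/scut-fm/p/3756242.html

How fast can a hand-written CUDA matrix multiplication be made? https://www.zhihu.com/question/41060378

[CUDA matrix library] Introduction to cuBLAS https://zhuanlan.zhihu.com/p/438551588

The Galaxy's Guide to CUDA Programming (1): a simple matrix multiplication with the cuBLAS library https://zhuanlan.zhihu.com/p/427262454

What is the purpose of returning a reference in C++? https://www.zhihu.com/question/353263548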

https://www.jianshu.com/p/da73f381f8bc

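Starting with TensorRT 7.1, you no longer need to write a dedicated ONNX importer for each plugin. You can use REGISTER_TENSORRT_PLUGIN to statically register the plugin creator with the plugin registry. In this example, REGISTER_TENSORRT_PLUGIN(GroupNormalizationPluginCreator) registers the GN plugin.

The name of the plugin layer in the ONNX graph should match the name returned by the getPluginName function of the GroupNormalizationPluginCreator class. During parsing, TensorRT identifies the layer as a plugin by its name.

In this example, the layer name is GroupNormalizationPlugin.

The attributes set on the custom layer in ONNX must match the plugin attributes of the GroupNormalizationPluginCreator class. In this plugin, the attributes are eps and num_groups.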
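The two matching rules above (layer name and attribute names) can be checked before handing the graph to the parser. The following is a minimal sketch that models an ONNX node as a plain dictionary. The dictionary layout and the helper plugin_node_problems are assumptions made for illustration, and a real graph would be inspected through an ONNX library instead.

```python
# Names taken from the text above: the op name the plugin creator reports
# and the attribute names the plugin expects.
EXPECTED_OP = "GroupNormalizationPlugin"
EXPECTED_ATTRS = {"eps", "num_groups"}


def plugin_node_problems(node):
    """Return a list of mismatches between a node dict and the GN plugin's expectations."""
    problems = []
    if node["op"] != EXPECTED_OP:
        problems.append(f"op is {node['op']!r}, expected {EXPECTED_OP!r}")
    present = set(node["attrs"])
    for name in sorted(EXPECTED_ATTRS - present):
        problems.append(f"missing attribute {name!r}")
    for name in sorted(present - EXPECTED_ATTRS):
        problems.append(f"unexpected attribute {name!r}")
    return problems


good = {"op": "GroupNormalizationPlugin", "attrs": {"eps": 1e-5, "num_groups": 32}}
bad = {"op": "GroupNorm", "attrs": {"epsilon": 1e-5, "num_groups": 32}}
print(plugin_node_problems(good))
print(plugin_node_problems(bad))
```

The attribute values (1e-5 and 32) are placeholders. Only the names are taken from the text.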

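The call

network_from_onnx_path(onnx_path, flags=[trt.OnnxParserFlag.NATIVE_INSTANCENORM]),

fails with:

AttributeError: module 'tensorrt' has no attribute 'OnnxParserFlag'

The installed tensorrt Python package does not provide OnnxParserFlag. Installing version 8.6.1 resolves this:

pip install tensorrt==8.6.1 -i https://mirrors.aliyun.com/pypi/simple/

- If you run into problems during some of the conversion steps, consider switching to the 23.02 image and then changing its tensorrt version manually with the same pip command.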

Problems in the 23.04 environment

Error when loading a TensorRT engine

Loading TensorRT engine: engine/clip.plan

[W] 'colored' module is not installed, will not use colors when logging. To enable colors, please install the 'colored' module: python3 -m pip install colored

[I] Loading bytes from engine/clip.plan

[E] 6: The engine plan file is not compatible with this version of TensorRT, expecting library version 8.6.1.2 got 8.6.1.6, please rebuild.

[E] 2: [engine.cpp::deserializeEngine::951] Error Code 2: Internal Error (Assertion engine->deserialize(start, size, allocator, runtime) failed. )

[!] Could not deserialize engine. See log for details.
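The "[E] 6" line in the log above carries both version strings involved in the mismatch. As a small sketch using only the standard library, the snippet below pulls them out of such a line and checks whether they differ only in the fourth (build) component. The helper names are hypothetical, and the regular expression is written against the exact wording in this log, which may differ in other TensorRT releases.

```python
import re

# Pattern matched against the wording of the log line shown above.
_MISMATCH = re.compile(r"expecting library version (\d+(?:\.\d+)*) got (\d+(?:\.\d+)*)")


def parse_version_mismatch(line):
    """Return (expected, got) version strings from the log line, or None if absent."""
    match = _MISMATCH.search(line)
    if match is None:
        return None
    return match.group(1), match.group(2)


def differs_only_in_build(a, b):
    """True if the two versions share major.minor.patch but are not identical."""
    return a != b and a.split(".")[:3] == b.split(".")[:3]


line = ("[E] 6: The engine plan file is not compatible with this version of TensorRT, "
        "expecting library version 8.6.1.2 got 8.6.1.6, please rebuild.")
expected, got = parse_version_mismatch(line)
print(expected, got, differs_only_in_build(expected, got))
```

Here the two versions agree on 8.6.1 and differ only in the build number, yet the log shows deserialization still failing, so the plan has to be rebuilt with the same TensorRT build that loads it.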

- The dpkg -l command lists every package installed on the system. Piping it through grep filters the output down to the packages you care about.

root@407e1e76c8b1:/test/hzt_trt/TensorRT-release-8.6/demo/Diffusion# dpkg -l | grep nvinfer

ii libnvinfer-bin 8.6.1.2-1+cuda12.0 amd64 TensorRT binaries

ii libnvinfer-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT development libraries

ii libnvinfer-dispatch-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT development dispatch runtime libraries

ii libnvinfer-dispatch8 8.6.1.2-1+cuda12.0 amd64 TensorRT dispatch runtime library

ii libnvinfer-headers-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT development headers

ii libnvinfer-headers-plugin-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT plugin headers

ii libnvinfer-lean-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT lean runtime libraries

ii libnvinfer-lean8 8.6.1.2-1+cuda12.0 amd64 TensorRT lean runtime library

ii libnvinfer-plugin-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT plugin libraries

ii libnvinfer-plugin8 8.6.1.2-1+cuda12.0 amd64 TensorRT plugin libraries

ii libnvinfer-vc-plugin-dev 8.6.1.2-1+cuda12.0 amd64 TensorRT vc-plugin library

ii libnvinfer-vc-plugin8 8.6.1.2-1+cuda12.0 amd64 TensorRT vc-plugin library

ii libnvinfer8 8.6.1.2-1+cuda12.0 amd64 TensorRT runtime libraries

Error when exporting an operator to ONNX

- Exporting the operator 'aten::scaled_dot_product_attention' to ONNX opset version 17 is not supported. Please feel free to request support or submit a pull request on PyTorch GitHub: https://github.com/pytorch/pytorch/issues.

cudaError

- https://docs.nvidia.com/cuda/cuda-runtime-api/group__CUDART__TYPES.html#group__CUDART__TYPES_1g3f51e3575c2178246db0a94a430e0038

Customizing SD (Stable Diffusion)

https://github1s.com/NVIDIA/TensorRT/blob/release/8.6/demo/Diffusion/models.py#L71-L97

def get_path(version, inpaint=False):
    if version == "1.4":
        if inpaint:
            return "runwayml/stable-diffusion-inpainting"
        else:
            return "CompVis/stable-diffusion-v1-4"
    elif version == "1.5":
        if inpaint:
            return "runwayml/stable-diffusion-inpainting"
        else:
            return "runwayml/stable-diffusion-v1-5"
    elif version == "2.0-base":
        if inpaint:
            return "stabilityai/stable-diffusion-2-inpainting"
        else:
            return "stabilityai/stable-diffusion-2-base"
    elif version == "2.0":
        if inpaint:
            return "stabilityai/stable-diffusion-2-inpainting"
        else:
            return "stabilityai/stable-diffusion-2"
    elif version == "2.1":
        return "stabilityai/stable-diffusion-2-1"
    elif version == "2.1-base":
        return "stabilityai/stable-diffusion-2-1-base"
    else:
        raise ValueError(f"Incorrect version {version}")


def get_embedding_dim(version):
    if version in ("1.4", "1.5"):
        return 768
    elif version in ("2.0", "2.0-base", "2.1", "2.1-base"):
        return 1024
    else:
        raise ValueError(f"Incorrect version {version}")

https://github1s.com/NVIDIA/TensorRT/blob/release/8.6/demo/Diffusion/demo_txt2img.py#L67-L77

# Initialize demo
demo = Txt2ImgPipeline(
    scheduler=args.scheduler,
    denoising_steps=args.denoising_steps,
    output_dir=args.output_dir,
    # For a customized model, specify the version here. get_path later maps it to the
    # path that is passed to from_pretrained
    # (https://github1s.com/NVIDIA/TensorRT/blob/release/8.6/demo/Diffusion/models.py#L279-L282).
    version=args.version,
    hf_token=args.hf_token,
    verbose=args.verbose,
    nvtx_profile=args.nvtx_profile,
    max_batch_size=max_batch_size,
    use_cuda_graph=args.use_cuda_graph)
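Since the version string drives both the model path and the embedding dimension, supporting a customized model means teaching both lookups about a new version. One possible way to do that is sketched below with a table-driven variant. This is not how the demo's models.py is written. The function names, the "custom-1.5" key, and the local path are all hypothetical, and only the non-inpainting entries are copied over.

```python
# Non-inpainting entries copied from the get_path / get_embedding_dim branches above.
MODEL_PATHS = {
    "1.4": "CompVis/stable-diffusion-v1-4",
    "1.5": "runwayml/stable-diffusion-v1-5",
    "2.0-base": "stabilityai/stable-diffusion-2-base",
    "2.0": "stabilityai/stable-diffusion-2",
    "2.1": "stabilityai/stable-diffusion-2-1",
    "2.1-base": "stabilityai/stable-diffusion-2-1-base",
}
EMBEDDING_DIMS = {
    "1.4": 768, "1.5": 768,
    "2.0-base": 1024, "2.0": 1024, "2.1": 1024, "2.1-base": 1024,
}


def register_version(version, path, embedding_dim):
    """Add a custom version so both lookups stay in sync (hypothetical helper)."""
    MODEL_PATHS[version] = path
    EMBEDDING_DIMS[version] = embedding_dim


def lookup(version):
    """Return (path, embedding_dim), raising the same error type as the original code."""
    try:
        return MODEL_PATHS[version], EMBEDDING_DIMS[version]
    except KeyError:
        raise ValueError(f"Incorrect version {version}") from None


# Hypothetical fine-tuned SD 1.5 checkpoint stored under the mounted /test volume.
register_version("custom-1.5", "/test/models/my-finetuned-sd15", 768)
print(lookup("custom-1.5"))
```

A fine-tune of a 1.x model would keep the 768 embedding dimension and one based on 2.x would use 1024, following the mapping in get_embedding_dim.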

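Used when building the ONNX model:

def get_dynamic_axes(self):
    return {
        'latent': {0: 'B', 2: 'H', 3: 'W'},
        'images': {0: 'B', 2: '8H', 3: '8W'}
    }

Used when building the engine (the optimization profile sets three values per input: min, opt, and max):

def get_input_profile(self, batch_size, image_height, image_width, static_batch, static_shape):

Profile settings affect inference speed: in one experiment, lowering the max batch size from 16 to 4 reduced inference time to one third of the original.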
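A TensorRT optimization profile gives each dynamic input a minimum, an optimum, and a maximum shape. To make the max-batch experiment concrete, here is a standalone sketch of how such a triple could be derived for the latent input. It is not the demo's get_input_profile. The function name, the channel count of 4, and the 256/1024 image-size bounds are assumptions for illustration, and the factor of 8 between image and latent size follows the '8H'/'8W' axes in get_dynamic_axes.

```python
def latent_profile(batch_size, image_height, image_width, static_batch, static_shape,
                   max_batch=16, min_image=256, max_image=1024):
    """Return {"latent": [min_shape, opt_shape, max_shape]} for a (B, 4, H, W) input."""
    latent_h, latent_w = image_height // 8, image_width // 8

    # With a static batch, all three shapes use the requested batch size.
    min_b = batch_size if static_batch else 1
    max_b = batch_size if static_batch else max_batch

    # With a static shape, all three shapes use the requested resolution.
    if static_shape:
        min_h, max_h = latent_h, latent_h
        min_w, max_w = latent_w, latent_w
    else:
        min_h = min_w = min_image // 8
        max_h = max_w = max_image // 8

    return {
        "latent": [
            (min_b, 4, min_h, min_w),         # min
            (batch_size, 4, latent_h, latent_w),  # opt
            (max_b, 4, max_h, max_w),         # max
        ]
    }


print(latent_profile(1, 512, 512, static_batch=False, static_shape=False, max_batch=16))
print(latent_profile(1, 512, 512, static_batch=False, static_shape=False, max_batch=4))
```

Lowering max_batch from 16 to 4 shrinks only the max shape, which narrows the range the engine has to be built for. That is the setting changed in the experiment described above.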

When the engine model runs __loadResources, get_shape_dict is called:

def get_shape_dict(self, batch_size, image_height, image_width):

DS

- (preres,curindex,)
