
Resource Quotas (ResourceQuota) & Resource Limits (LimitRange)

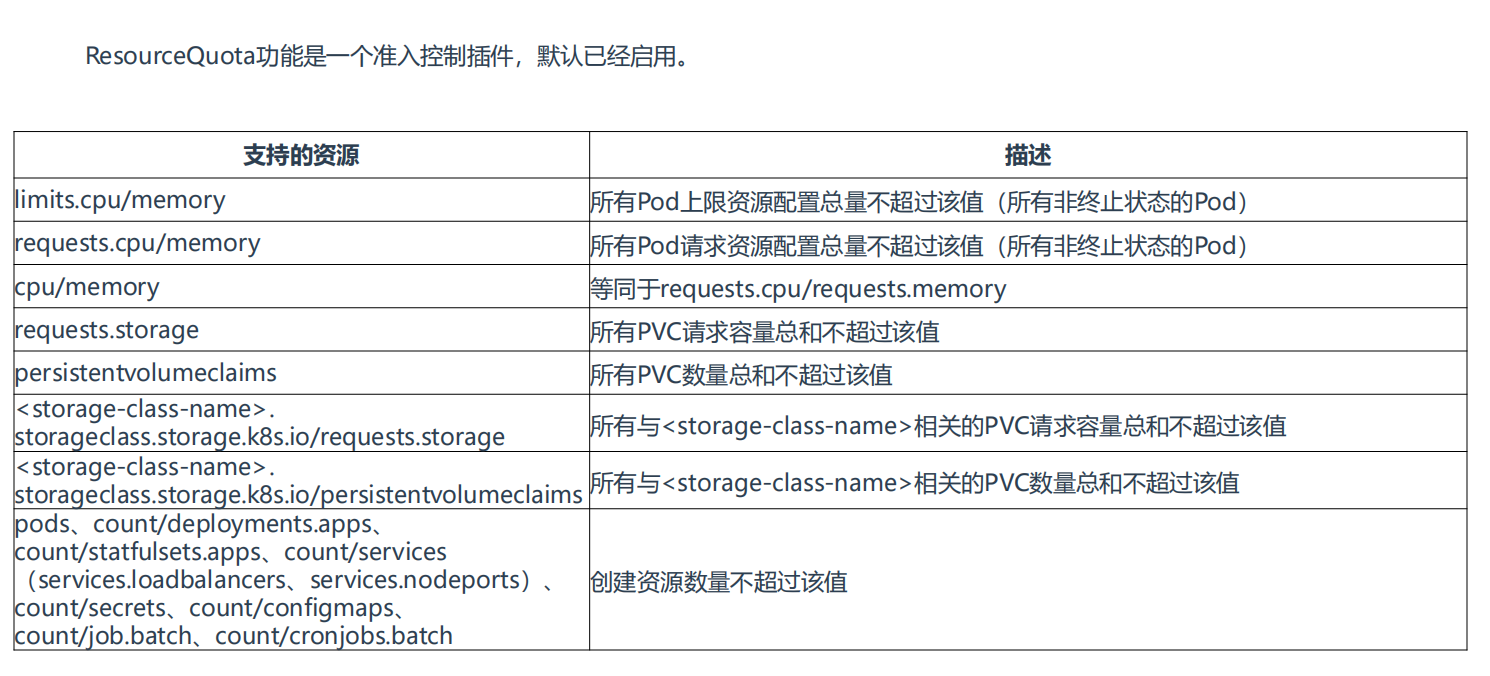

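ResourceQuota

ResourceQuota: caps the total resources a namespace may consume.

When several teams or users share one K8s cluster, resource usage tends to become uneven, because by default resources go to whoever claims them first. A ResourceQuota limits the total resource usage of a namespace and so addresses this problem.

Workflow: the K8s administrator creates one or more ResourceQuota objects per namespace to define the total resources allowed. K8s tracks resource usage in the namespace and rejects any request that would exceed the defined quota.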

Hands-on: ResourceQuota, 2023.5.25 (tested successfully)

- Lab environment

1. Windows 10 with VMware Workstation virtual machines;

2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes

k8s version: v1.20.0

docker://20.10.7

- Lab files

Link: https://pan.baidu.com/s/1-NzdrpktfaUOAl6QO_hqsA?pwd=0820

Extraction code: 0820

2023.5.25-ResourceQuota-code

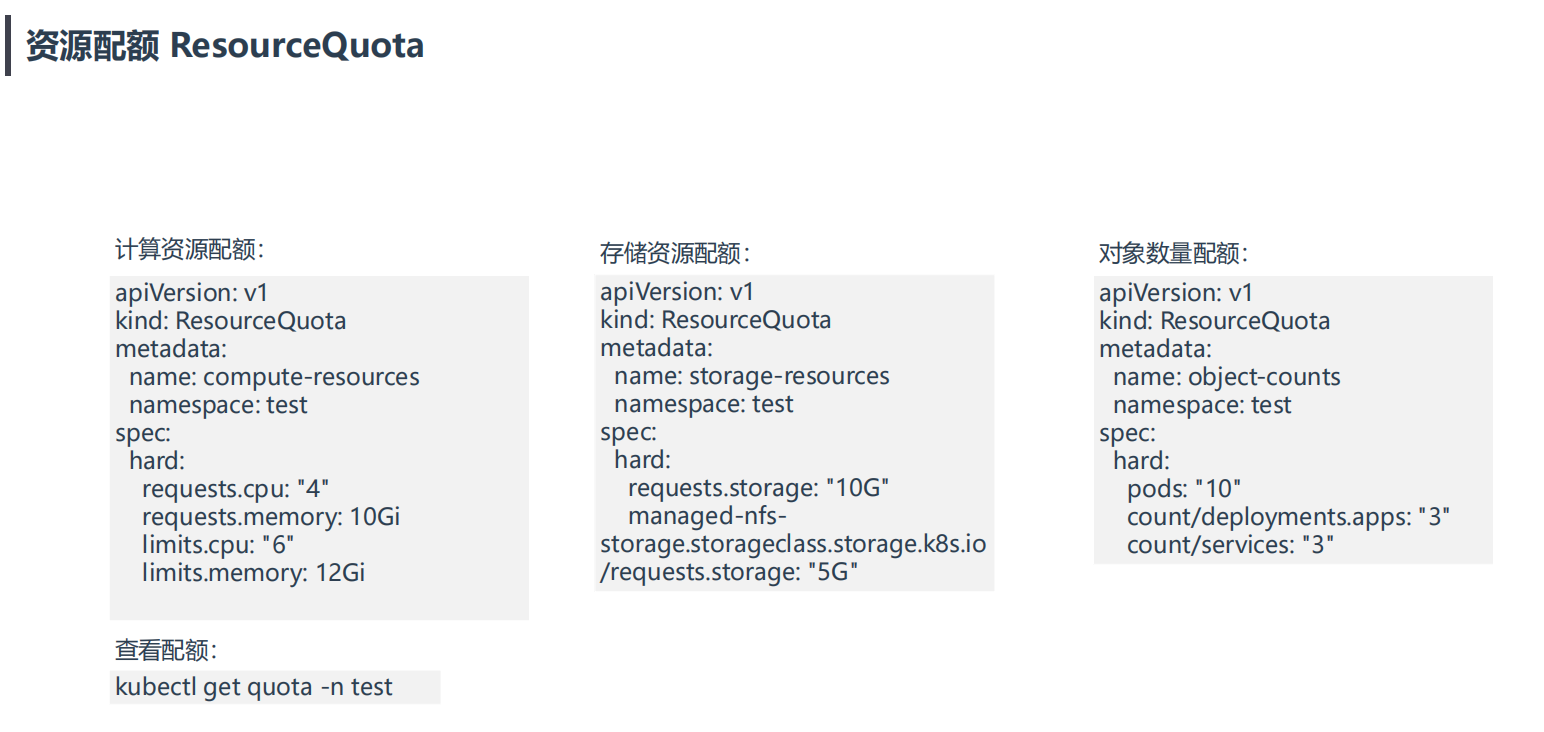

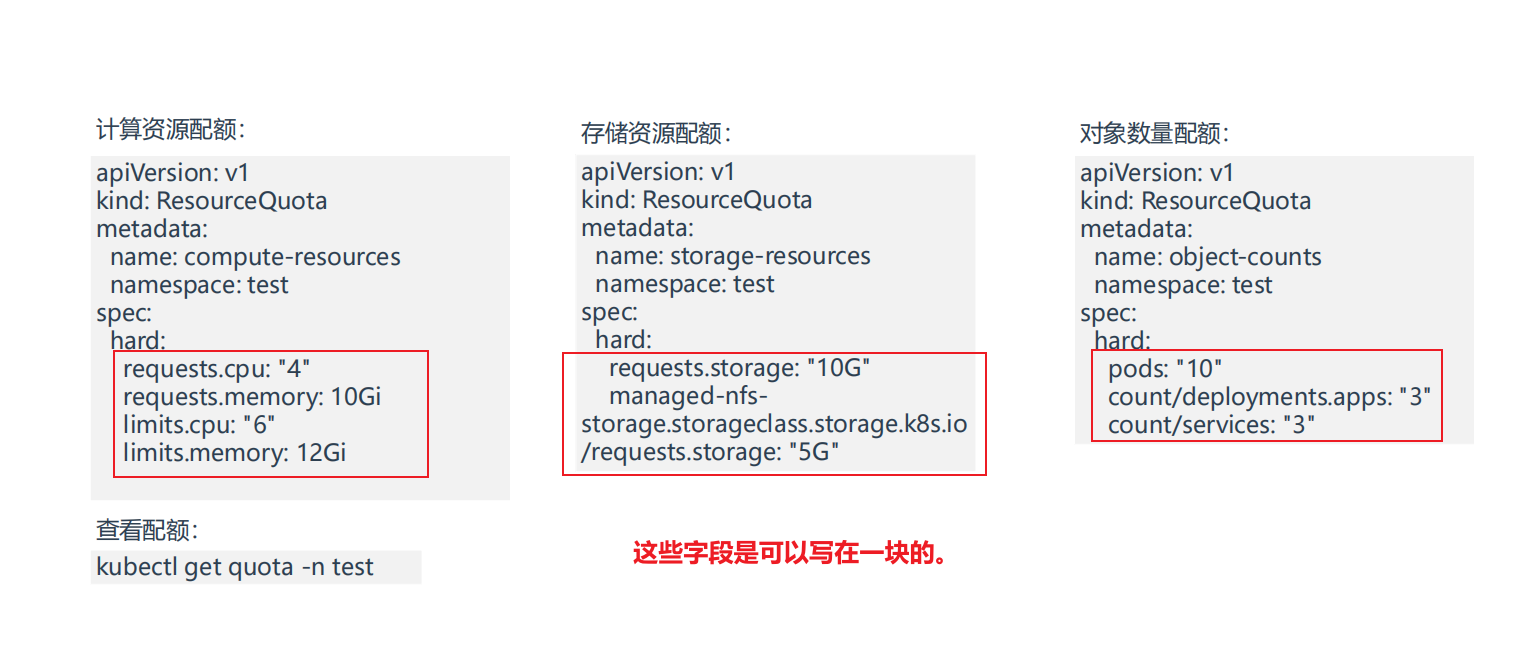

1. Compute resource quota

- My VMs are currently configured with 2 CPU cores and 2 GB of memory each; the cluster has 1 master and 2 worker nodes.

- Create the test namespace test

[root@k8s-master1 ~]#kubectl create ns test

namespace/test created

- Create the ResourceQuota object

[root@k8s-master1 ~]#mkdir ResourceQuota

[root@k8s-master1 ~]#cd ResourceQuota/

[root@k8s-master1 ResourceQuota]#vim compute-resources.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-resources
  namespace: test
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f compute-resources.yaml

resourcequota/compute-resources configured

# View the ResourceQuota that is now configured

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 2m37s requests.cpu: 0/1, requests.memory: 0/1Gi limits.cpu: 0/2, limits.memory: 0/2Gi

- Deploy a pod

[root@k8s-master1 ResourceQuota]#kubectl run web --image=nginx --dry-run=client -oyaml > pod.yaml

[root@k8s-master1 ResourceQuota]#vim pod.yaml

# Remove the unneeded fields and add resources (requests only at this point)

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 0.5
        memory: 0.5Gi

# Deploy the pod

[root@k8s-master1 ResourceQuota]#kubectl apply -f pod.yaml

Error from server (Forbidden): error when creating "pod.yaml": pods "web" is forbidden: failed quota: compute-resources: must specify limits.cpu,limits.memory

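# Note: the pod is rejected with "pods "web" is forbidden: failed quota: compute-resources: must specify limits.cpu,limits.memory". The compute-resources quota in the test namespace constrains limits as well as requests, so a pod that sets only requests is refused.

# Test: is the pod also rejected when resources is omitted entirely?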

cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    #resources:
    #  requests:
    #    cpu: 0.5
    #    memory: 0.5Gi

[root@k8s-master1 ResourceQuota]#kubectl apply -f pod.yaml

Error from server (Forbidden): error when creating "pod.yaml": pods "web" is forbidden: failed quota: compute-resources: must specify limits.cpu,limits.memory,requests.cpu,requests.memory

[root@k8s-master1 ResourceQuota]#kubectl get po -ntest

No resources found in test namespace.

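# Result: this is rejected as well.

# Conclusion: when a ResourceQuota in the namespace sets hard values for requests.cpu, requests.memory, limits.cpu, and limits.memory, every new pod must carry all four values, otherwise the request is rejected and the pod is not created. (A container that sets only limits gets requests equal to its limits, so the missing piece in the first attempt was the limits.)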
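
The two "must specify" errors above can be reproduced with a small model. This is only a sketch of the rule, not the API server's code: the function names are hypothetical, and the one behaviour it assumes is that a container's missing request defaults to its limit.

```python
# Illustrative model only; the real check is done by the API server
# when a pod is created in a namespace that has a ResourceQuota.

def effective_resources(resources):
    """Apply the rule that a missing request defaults to the limit."""
    limits = dict(resources.get("limits", {}))
    requests = dict(limits)
    requests.update(resources.get("requests", {}))
    return {"requests": requests, "limits": limits}


def missing_for_quota(resources, quota_keys):
    """List the quota-constrained compute keys the container does not set."""
    effective = effective_resources(resources)
    present = {
        f"{kind}.{name}"
        for kind, values in effective.items()
        for name in values
    }
    return sorted(key for key in quota_keys if key not in present)


# Keys constrained by the compute-resources quota in this tutorial.
quota_keys = ["requests.cpu", "requests.memory", "limits.cpu", "limits.memory"]

only_requests = {"requests": {"cpu": "0.5", "memory": "0.5Gi"}}
nothing = {}
complete = {
    "requests": {"cpu": "0.5", "memory": "0.5Gi"},
    "limits": {"cpu": "1", "memory": "1Gi"},
}

print(missing_for_quota(only_requests, quota_keys))  # ['limits.cpu', 'limits.memory']
print(missing_for_quota(nothing, quota_keys))
print(missing_for_quota(complete, quota_keys))       # []
```

The first two results list the same keys, in the same order, as the two error messages; the third corresponds to the pod that is accepted next.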

# Reconfigure the pod: add the limits

[root@k8s-master1 ResourceQuota]#vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 0.5
        memory: 0.5Gi
      limits:
        cpu: 1
        memory: 1Gi

# Deploy again:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pod.yaml

pod/web created

- Check the result:

[root@k8s-master1 ResourceQuota]#kubectl get po -ntest

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 26s

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 500m/1, requests.memory: 512Mi/1Gi limits.cpu: 1/2, limits.memory: 1Gi/2Gi

# The ResourceQuota now shows used/hard values for requests.cpu, requests.memory, limits.cpu, and limits.memory.
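
The manifest says cpu: 0.5 and memory: 0.5Gi, while the quota output reports 500m and 512Mi. A minimal sketch of the unit conversion, assuming only the common suffixes (m, Ki/Mi/Gi/Ti, k/M/G/T); parse_quantity is a hypothetical helper, not a Kubernetes API:

```python
from fractions import Fraction

BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_quantity(text):
    """Return the exact value of a quantity string (cores or bytes)."""
    text = str(text)
    if text.endswith("m"):  # milli-units, e.g. 500m CPU
        return Fraction(text[:-1]) / 1000
    for suffixes in (BINARY, DECIMAL):  # check Gi before G
        for suffix, factor in suffixes.items():
            if text.endswith(suffix):
                return Fraction(text[: -len(suffix)]) * factor
    return Fraction(text)


cpu_millicores = parse_quantity("0.5") * 1000
memory_mib = parse_quantity("0.5Gi") / BINARY["Mi"]

print(f"cpu 0.5 = {cpu_millicores}m")         # cpu 0.5 = 500m
print(f"memory 0.5Gi = {memory_mib}Mi")       # memory 0.5Gi = 512Mi
```

Note that G and Gi are different units: G is a power of ten and Gi a power of two, which matters for the storage quota later on.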

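- Next, keep creating pods in the test namespace. If the sum of the pods' CPU or memory requests would exceed the value defined in the ResourceQuota, the new pod should be rejected; the same applies when the sum of the limits would exceed the quota. Let's test both cases.

Test: the sum of the CPU requests exceeds the value defined in the ResourceQuota.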

[root@k8s-master1 ResourceQuota]#cp pod.yaml pod1.yaml

[root@k8s-master1 ResourceQuota]#vim pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web2
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 0.6
        memory: 0.5Gi
      limits:
        cpu: 1
        memory: 1Gi

# Deploy and observe:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pod1.yaml

Error from server (Forbidden): error when creating "pod1.yaml": pods "web2" is forbidden: exceeded quota: compute-resources, requested: requests.cpu=600m, used: requests.cpu=500m, limited: requests.cpu=1

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 500m/1, requests.memory: 512Mi/1Gi limits.cpu: 1/2, limits.memory: 1Gi/2Gi

Conclusion: if the sum of the pods' CPU or memory requests would exceed the value defined in the ResourceQuota, creating the new pod fails.

Test: the sum of the CPU limits exceeds the value defined in the ResourceQuota.

[root@k8s-master1 ResourceQuota]#cp pod.yaml pod2.yaml

[root@k8s-master1 ResourceQuota]#vim pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web3
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 0.5
        memory: 0.5Gi
      limits:
        cpu: 1.1
        memory: 1Gi

# Deploy and observe:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pod2.yaml

Error from server (Forbidden): error when creating "pod2.yaml": pods "web3" is forbidden: exceeded quota: compute-resources, requested: limits.cpu=1100m, used: limits.cpu=1, limited: limits.cpu=2

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 500m/1, requests.memory: 512Mi/1Gi limits.cpu: 1/2, limits.memory: 1Gi/2Gi

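Conclusion: if the sum of the pods' CPU or memory limits would exceed the value defined in the ResourceQuota, creating the new pod fails.

To summarize:

If a ResourceQuota in a namespace constrains requests.cpu, requests.memory, limits.cpu, and limits.memory, each pod must set those values, otherwise it is rejected and not created. In addition, if adding the pod would push the namespace's total requests or limits above the values defined in the ResourceQuota, the pod is rejected as well.

A pod that exactly uses up the remaining quota is still accepted: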

[root@k8s-master1 ResourceQuota]#cp pod.yaml pod3.yaml

[root@k8s-master1 ResourceQuota]#vim pod3.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web3
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 0.5
        memory: 0.5Gi
      limits:
        cpu: 1
        memory: 1Gi

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pod3.yaml

pod/web3 created

# Check:

[root@k8s-master1 ResourceQuota]#kubectl get po -ntest

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 16m

web3 1/1 Running 0 27s

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi
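
The three CPU outcomes above (web2 rejected, the first web3 rejected, the second web3 created) follow from one comparison: used + requested must not exceed hard. A minimal sketch with CPU in millicores; admit is a hypothetical helper that only models the arithmetic:

```python
def admit(hard, used, requested):
    """Return (allowed, message) for one pod; all CPU values are millicores."""
    for name, amount in requested.items():
        if name in hard and used.get(name, 0) + amount > hard[name]:
            return False, (
                f"exceeded quota: requested {name}={amount}, "
                f"used {name}={used.get(name, 0)}, limited {name}={hard[name]}"
            )
    # Only count the usage once every constrained resource fits.
    for name, amount in requested.items():
        if name in hard:
            used[name] = used.get(name, 0) + amount
    return True, "created"


hard = {"requests.cpu": 1000, "limits.cpu": 2000}
used = {"requests.cpu": 500, "limits.cpu": 1000}  # pod web is already running

web2 = admit(hard, used, {"requests.cpu": 600, "limits.cpu": 1000})
web3_first = admit(hard, used, {"requests.cpu": 500, "limits.cpu": 1100})
web3_second = admit(hard, used, {"requests.cpu": 500, "limits.cpu": 1000})

print(web2)
print(web3_first)
print(web3_second)
print(used)
```

The last pod brings usage to exactly 1000m requested and 2000m limited, matching the 1/1 and 2/2 shown by kubectl get quota: usage may equal the quota, it just may not exceed it.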

测试结束。?

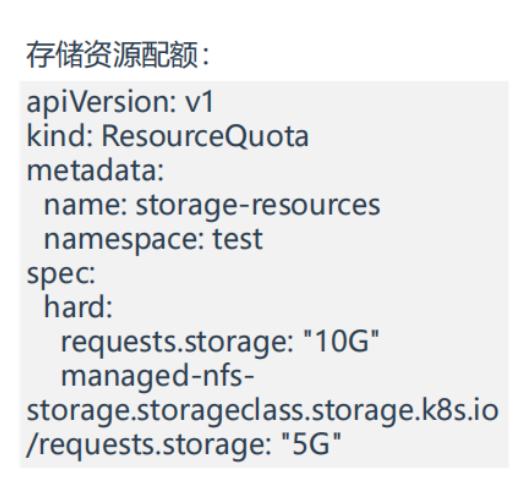

2.存储资源配额

- 部署ResourceQuota

[root@k8s-master1 ResourceQuota]#vim storage-resources.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-resources
  namespace: test
spec:
  hard:
    requests.storage: "10G"

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f storage-resources.yaml

resourcequota/storage-resources created

# Check:

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi

storage-resources 8s requests.storage: 0/10G

- Create a PVC to test

[root@k8s-master1 ResourceQuota]#vim pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
  namespace: test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pvc.yaml

persistentvolumeclaim/pvc created

# Check:

[root@k8s-master1 ResourceQuota]#kubectl get pvc -ntest

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

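pvc Pending # Pending does not affect this experiment; the PVC stays Pending only because there is no PV to bind to

# After the PVC is created, the requests.storage usage in the ResourceQuota has changed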

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi

storage-resources 117s requests.storage: 8Gi/10G

- Create another PVC. If the sum of requests.storage would exceed the value defined in the ResourceQuota, the request should be rejected

[root@k8s-master1 ResourceQuota]#cp pvc.yaml pvc1.yaml

[root@k8s-master1 ResourceQuota]#vim pvc1.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
  namespace: test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2.1Gi

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pvc1.yaml

Error from server (Forbidden): error when creating "pvc1.yaml": persistentvolumeclaims "pvc1" is forbidden: exceeded quota: storage-resources, requested: requests.storage=2254857831, used: requests.storage=8Gi, limited: requests.storage=10G

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi

storage-resources 6m29s requests.storage: 8Gi/10G

# The request is rejected, as expected.

# Try another PVC, this time requesting 2Gi:

[root@k8s-master1 ResourceQuota]#cp pvc.yaml pvc2.yaml

[root@k8s-master1 ResourceQuota]#vim pvc2.yaml

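apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc2
  namespace: test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

# Deploy. Note: this claim is rejected even though 8Gi + 2Gi looks like exactly 10. The quota is written as 10G (10 x 10^9 bytes), which is smaller than 10Gi (10 x 2^30 bytes), so 8Gi + 2Gi exceeds it.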

[root@k8s-master1 ResourceQuota]#kubectl apply -f pvc2.yaml

Error from server (Forbidden): error when creating "pvc2.yaml": persistentvolumeclaims "pvc2" is forbidden: exceeded quota: storage-resources, requested: requests.storage=2Gi, used: requests.storage=8Gi, limited: requests.storage=10G

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi

storage-resources 6m29s requests.storage: 8Gi/10G
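
The 2Gi rejection comes down to units rather than to a rule that a storage quota cannot be filled. A short worked calculation, assuming the claim sizes are rounded up to whole bytes (which matches the 2254857831 reported for 2.1Gi above); fits is a hypothetical helper:

```python
import math
from fractions import Fraction

GI = 2**30   # binary suffix Gi
G = 10**9    # decimal suffix G

hard_bytes = 10 * G    # quota: requests.storage: "10G"
used_bytes = 8 * GI    # the first PVC requested 8Gi


def fits(request_bytes):
    """True when the claim still fits into the remaining quota."""
    return used_bytes + request_bytes <= hard_bytes


claims = {
    "2.1Gi": math.ceil(Fraction("2.1") * GI),
    "2Gi": 2 * GI,
    "1Gi": 1 * GI,
}

print("remaining bytes:", hard_bytes - used_bytes)
for label, size in claims.items():
    print(label, size, "accepted" if fits(size) else "rejected")
```

Only about 1.31Gi remains after the 8Gi claim, so 2Gi and 2.1Gi are rejected while a 1Gi claim fits. Writing the quota as 10Gi instead of 10G would let 8Gi + 2Gi through.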

# Now create pvc3.yaml, requesting 1Gi

[root@k8s-master1 ResourceQuota]#cp pvc.yaml pvc3.yaml

[root@k8s-master1 ResourceQuota]#vim pvc3.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc3
  namespace: test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f pvc3.yaml

persistentvolumeclaim/pvc3 created

# Check: as expected, the PVC is created.

[root@k8s-master1 ResourceQuota]#kubectl get pvc -ntest

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc Pending 9m27s

pvc3 Pending 9s

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 8h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi

storage-resources 11m requests.storage: 9Gi/10G

[root@k8s-master1 ResourceQuota]#

测试结束。?

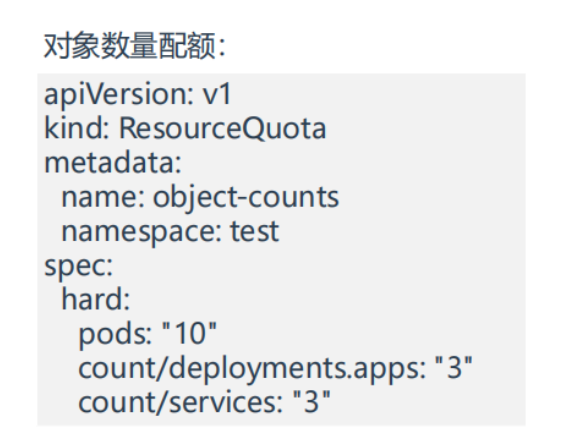

3.对象数量配额

- 我们来看下当前环境

[root@k8s-master1 ResourceQuota]#kubectl get po -ntest

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 41m

web3 1/1 Running 0 25m

- Deploy the ResourceQuota

[root@k8s-master1 ResourceQuota]#vim object-counts.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: object-counts
  namespace: test
spec:
  hard:
    pods: "4"
    count/deployments.apps: "3"
    count/services: "3"

# Deploy:

[root@k8s-master1 ResourceQuota]#kubectl apply -f object-counts.yaml

resourcequota/object-counts created

# Check:

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

compute-resources 9h requests.cpu: 1/1, requests.memory: 1Gi/1Gi limits.cpu: 2/2, limits.memory: 2Gi/2Gi

object-counts 15s count/deployments.apps: 0/3, count/services: 0/3, pods: 2/4

storage-resources 16m requests.storage: 9Gi/10G

[root@k8s-master1 ResourceQuota]#

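- Test

# Two pods already exist and the ResourceQuota allows at most 4, so let's create more test pods.

# To keep the test simple, first delete the compute-resources quota created earlier; otherwise a pod created with kubectl run (no requests or limits) would be rejected.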

[root@k8s-master1 ResourceQuota]#kubectl delete -f compute-resources.yaml

resourcequota "compute-resources" deleted

[root@k8s-master1 ResourceQuota]#kubectl get quota -ntest

NAME AGE REQUEST LIMIT

object-counts 3m23s count/deployments.apps: 0/3, count/services: 0/3, pods: 2/4

storage-resources 19m requests.storage: 9Gi/10G

# Create a few more test pods

[root@k8s-master1 ResourceQuota]#kubectl get po -ntest

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 47m

web3 1/1 Running 0 32m

[root@k8s-master1 ResourceQuota]#kubectl run web4 --image=nginx -ntest

pod/web4 created

[root@k8s-master1 ResourceQuota]#kubectl run web5 --image=nginx -ntest

Error from server (Forbidden): pods "web5" is forbidden: exceeded quota: object-counts, requested: pods=1, used: pods=4, limited: pods=4

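# The request is rejected once the pod quota has been reached.

End of test.

Summary

- Notes

- If a ResourceQuota in a namespace constrains requests.cpu, requests.memory, limits.cpu, and limits.memory, pods must set those values, otherwise they are rejected and not created.

- If adding a pod would push the total requests or limits above the values defined in the ResourceQuota, the pod is rejected. Usage may equal the quota exactly, and this also holds for requests.storage and for object counts: in the storage test the 2Gi claim failed only because the quota was written as 10G (decimal), which is smaller than 10Gi, and in the object-count test usage reached pods=4 out of 4 before the next pod was refused.

- These fields can all be written together in a single ResourceQuota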

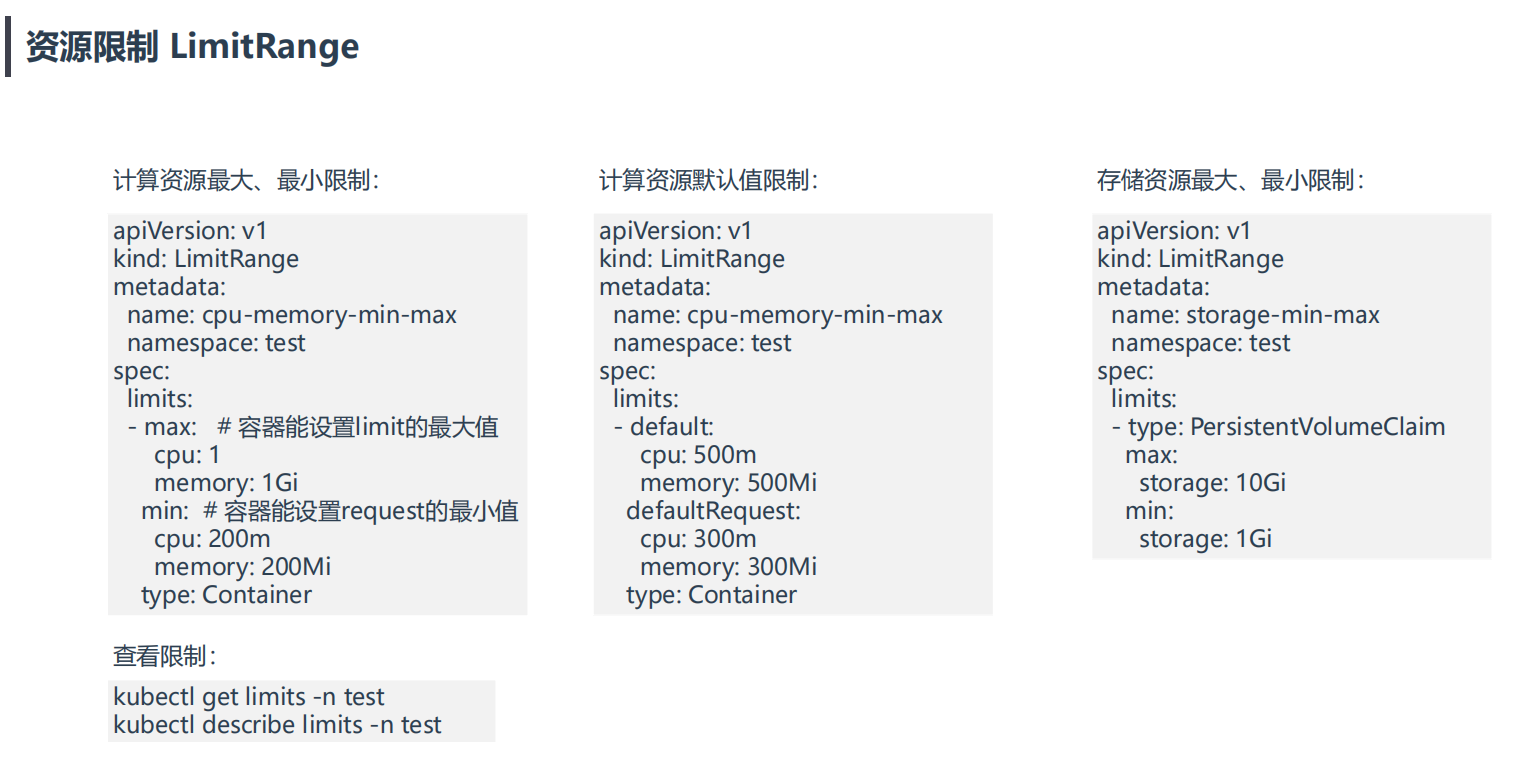

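LimitRange

LimitRange: constrains the minimum and maximum resources of individual containers.

By default, containers in a K8s cluster have no limits on compute resources, so a single container may consume so much that other containers can no longer work properly. A LimitRange lets you define default CPU and memory requests for containers, or an upper bound.

What a LimitRange can constrain:

• The minimum and maximum of requests.cpu/memory and limits.cpu/memory that a container may set

• The default values of requests.cpu/memory and limits.cpu/memory for a container

• The minimum and maximum of requests.storage that a PVC may set

Hands-on: LimitRange, 2023.5.25 (tested successfully)

- Lab environment

1. Windows 10 with VMware Workstation virtual machines;

2. K8s cluster: 3 CentOS 7.6 (1810) VMs, 1 master node and 2 worker nodes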

k8s version:v1.20.0

docker://20.10.7

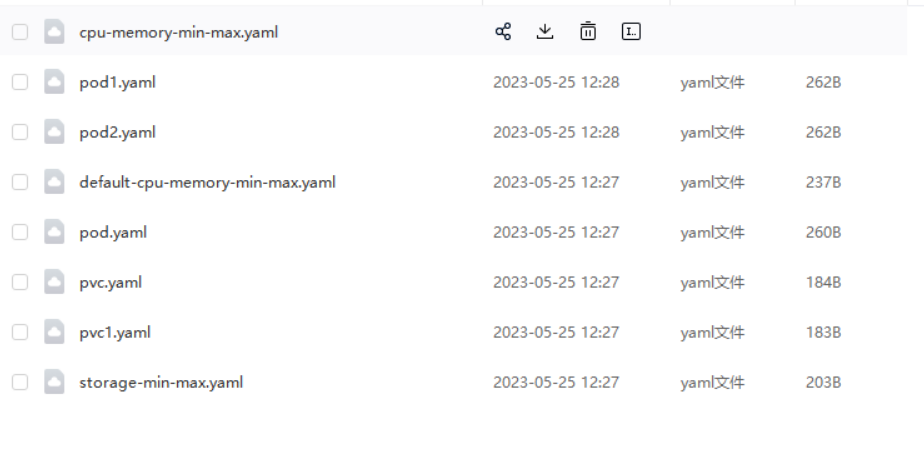

- 实验软件

链接:https://pan.baidu.com/s/1dTuFjqToJaCiHHvtYH9xiw?pwd=0820

提取码:0820

2023.5.25-LimitRange-cdoe

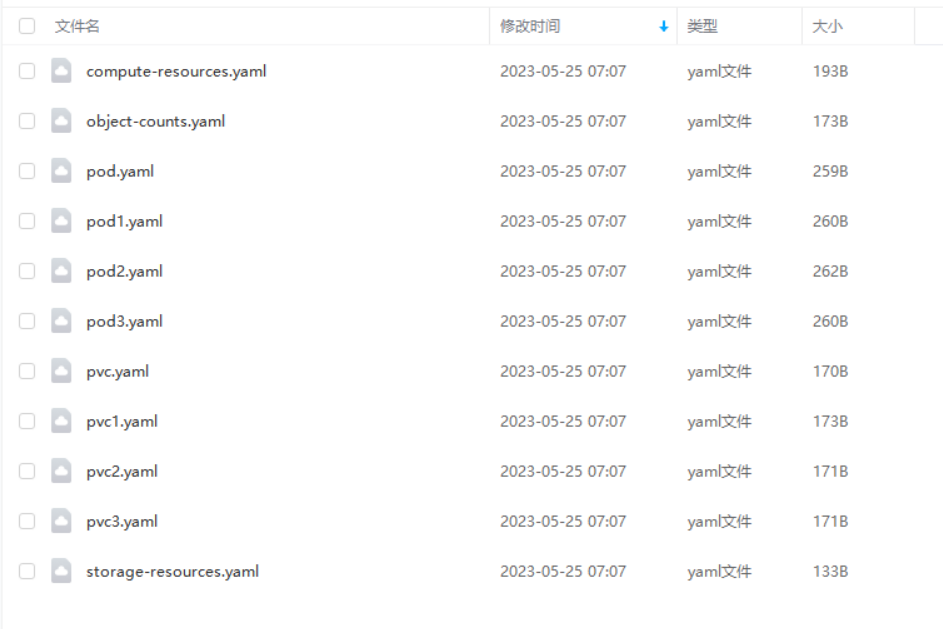

- 为了保持环境的纯洁性,这里删除上面的ResourceQuota配置

[root@k8s-master1 ~]#cd ResourceQuota/

[root@k8s-master1 ResourceQuota]#ls

compute-resources.yaml object-counts.yaml pod1.yaml pod2.yaml pod3.yaml pod.yaml pvc1.yaml pvc2.yaml pvc3.yaml pvc.yaml storage-resources.yaml

[root@k8s-master1 ResourceQuota]#kubectl delete -f .

resourcequota "object-counts" deleted

pod "web" deleted

pod "web3" deleted

pod "web3" deleted

persistentvolumeclaim "pvc" deleted

persistentvolumeclaim "pvc3" deleted

resourcequota "storage-resources" deleted

Error from server (NotFound): error when deleting "compute-resources.yaml": resourcequotas "compute-resources" not found

Error from server (NotFound): error when deleting "pod1.yaml": pods "web2" not found

Error from server (NotFound): error when deleting "pvc1.yaml": persistentvolumeclaims "pvc1" not found

Error from server (NotFound): error when deleting "pvc2.yaml": persistentvolumeclaims "pvc2" not found

[root@k8s-master1 ResourceQuota]#

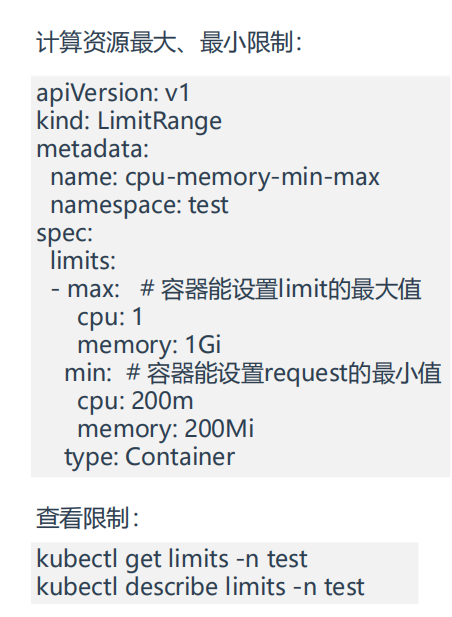

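1. Compute resource minimum and maximum

- Conclusion:

If a LimitRange is configured in a namespace, the request of every container in a newly created pod must not be lower than the min defined in the LimitRange, and its limit must not be higher than the max, otherwise the pod is rejected.

Let's verify this.

- Deploy the LimitRange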

[root@k8s-master1 ~]#mkdir LimitRange

[root@k8s-master1 ~]#cd LimitRange/

[root@k8s-master1 LimitRange]#vim cpu-memory-min-max.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: cpu-memory-min-max
  namespace: test
spec:
  limits:
  - max: # the largest limit a container may set
      cpu: 1
      memory: 1Gi
    min: # the smallest request a container may set
      cpu: 200m
      memory: 200Mi
    type: Container

# Deploy:

[root@k8s-master1 LimitRange]#kubectl apply -f cpu-memory-min-max.yaml

limitrange/cpu-memory-min-max created

# Check:

[root@k8s-master1 LimitRange]#kubectl get limits -n test

NAME CREATED AT

cpu-memory-min-max 2023-05-24T23:26:13Z

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container memory 200Mi 1Gi 1Gi 1Gi -

Container cpu 200m 1 1 1 -

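# Note: Default Request and Default Limit are populated even though the manifest sets neither. For type Container, an unset default limit is taken from max, and an unset default request is taken from the default limit.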
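
The describe output shows both defaults equal to max although the manifest only sets min and max. A sketch of the derivation that reproduces this output, under the assumption that the API server fills the defaults of a Container item in this order (max to default limit, default limit to default request, then min to default request); fill_container_defaults is a hypothetical name:

```python
def fill_container_defaults(item):
    """Return a copy of a Container LimitRange item with derived defaults."""
    default = dict(item.get("default", {}))
    default_request = dict(item.get("defaultRequest", {}))
    # 1. An unset default limit is taken from max.
    for name, value in item.get("max", {}).items():
        default.setdefault(name, value)
    # 2. An unset default request is taken from the default limit.
    for name, value in default.items():
        default_request.setdefault(name, value)
    # 3. Anything still unset falls back to min.
    for name, value in item.get("min", {}).items():
        default_request.setdefault(name, value)
    return {**item, "default": default, "defaultRequest": default_request}


item = {
    "type": "Container",
    "max": {"cpu": "1", "memory": "1Gi"},
    "min": {"cpu": "200m", "memory": "200Mi"},
}
filled = fill_container_defaults(item)
print(filled["default"])
print(filled["defaultRequest"])
```

Both printed dictionaries equal the max values, which is what the Default Request and Default Limit columns show. Setting default and defaultRequest explicitly avoids every unconfigured container requesting a full CPU and 1Gi.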

- Now create one pod whose request is below the minimum and one whose limit is above the maximum

A request below the minimum:

[root@k8s-master1 LimitRange]#vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 100m
        memory: 200Mi
      limits:
        cpu: 1
        memory: 1Gi

# Deploy (rejected, as expected):

[root@k8s-master1 LimitRange]#kubectl apply -f pod.yaml

Error from server (Forbidden): error when creating "pod.yaml": pods "web" is forbidden: minimum cpu usage per Container is 200m, but request is 100m

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 200m 1 1 1 -

Container memory 200Mi 1Gi 1Gi 1Gi -

A limit above the maximum:

[root@k8s-master1 LimitRange]#cp pod.yaml pod1.yaml

[root@k8s-master1 LimitRange]#vim pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 200m
        memory: 200Mi
      limits:
        cpu: 1.1
        memory: 1Gi

# Deploy (rejected, as expected):

[root@k8s-master1 LimitRange]#kubectl apply -f pod1.yaml

Error from server (Forbidden): error when creating "pod1.yaml": pods "web" is forbidden: maximum cpu usage per Container is 1, but limit is 1100m

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container memory 200Mi 1Gi 1Gi 1Gi -

Container cpu 200m 1 1 1 -

A pod within the allowed range:

[root@k8s-master1 LimitRange]#cp pod.yaml pod2.yaml

[root@k8s-master1 LimitRange]#vim pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: web
  name: web
  namespace: test
spec:
  containers:
  - image: nginx
    name: web
    resources:
      requests:
        cpu: 250m
        memory: 200Mi
      limits:
        cpu: 0.9
        memory: 1Gi

# Deploy:

[root@k8s-master1 LimitRange]#kubectl apply -f pod2.yaml

pod/web created

# Check:

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 200m 1 1 1 -

Container memory 200Mi 1Gi 1Gi 1Gi -

[root@k8s-master1 LimitRange]#kubectl get po -ntest

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 20s

web4 1/1 Running 0 52m

End of test.
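
The three pods in this test can be checked against the LimitRange with a simplified model. It covers only the two rules exercised here (request not below min, limit not above max) and uses plain integers, CPU in millicores and memory in Mi; violations is a hypothetical helper, and the real admission check covers more cases:

```python
# cpu in millicores, memory in Mi (values from cpu-memory-min-max.yaml)
LIMIT_RANGE = {
    "min": {"cpu": 200, "memory": 200},
    "max": {"cpu": 1000, "memory": 1024},
}


def violations(container, limit_range):
    """Return a list of messages for every min/max rule the container breaks."""
    problems = []
    for name, minimum in limit_range["min"].items():
        request = container["requests"][name]
        if request < minimum:
            problems.append(
                f"minimum {name} usage per Container is {minimum}, but request is {request}"
            )
    for name, maximum in limit_range["max"].items():
        limit = container["limits"][name]
        if limit > maximum:
            problems.append(
                f"maximum {name} usage per Container is {maximum}, but limit is {limit}"
            )
    return problems


pod = {"requests": {"cpu": 100, "memory": 200}, "limits": {"cpu": 1000, "memory": 1024}}
pod1 = {"requests": {"cpu": 200, "memory": 200}, "limits": {"cpu": 1100, "memory": 1024}}
pod2 = {"requests": {"cpu": 250, "memory": 200}, "limits": {"cpu": 900, "memory": 1024}}

for name, spec in [("pod.yaml", pod), ("pod1.yaml", pod1), ("pod2.yaml", pod2)]:
    print(name, violations(spec, LIMIT_RANGE) or "accepted")
```

Unlike a ResourceQuota, these checks apply to each container on its own; nothing is summed across the namespace.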

2. Compute resource default values

- Conclusion:

If a LimitRange in the namespace provides container defaults (set explicitly, or derived from max), a pod that sets no requests or limits is still created, and K8s assigns it the default request and limit.

- First, check whether pods in the default namespace get default requests and limits

[root@k8s-master1 LimitRange]#kubectl get limits

No resources found in default namespace.

[root@k8s-master1 LimitRange]#kubectl get po

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 6 3d10h

busybox2 1/1 Running 6 3d10h

py-k8s 1/1 Running 1 24h

[root@k8s-master1 LimitRange]#kubectl describe po busybox

Name: busybox

Namespace: default

Priority: 0

Node: k8s-node2/172.29.9.33

Start Time: Sun, 21 May 2023 20:44:21 +0800

Labels: run=busybox

Annotations: cni.projectcalico.org/podIP: 10.244.169.162/32

……

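# Pods in the default namespace have no default requests or limits, so they can use up all of the host's resources

- Now create a new pod in the test namespace and check whether it gets default requests and limits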

[root@k8s-master1 LimitRange]#kubectl get limits -ntest

NAME CREATED AT

cpu-memory-min-max 2023-05-24T23:26:13Z

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 200m 1 1 1 -

Container memory 200Mi 1Gi 1Gi 1Gi -

[root@k8s-master1 LimitRange]#kubectl run web520 --image=nginx -ntest

pod/web520 created

[root@k8s-master1 LimitRange]#kubectl describe pod web520 -ntest

Name: web520

Namespace: test

Priority: 0

Node: k8s-node2/172.29.9.33

Start Time: Thu, 25 May 2023 07:44:16 +0800

Labels: run=web520

Annotations: cni.projectcalico.org/podIP: 10.244.169.167/32

cni.projectcalico.org/podIPs: 10.244.169.167/32

kubernetes.io/limit-ranger: LimitRanger plugin set: cpu, memory request for container web520; cpu, memory limit for container web520

Status: Running

IP: 10.244.169.167

IPs:

IP: 10.244.169.167

Containers:

web520:

Container ID: docker://6cf523d8b462fdfcb44348e4af5247f8c35e5cb0cf4c7e5b0dadaeea76aa8bec

Image: nginx

Image ID: docker-pullable://nginx@sha256:af296b188c7b7df99ba960ca614439c99cb7cf252ed7bbc23e90cfda59092305

Port: <none>

Host Port: <none>

State: Running

Started: Thu, 25 May 2023 07:44:26 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 1

memory: 1Gi

Requests:

cpu: 1

memory: 1Gi

Environment: <none>

# The defaults have been applied

- Next, change the default values

[root@k8s-master1 LimitRange]#vim default-cpu-memory-min-max.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: default-cpu-memory-min-max
  namespace: test
spec:
  limits:
  - default:
      cpu: 500m
      memory: 500Mi
    defaultRequest:
      cpu: 300m
      memory: 300Mi
    type: Container

# Deploy:

[root@k8s-master1 LimitRange]#kubectl apply -f default-cpu-memory-min-max.yaml

limitrange/default-cpu-memory-min-max created

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 200m 1 1 1 -

Container memory 200Mi 1Gi 1Gi 1Gi -

Name: default-cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu - - 300m 500m -

Container memory - - 300Mi 500Mi -

# The new LimitRange carries different default values

# Create another pod and observe

[root@k8s-master1 LimitRange]#kubectl run web1314 --image=nginx -ntest

pod/web1314 created

[root@k8s-master1 LimitRange]#kubectl describe pod web1314 -ntest

Name: web1314

Namespace: test

Priority: 0

Node: k8s-node1/172.29.9.32

Start Time: Thu, 25 May 2023 07:51:28 +0800

Labels: run=web1314

Annotations: cni.projectcalico.org/podIP: 10.244.36.101/32

cni.projectcalico.org/podIPs: 10.244.36.101/32

kubernetes.io/limit-ranger: LimitRanger plugin set: cpu, memory request for container web1314; cpu, memory limit for container web1314

Status: Running

IP: 10.244.36.101

IPs:

IP: 10.244.36.101

Containers:

web1314:

Container ID: docker://9cd7d7d0ebf7adb9e71b33749c26dd9e9c0149c3c1e427b50dd733bd3989d75b

Image: nginx

Image ID: docker-pullable://nginx@sha256:af296b188c7b7df99ba960ca614439c99cb7cf252ed7bbc23e90cfda59092305

Port: <none>

Host Port: <none>

State: Running

Started: Thu, 25 May 2023 07:51:30 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 1

memory: 1Gi

Requests:

cpu: 1

memory: 1Gi

Environment: <none>

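# Note: the result is not what was intended; the pod still received 1 CPU and 1Gi.

# The test namespace now has two LimitRange objects with conflicting defaults. In this run the defaults of the first one (cpu-memory-min-max) were applied. The Kubernetes documentation states that when several LimitRange objects exist in a namespace, which default gets applied is not deterministic, so it is safer to define defaults in only one of them.

# So change the defaults directly in the first LimitRange and observe: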

[root@k8s-master1 LimitRange]#vim cpu-memory-min-max.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: cpu-memory-min-max
  namespace: test
spec:
  limits:
  - max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 200m
      memory: 200Mi
    default:
      cpu: 600m
      memory: 600Mi
    defaultRequest:
      cpu: 400m
      memory: 400Mi
    type: Container

# Deploy and check:

[root@k8s-master1 LimitRange]#kubectl apply -f cpu-memory-min-max.yaml

limitrange/cpu-memory-min-max configured

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 200m 1 400m 600m -

Container memory 200Mi 1Gi 400Mi 600Mi -

Name: default-cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu - - 300m 500m -

Container memory - - 300Mi 500Mi -

# Deploy another new pod

[root@k8s-master1 LimitRange]#kubectl run web1315 --image=nginx -ntest

pod/web1315 created

[root@k8s-master1 LimitRange]#kubectl describe pod web1315 -ntest

Name: web1315

Namespace: test

Priority: 0

Node: k8s-node2/172.29.9.33

Start Time: Thu, 25 May 2023 07:57:39 +0800

Labels: run=web1315

Annotations: cni.projectcalico.org/podIP: 10.244.169.168/32

cni.projectcalico.org/podIPs: 10.244.169.168/32

kubernetes.io/limit-ranger: LimitRanger plugin set: cpu, memory request for container web1315; cpu, memory limit for container web1315

Status: Running

IP: 10.244.169.168

IPs:

IP: 10.244.169.168

Containers:

web1315:

Container ID: docker://a9e19c184eaa9513078b46d8d2d8ce5dd0a09dca00772052345d59040c57346e

Image: nginx

Image ID: docker-pullable://nginx@sha256:af296b188c7b7df99ba960ca614439c99cb7cf252ed7bbc23e90cfda59092305

Port: <none>

Host Port: <none>

State: Running

Started: Thu, 25 May 2023 07:57:42 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 600m

memory: 600Mi

Requests:

cpu: 400m

memory: 400Mi

Environment: <none>

Mounts:

# The new pod now picks up the changed defaults, as expected.
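
Both observations (web1314 still getting 1 CPU / 1Gi, web1315 getting 600m / 400m) are consistent with a model in which defaults only fill fields that are still unset, so whichever LimitRange is visited first wins. This is an assumption made to explain the output, not a documented guarantee, and the visiting order used below is simply the order seen in this run; apply_defaults is a hypothetical helper:

```python
def apply_defaults(container, limit_range_items):
    """Fill unset requests/limits, visiting LimitRange items in list order."""
    requests = dict(container.get("requests", {}))
    limits = dict(container.get("limits", {}))
    for item in limit_range_items:
        for name, value in item.get("default", {}).items():
            limits.setdefault(name, value)       # keep a value that is already set
        for name, value in item.get("defaultRequest", {}).items():
            requests.setdefault(name, value)
    return {"requests": requests, "limits": limits}


# Effective defaults of cpu-memory-min-max before and after the edit.
first_before = {
    "default": {"cpu": "1", "memory": "1Gi"},
    "defaultRequest": {"cpu": "1", "memory": "1Gi"},
}
first_after = {
    "default": {"cpu": "600m", "memory": "600Mi"},
    "defaultRequest": {"cpu": "400m", "memory": "400Mi"},
}
second = {
    "default": {"cpu": "500m", "memory": "500Mi"},
    "defaultRequest": {"cpu": "300m", "memory": "300Mi"},
}

web1314 = apply_defaults({}, [first_before, second])
web1315 = apply_defaults({}, [first_after, second])
print(web1314)
print(web1315)
```

Because the order is not something to rely on, keeping a single LimitRange with defaults per namespace is the more predictable setup.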

测试结束。?

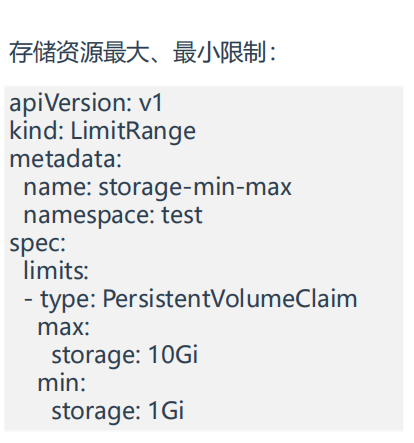

3.存储资源最大、最小限制

- 和前面的计算资源一样,接下来进行测试

[root@k8s-master1 LimitRange]#vim storage-min-max.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: storage-min-max
  namespace: test
spec:
  limits:
  - type: PersistentVolumeClaim
    max:
      storage: 10Gi
    min:
      storage: 1Gi

# Deploy:

[root@k8s-master1 LimitRange]#kubectl apply -f storage-min-max.yaml

limitrange/storage-min-max created

# Create a PVC

[root@k8s-master1 LimitRange]#vim pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-storage-test
  namespace: test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 11Gi

# Deploy the PVC:

[root@k8s-master1 LimitRange]#kubectl apply -f pvc.yaml

Error from server (Forbidden): error when creating "pvc.yaml": persistentvolumeclaims "pvc-storage-test" is forbidden: maximum storage usage per PersistentVolumeClaim is 10Gi, but request is 11Gi

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 200m 1 400m 600m -

Container memory 200Mi 1Gi 400Mi 600Mi -

Name: default-cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu - - 300m 500m -

Container memory - - 300Mi 500Mi -

Name: storage-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

PersistentVolumeClaim storage 1Gi 10Gi - - -

# The claim requests 11Gi, but the LimitRange allows at most 10Gi per PVC, so it is rejected.

# Create another PVC

[root@k8s-master1 LimitRange]#cp pvc.yaml pvc1.yaml

[root@k8s-master1 LimitRange]#vim pvc1.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-storage-test
  namespace: test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi

# Deploy:

[root@k8s-master1 LimitRange]#kubectl apply -f pvc1.yaml

persistentvolumeclaim/pvc-storage-test created

[root@k8s-master1 LimitRange]#kubectl get pvc -ntest

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-storage-test Pending 30s

[root@k8s-master1 LimitRange]#kubectl describe limits -ntest

Name: cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container memory 200Mi 1Gi 400Mi 600Mi -

Container cpu 200m 1 400m 600m -

Name: default-cpu-memory-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container memory - - 300Mi 500Mi -

Container cpu - - 300m 500m -

Name: storage-min-max

Namespace: test

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

PersistentVolumeClaim storage 1Gi 10Gi - - -

# The PVC is created, as expected.

End of test.

