
Some papers on deep learning interpretability

|2020|CVPR|[Explaining Knowledge Distillation by Quantifying the Knowledge](https://arxiv.org/pdf/2003.03622.pdf)

|2020|CVPR|[High-frequency Component Helps Explain the Generalization of Convolutional Neural Networks](https://openaccess.thecvf.com/content_CVPR_2020/papers/Wang_High-Frequency_Component_Helps_Explain_the_Generalization_of_Convolutional_Neural_Networks_CVPR_2020_paper.pdf)

|2020|CVPRW|[Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks](https://openaccess.thecvf.com/content_CVPRW_2020/papers/w1/Wang_Score-CAM_Score-Weighted_Visual_Explanations_for_Convolutional_Neural_Networks_CVPRW_2020_paper.pdf)

|2020|ICLR|[Knowledge consistency between neural networks and beyond](https://arxiv.org/pdf/1908.01581.pdf)

|2020|ICLR|[Interpretable Complex-Valued Neural Networks for Privacy Protection](https://arxiv.org/pdf/1901.09546.pdf)

|2019|AI|[Explanation in artificial intelligence: Insights from the social sciences](https://arxiv.org/pdf/1706.07269.pdf)

|2019|NMI|[Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead](https://arxiv.org/pdf/1811.10154.pdf)

|2019|NeurIPS|[Can you trust your model's uncertainty? Evaluating predictive uncertainty under dataset shift](https://papers.nips.cc/paper/9547-can-you-trust-your-models-uncertainty-evaluating-predictive-uncertainty-under-dataset-shift.pdf)

|2019|NeurIPS|[This looks like that: deep learning for interpretable image recognition](http://papers.nips.cc/paper/9095-this-looks-like-that-deep-learning-for-interpretable-image-recognition.pdf)|665|[PyTorch](https://github.com/cfchen-duke/ProtoPNet)|

|2019|NeurIPS|[A benchmark for interpretability methods in deep neural networks](https://papers.nips.cc/paper/9167-a-benchmark-for-interpretability-methods-in-deep-neural-networks.pdf)

|2019|NeurIPS|[Full-gradient representation for neural network visualization](http://papers.nips.cc/paper/8666-full-gradient-representation-for-neural-network-visualization.pdf)

|2019|NeurIPS|[On the (In)fidelity and Sensitivity of Explanations](https://papers.nips.cc/paper/9278-on-the-infidelity-and-sensitivity-of-explanations.pdf)

|2019|NeurIPS|[Towards Automatic Concept-based Explanations](http://papers.nips.cc/paper/9126-towards-automatic-concept-based-explanations.pdf)

|2019|NeurIPS|[CXPlain: Causal explanations for model interpretation under uncertainty](http://papers.nips.cc/paper/9211-cxplain-causal-explanations-for-model-interpretation-under-uncertainty.pdf)

|2019|CVPR|[Interpreting CNNs via Decision Trees](http://openaccess.thecvf.com/content_CVPR_2019/papers/Zhang_Interpreting_CNNs_via_Decision_Trees_CVPR_2019_paper.pdf)

|2019|CVPR|[From Recognition to Cognition: Visual Commonsense Reasoning](http://openaccess.thecvf.com/content_CVPR_2019/papers/Zellers_From_Recognition_to_Cognition_Visual_Commonsense_Reasoning_CVPR_2019_paper.pdf)

|2019|CVPR|[Attention branch network: Learning of attention mechanism for visual explanation](http://openaccess.thecvf.com/content_CVPR_2019/papers/Fukui_Attention_Branch_Network_Learning_of_Attention_Mechanism_for_Visual_Explanation_CVPR_2019_paper.pdf)

|2019|CVPR|[Interpretable and fine-grained visual explanations for convolutional neural networks](http://openaccess.thecvf.com/content_CVPR_2019/papers/Wagner_Interpretable_and_Fine-Grained_Visual_Explanations_for_Convolutional_Neural_Networks_CVPR_2019_paper.pdf)

|2019|CVPR|[Learning to Explain with Complemental Examples](http://openaccess.thecvf.com/content_CVPR_2019/papers/Kanehira_Learning_to_Explain_With_Complemental_Examples_CVPR_2019_paper.pdf)

|2019|CVPR|[Revealing Scenes by Inverting Structure from Motion Reconstructions](http://openaccess.thecvf.com/content_CVPR_2019/papers/Pittaluga_Revealing_Scenes_by_Inverting_Structure_From_Motion_Reconstructions_CVPR_2019_paper.pdf)

|2019|CVPR|[Multimodal Explanations by Predicting Counterfactuality in Videos](http://openaccess.thecvf.com/content_CVPR_2019/papers/Kanehira_Multimodal_Explanations_by_Predicting_Counterfactuality_in_Videos_CVPR_2019_paper.pdf)

|2019|CVPRW|[Visualizing the Resilience of Deep Convolutional Network Interpretations](http://openaccess.thecvf.com/content_CVPRW_2019/papers/Explainable%20AI/Vasu_Visualizing_the_Resilience_of_Deep_Convolutional_Network_Interpretations_CVPRW_2019_paper.pdf)

|2019|ICCV|[U-CAM: Visual Explanation using Uncertainty based Class Activation Maps](http://openaccess.thecvf.com/content_ICCV_2019/papers/Patro_U-CAM_Visual_Explanation_Using_Uncertainty_Based_Class_Activation_Maps_ICCV_2019_paper.pdf)

|2019|ICCV|[Towards Interpretable Face Recognition](https://arxiv.org/pdf/1805.00611.pdf)

|2019|ICCV|[Taking a HINT: Leveraging Explanations to Make Vision and Language Models More Grounded](http://openaccess.thecvf.com/content_ICCV_2019/papers/Selvaraju_Taking_a_HINT_Leveraging_Explanations_to_Make_Vision_and_Language_ICCV_2019_paper.pdf)

|2019|ICCV|[Understanding Deep Networks via Extremal Perturbations and Smooth Masks](http://openaccess.thecvf.com/content_ICCV_2019/papers/Fong_Understanding_Deep_Networks_via_Extremal_Perturbations_and_Smooth_Masks_ICCV_2019_paper.pdf)

|2019|ICCV|[Explaining Neural Networks Semantically and Quantitatively](http://openaccess.thecvf.com/content_ICCV_2019/papers/Chen_Explaining_Neural_Networks_Semantically_and_Quantitatively_ICCV_2019_paper.pdf)

|2019|ICLR|[Hierarchical interpretations for neural network predictions](https://arxiv.org/pdf/1806.05337.pdf)

|2019|ICLR|[How Important Is a Neuron?](https://arxiv.org/pdf/1805.12233.pdf)

|2019|ICLR|[Visual Explanation by Interpretation: Improving Visual Feedback Capabilities of Deep Neural Networks](https://arxiv.org/pdf/1712.06302.pdf)

|2018|ICML|[Extracting Automata from Recurrent Neural Networks Using Queries and Counterexamples](https://arxiv.org/pdf/1711.09576.pdf)

|2019|ICML|[Towards A Deep and Unified Understanding of Deep Neural Models in NLP](http://proceedings.mlr.press/v97/guan19a/guan19a.pdf)

|2019|AISTATS|[Interpreting black box predictions using Fisher kernels](https://arxiv.org/pdf/1810.10118.pdf)

|2019|ACMFAT|[Explaining explanations in AI]

|2019|AAAI|[Interpretation of neural networks is fragile](https://machine-learning-and-security.github.io/papers/mlsec17_paper_18.pdf)

|2019|AAAI|[Classifier-agnostic saliency map extraction](https://arxiv.org/pdf/1805.08249.pdf)

|2019|AAAI|[Can You Explain That? Lucid Explanations Help Human-AI Collaborative Image Retrieval](https://arxiv.org/pdf/1904.03285.pdf)

|2019|AAAIW|[Unsupervised Learning of Neural Networks to Explain Neural Networks](https://arxiv.org/pdf/1805.07468.pdf)

|2019|AAAIW|[Network Transplanting](https://arxiv.org/pdf/1804.10272.pdf)

|2019|CSUR|[A Survey of Methods for Explaining Black Box Models](https://kdd.isti.cnr.it/sites/kdd.isti.cnr.it/files/csur2018survey.pdf)

|2019|JVCIR|[Interpretable convolutional neural networks via feedforward design](https://arxiv.org/pdf/1810.02786)

|2019|ExplainAI|[The (Un)reliability of saliency methods](https://arxiv.org/pdf/1711.00867.pdf)

|2019|ACL|[Attention is not Explanation](https://arxiv.org/pdf/1902.10186.pdf)

|2019|EMNLP|[Attention is not not Explanation](https://arxiv.org/pdf/1908.04626.pdf)

|2019|arxiv|[Attention Interpretability Across NLP Tasks](https://arxiv.org/pdf/1909.11218.pdf)

|2019|arxiv|[Interpretable CNNs](https://arxiv.org/pdf/1901.02413.pdf)

|2018|ICLR|[Towards better understanding of gradient-based attribution methods for deep neural networks](https://arxiv.org/pdf/1711.06104.pdf)

|2018|ICLR|[Learning how to explain neural networks: PatternNet and PatternAttribution](https://arxiv.org/pdf/1705.05598.pdf)

|2018|ICLR|[On the importance of single directions for generalization](https://arxiv.org/pdf/1803.06959.pdf)

|2018|ICLR|[Detecting statistical interactions from neural network weights](https://arxiv.org/pdf/1705.04977.pdf)

|2018|ICLR|[Interpretable counting for visual question answering](https://arxiv.org/pdf/1712.08697.pdf)

|2018|CVPR|[Interpretable Convolutional Neural Networks](http://openaccess.thecvf.com/content_cvpr_2018/papers/Zhang_Interpretable_Convolutional_Neural_CVPR_2018_paper.pdf)

|2018|CVPR|[Tell me where to look: Guided attention inference network](http://openaccess.thecvf.com/content_cvpr_2018/papers/Li_Tell_Me_Where_CVPR_2018_paper.pdf)

|2018|CVPR|[Multimodal Explanations: Justifying Decisions and Pointing to the Evidence](http://openaccess.thecvf.com/content_cvpr_2018/papers/Park_Multimodal_Explanations_Justifying_CVPR_2018_paper.pdf)

|2018|CVPR|[Transparency by design: Closing the gap between performance and interpretability in visual reasoning](http://openaccess.thecvf.com/content_cvpr_2018/papers/Mascharka_Transparency_by_Design_CVPR_2018_paper.pdf)

|2018|CVPR|[Net2Vec: Quantifying and explaining how concepts are encoded by filters in deep neural networks](http://openaccess.thecvf.com/content_cvpr_2018/papers/Fong_Net2Vec_Quantifying_and_CVPR_2018_paper.pdf)

|2018|CVPR|[What have we learned from deep representations for action recognition?](http://openaccess.thecvf.com/content_cvpr_2018/papers/Feichtenhofer_What_Have_We_CVPR_2018_paper.pdf)

|2018|CVPR|[Learning to Act Properly: Predicting and Explaining Affordances from Images](http://openaccess.thecvf.com/content_cvpr_2018/papers/Chuang_Learning_to_Act_CVPR_2018_paper.pdf)

|2018|CVPR|[Teaching Categories to Human Learners with Visual Explanations](http://openaccess.thecvf.com/content_cvpr_2018/papers/Aodha_Teaching_Categories_to_CVPR_2018_paper.pdf)|64|[PyTorch](https://github.com/macaodha/explain_teach)|

|2018|CVPR|[What do deep networks like to see?](http://openaccess.thecvf.com/content_cvpr_2018/papers/Palacio_What_Do_Deep_CVPR_2018_paper.pdf)

|2018|CVPR|[Interpret Neural Networks by Identifying Critical Data Routing Paths](http://openaccess.thecvf.com/content_cvpr_2018/papers/Wang_Interpret_Neural_Networks_CVPR_2018_paper.pdf)

|2018|ECCV|[Deep clustering for unsupervised learning of visual features](http://openaccess.thecvf.com/content_ECCV_2018/papers/Mathilde_Caron_Deep_Clustering_for_ECCV_2018_paper.pdf)

|2018|ECCV|[Explainable neural computation via stack neural module networks](http://openaccess.thecvf.com/content_ECCV_2018/papers/Ronghang_Hu_Explainable_Neural_Computation_ECCV_2018_paper.pdf)

|2018|ECCV|[Grounding visual explanations](http://openaccess.thecvf.com/content_ECCV_2018/papers/Lisa_Anne_Hendricks_Grounding_Visual_Explanations_ECCV_2018_paper.pdf)

|2018|ECCV|[Textual explanations for self-driving vehicles](http://openaccess.thecvf.com/content_ECCV_2018/papers/Jinkyu_Kim_Textual_Explanations_for_ECCV_2018_paper.pdf)

|2018|ECCV|[Interpretable basis decomposition for visual explanation](http://openaccess.thecvf.com/content_ECCV_2018/papers/Antonio_Torralba_Interpretable_Basis_Decomposition_ECCV_2018_paper.pdf)

|2018|ECCV|[ConvNets and ImageNet beyond accuracy: Understanding mistakes and uncovering biases](http://openaccess.thecvf.com/content_ECCV_2018/papers/Pierre_Stock_ConvNets_and_ImageNet_ECCV_2018_paper.pdf)

|2018|ECCV|[VQA-E: Explaining, elaborating, and enhancing your answers for visual questions](http://openaccess.thecvf.com/content_ECCV_2018/papers/Qing_Li_VQA-E_Explaining_Elaborating_ECCV_2018_paper.pdf)

|2018|ECCV|[Choose Your Neuron: Incorporating Domain Knowledge through Neuron-Importance](http://openaccess.thecvf.com/content_ECCV_2018/papers/Ramprasaath_Ramasamy_Selvaraju_Choose_Your_Neuron_ECCV_2018_paper.pdf)

|2018|ECCV|[Diverse feature visualizations reveal invariances in early layers of deep neural networks](http://openaccess.thecvf.com/content_ECCV_2018/papers/Santiago_Cadena_Diverse_feature_visualizations_ECCV_2018_paper.pdf)

|2018|ECCV|[ExplainGAN: Model Explanation via Decision Boundary Crossing Transformations](http://openaccess.thecvf.com/content_ECCV_2018/papers/Nathan_Silberman_ExplainGAN_Model_Explanation_ECCV_2018_paper.pdf)

|2018|ICML|[Interpretability beyond feature attribution: Quantitative testing with concept activation vectors](https://arxiv.org/pdf/1711.11279.pdf)

|2018|ICML|[Learning to explain: An information-theoretic perspective on model interpretation](https://arxiv.org/pdf/1802.07814.pdf)

|2018|ACL|[Did the Model Understand the Question?](https://arxiv.org/pdf/1805.05492.pdf)

|2018|FITEE|[Visual interpretability for deep learning: a survey](https://arxiv.org/pdf/1802.00614)

|2018|NeurIPS|[Sanity Checks for Saliency Maps](http://papers.nips.cc/paper/8160-sanity-checks-for-saliency-maps.pdf)

|2018|NeurIPS|[Explanations based on the missing: Towards contrastive explanations with pertinent negatives](http://papers.nips.cc/paper/7340-explanations-based-on-the-missing-towards-contrastive-explanations-with-pertinent-negatives.pdf)

|2018|NeurIPS|[Towards robust interpretability with self-explaining neural networks](http://papers.nips.cc/paper/8003-towards-robust-interpretability-with-self-explaining-neural-networks.pdf)

|2018|NeurIPS|[Attacks meet interpretability: Attribute-steered detection of adversarial samples](https://papers.nips.cc/paper/7998-attacks-meet-interpretability-attribute-steered-detection-of-adversarial-samples.pdf)

|2018|NeurIPS|[DeepPINK: reproducible feature selection in deep neural networks](https://papers.nips.cc/paper/8085-deeppink-reproducible-feature-selection-in-deep-neural-networks.pdf)

|2018|NeurIPS|[Representer point selection for explaining deep neural networks](https://papers.nips.cc/paper/8141-representer-point-selection-for-explaining-deep-neural-networks.pdf)

|2018|NeurIPS Workshop|[Interpretable convolutional filters with SincNet](https://arxiv.org/pdf/1811.09725)|97|

|2018|AAAI|[Anchors: High-precision model-agnostic explanations](https://dm-gatech.github.io/CS8803-Fall2018-DML-Papers/anchors.pdf)

|2018|AAAI|[Improving the adversarial robustness and interpretability of deep neural networks by regularizing their input gradients](https://asross.github.io/publications/RossDoshiVelez2018.pdf)

|2018|AAAI|[Deep learning for case-based reasoning through prototypes: A neural network that explains its predictions](https://arxiv.org/pdf/1710.04806.pdf)

|2018|AAAI|[Interpreting CNN Knowledge via an Explanatory Graph](https://arxiv.org/pdf/1708.01785.pdf)

|2018|AAAI|[Examining CNN Representations with respect to Dataset Bias](http://www.stat.ucla.edu/~sczhu/papers/Conf_2018/AAAI_2018_DNN_Learning_Bias.pdf)

|2018|WACV|[Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks](https://www.researchgate.net/profile/Aditya_Chattopadhyay2/publication/320727679_Grad-CAM_Generalized_Gradient-based_Visual_Explanations_for_Deep_Convolutional_Networks/links/5a3aa2e5a6fdcc3889bd04cb/Grad-CAM-Generalized-Gradient-based-Visual-Explanations-for-Deep-Convolutional-Networks.pdf)

|2018|IJCV|[Top-down neural attention by excitation backprop](https://arxiv.org/pdf/1608.00507)

|2018|TPAMI|[Interpreting deep visual representations via network dissection](https://arxiv.org/pdf/1711.05611)

|2018|DSP|[Methods for interpreting and understanding deep neural networks](http://iphome.hhi.de/samek/pdf/MonDSP18.pdf)

|2018|Access|[Peeking inside the black-box: A survey on Explainable Artificial Intelligence (XAI)](https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=8466590)

|2018|JAIR|[Learning Explanatory Rules from Noisy Data](https://www.ijcai.org/Proceedings/2018/0792.pdf)

|2018|MIPRO|[Explainable artificial intelligence: A survey](https://www.researchgate.net/profile/Mario_Brcic/publication/325398586_Explainable_Artificial_Intelligence_A_Survey/links/5b0bec90a6fdcc8c2534d673/Explainable-Artificial-Intelligence-A-Survey.pdf)

|2018|BMVC|[RISE: Randomized input sampling for explanation of black-box models](https://arxiv.org/pdf/1806.07421.pdf)

|2018|arxiv|[Distill-and-Compare: Auditing Black-Box Models Using Transparent Model Distillation](https://arxiv.org/pdf/1710.06169.pdf)

|2018|arxiv|[Manipulating and measuring model interpretability](https://arxiv.org/pdf/1802.07810.pdf)

|2018|arxiv|[How convolutional neural network see the world-A survey of convolutional neural network visualization methods](https://arxiv.org/pdf/1804.11191.pdf)

|2018|arxiv|[Revisiting the importance of individual units in cnns via ablation](https://arxiv.org/pdf/1806.02891.pdf)

|2018|arxiv|[Computationally Efficient Measures of Internal Neuron Importance](https://arxiv.org/pdf/1807.09946.pdf)

|2017|ICML|[Understanding Black-box Predictions via Influence Functions](https://dm-gatech.github.io/CS8803-Fall2018-DML-Papers/influence-functions.pdf)

|2017|ICML|[Axiomatic attribution for deep networks](https://mit6874.github.io/assets/misc/sundararajan.pdf)

|2017|ICML|[Learning Important Features Through Propagating Activation Differences](https://mit6874.github.io/assets/misc/shrikumar.pdf)

|2017|ICLR|[Visualizing deep neural network decisions: Prediction difference analysis](https://arxiv.org/pdf/1702.04595.pdf)

|2017|ICLR|[Exploring LOTS in Deep Neural Networks](https://openreview.net/pdf?id=SkCILwqex)

|2017|NeurIPS|[A Unified Approach to Interpreting Model Predictions](http://papers.nips.cc/paper/7062-a-unified-approach-to-interpreting-model-predictions.pdf)

|2017|NeurIPS|[Real time image saliency for black box classifiers](https://papers.nips.cc/paper/7272-real-time-image-saliency-for-black-box-classifiers.pdf)

|2017|NeurIPS|[SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability](http://papers.nips.cc/paper/7188-svcca-singular-vector-canonical-correlation-analysis-for-deep-learning-dynamics-and-interpretability.pdf)

|2017|CVPR|[Mining Object Parts from CNNs via Active Question-Answering](http://openaccess.thecvf.com/content_cvpr_2017/papers/Zhang_Mining_Object_Parts_CVPR_2017_paper.pdf)

|2017|CVPR|[Network dissection: Quantifying interpretability of deep visual representations](http://openaccess.thecvf.com/content_cvpr_2017/papers/Bau_Network_Dissection_Quantifying_CVPR_2017_paper.pdf)

|2017|CVPR|[Improving Interpretability of Deep Neural Networks with Semantic Information](http://openaccess.thecvf.com/content_cvpr_2017/papers/Dong_Improving_Interpretability_of_CVPR_2017_paper.pdf)

|2017|CVPR|[MDNet: A Semantically and Visually Interpretable Medical Image Diagnosis Network](http://openaccess.thecvf.com/content_cvpr_2017/papers/Zhang_MDNet_A_Semantically_CVPR_2017_paper.pdf)

|2017|CVPR|[Making the V in VQA matter: Elevating the role of image understanding in Visual Question Answering](http://openaccess.thecvf.com/content_cvpr_2017/papers/Goyal_Making_the_v_CVPR_2017_paper.pdf)

|2017|CVPR|[Knowing when to look: Adaptive attention via a visual sentinel for image captioning](http://openaccess.thecvf.com/content_cvpr_2017/papers/Lu_Knowing_When_to_CVPR_2017_paper.pdf)

|2017|CVPRW|[Interpretable 3D human action analysis with temporal convolutional networks](http://openaccess.thecvf.com/content_cvpr_2017_workshops/w20/papers/Kim_Interpretable_3D_Human_CVPR_2017_paper.pdf)

|2017|ICCV|[Grad-CAM: Visual explanations from deep networks via gradient-based localization](http://openaccess.thecvf.com/content_ICCV_2017/papers/Selvaraju_Grad-CAM_Visual_Explanations_ICCV_2017_paper.pdf)

|2017|ICCV|[Interpretable Explanations of Black Boxes by Meaningful Perturbation](http://openaccess.thecvf.com/content_ICCV_2017/papers/Fong_Interpretable_Explanations_of_ICCV_2017_paper.pdf)

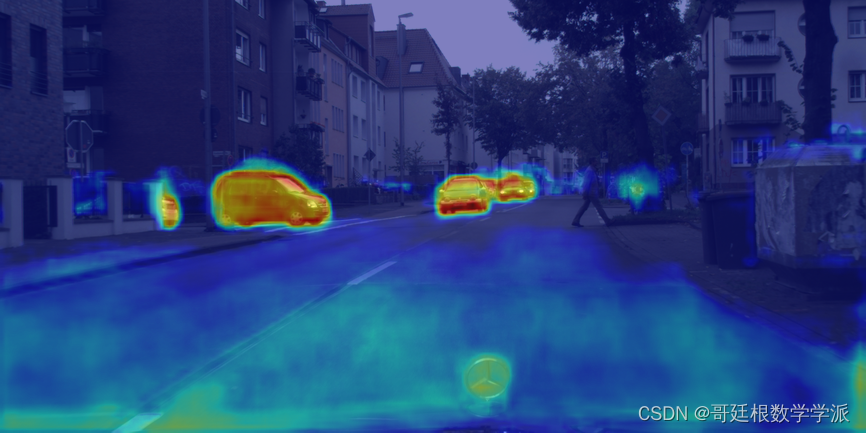

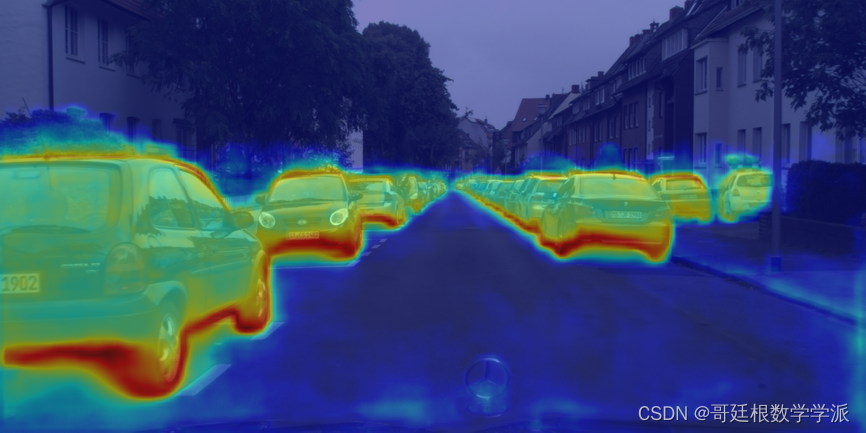

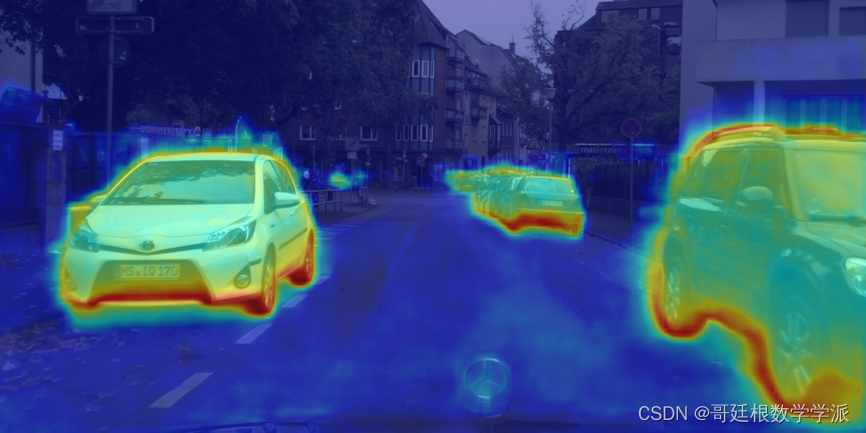

|2017|ICCV|[Interpretable Learning for Self-Driving Cars by Visualizing Causal Attention](http://openaccess.thecvf.com/content_ICCV_2017/papers/Kim_Interpretable_Learning_for_ICCV_2017_paper.pdf)

|2017|ICCVW|[Understanding and comparing deep neural networks for age and gender classification](http://openaccess.thecvf.com/content_ICCV_2017_workshops/papers/w23/Lapuschkin_Understanding_and_Comparing_ICCV_2017_paper.pdf)

|2017|ICCV|[Learning to disambiguate by asking discriminative questions](http://openaccess.thecvf.com/content_ICCV_2017/papers/Li_Learning_to_Disambiguate_ICCV_2017_paper.pdf)

|2017|IJCAI|[Right for the right reasons: Training differentiable models by constraining their explanations](https://arxiv.org/pdf/1703.03717.pdf)

|2016|ICML|[Understanding and improving convolutional neural networks via concatenated rectified linear units](http://www.jmlr.org/proceedings/papers/v48/shang16.pdf)

|2017|AAAI|[Growing Interpretable Part Graphs on ConvNets via Multi-Shot Learning](https://arxiv.org/pdf/1611.04246.pdf)

|2017|ACL|[Visualizing and Understanding Neural Machine Translation](https://www.aclweb.org/anthology/P17-1106.pdf)

|2017|EMNLP|[A causal framework for explaining the predictions of black-box sequence-to-sequence models](https://arxiv.org/pdf/1707.01943.pdf)

|2017|CVPRW|[Looking under the hood: Deep neural network visualization to interpret whole-slide image analysis outcomes for colorectal polyps](http://openaccess.thecvf.com/content_cvpr_2017_workshops/w8/papers/Korbar_Looking_Under_the_CVPR_2017_paper.pdf)

|2017|survey|[Interpretability of deep learning models: a survey of results](https://discovery.ucl.ac.uk/id/eprint/10059575/1/Chakraborty_Interpretability%20of%20deep%20learning%20models.pdf)

|2017|arxiv|[SmoothGrad: removing noise by adding noise](https://arxiv.org/pdf/1706.03825.pdf)

|2017|arxiv|[Interpretable & explorable approximations of black box models](https://arxiv.org/pdf/1707.01154.pdf)

|2017|arxiv|[Distilling a neural network into a soft decision tree](https://arxiv.org/pdf/1711.09784.pdf)

|2017|arxiv|[Towards interpretable deep neural networks by leveraging adversarial examples](https://arxiv.org/pdf/1708.05493.pdf)

|2017|arxiv|[Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models](https://arxiv.org/pdf/1708.08296.pdf)

|2017|arxiv|[Contextual Explanation Networks](https://arxiv.org/pdf/1705.10301.pdf)

|2017|arxiv|[Challenges for transparency](https://arxiv.org/pdf/1708.01870.pdf)

|2017|ACMSOSP|[DeepXplore: Automated whitebox testing of deep learning systems](https://machine-learning-and-security.github.io/papers/mlsec17_paper_1.pdf)

|2017|CEURW|[What does explainable AI really mean? A new conceptualization of perspectives](https://arxiv.org/pdf/1710.00794.pdf)

|2017|TVCG|[ActiVis: Visual Exploration of Industry-Scale Deep Neural Network Models](https://arxiv.org/pdf/1704.01942.pdf)

|2016|NeurIPS|[Synthesizing the preferred inputs for neurons in neural networks via deep generator networks](http://papers.nips.cc/paper/6519-synthesizing-the-preferred-inputs-for-neurons-in-neural-networks-via-deep-generator-networks.pdf)

|2016|NeurIPS|[Understanding the effective receptive field in deep convolutional neural networks](https://papers.nips.cc/paper/6203-understanding-the-effective-receptive-field-in-deep-convolutional-neural-networks.pdf)
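
The list itself contains no code. As a hedged illustration of one technique it collects, here is a minimal sketch of Integrated Gradients ("Axiomatic attribution for deep networks", ICML 2017, in the list above). It is not the authors' implementation: `toy_model`, `toy_grad`, `WEIGHTS`, the all-zero baseline and `steps=200` are hypothetical choices for illustration, a hand-derived gradient of a tiny function stands in for a real network's autograd, and the path integral is approximated with a midpoint Riemann sum.

```python
import math
from typing import Callable, List

Vector = List[float]

# Hypothetical toy "model" weights (an assumption for illustration, not from any paper).
WEIGHTS: Vector = [1.0, -2.0, 0.5]


def toy_model(x: Vector) -> float:
    """Toy differentiable scorer: f(x) = tanh(w . x) + 0.5 * x[0] * x[1]."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return math.tanh(z) + 0.5 * x[0] * x[1]


def toy_grad(x: Vector) -> Vector:
    """Hand-derived gradient of toy_model (stands in for autograd on a real network)."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    dtanh = 1.0 - math.tanh(z) ** 2  # derivative of tanh evaluated at z
    g = [dtanh * w for w in WEIGHTS]
    g[0] += 0.5 * x[1]  # d/dx0 of 0.5 * x0 * x1
    g[1] += 0.5 * x[0]  # d/dx1 of 0.5 * x0 * x1
    return g


def integrated_gradients(
    grad_fn: Callable[[Vector], Vector],
    x: Vector,
    baseline: Vector,
    steps: int = 200,
) -> Vector:
    """Approximate IG_i = (x_i - b_i) * integral_0^1 dF/dx_i(b + a * (x - b)) da
    with a midpoint Riemann sum over `steps` points on the straight-line path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, gi in enumerate(grad_fn(point)):
            total[i] += gi
    # Average gradient along the path, scaled by the input-baseline difference.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


if __name__ == "__main__":
    x = [0.8, 0.3, -1.2]
    baseline = [0.0, 0.0, 0.0]
    attributions = integrated_gradients(toy_grad, x, baseline)
    print("attributions:", attributions)
    # Completeness check: the attributions should sum to f(x) - f(baseline).
    print(sum(attributions), toy_model(x) - toy_model(baseline))
```

Because the path is a straight line, the attributions should sum to `toy_model(x) - toy_model(baseline)` up to discretization error (the paper's completeness property), which makes a cheap sanity check when swapping in a real model's gradient. The baseline is a design choice: the all-zero vector here plays the role of a black image, and a different baseline yields different attributions.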

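Zhihu inquiries: 哥廷根数学学派

Algorithm code: mbd.pub/o/GeBENHAGEN

Specialties: modern signal processing (improved wavelet analysis, improved variational mode decomposition, improved empirical wavelet transform, improved symplectic geometry mode decomposition, etc.), improved machine learning, improved deep learning, mechanical fault diagnosis, and improved time-series analysis (financial signals, ECG signals, vibration signals, etc.).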
