
[Advanced Ops Knowledge] Cluster Architecture: Nginx High Availability with Keepalived

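High availability (HA) means two machines run exactly the same business system: when one goes down, the other takes over quickly and users do not notice. Hardware HA is usually done with F5; software HA usually uses keepalived. Keepalived is built on VRRP (Virtual Router Redundancy Protocol), which exists mainly to eliminate single points of failure.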

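How VRRP Works

Take the office router as an example: if it fails, the gateway can no longer forward packets and nobody can reach the Internet. What do we do?

The usual answer is to add a second router. But after the primary router fails, users have to point their gateway at the backup router by hand, which is painful when there are many users. More questions follow: once the primary router is repaired, do we switch back to it? When the primary fails, could we simply give the backup router the primary's gateway address? And so on.

In fact, simply changing the gateway configuration does not work. The first time a PC talks to the gateway, it sends an ARP broadcast to learn the MAC address behind the gateway IP and stores the mapping in its ARP cache; from then on it forwards packets according to that cache. Even if we move the IP address to the backup router, MAC addresses are unique to each device, so the PC keeps sending frames to the primary router. Only when the ARP cache entry expires and the PC broadcasts ARP again does it learn the backup router's MAC address.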

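This is where VRRP comes in. In software or hardware, it places a virtual MAC address (VMAC) and a virtual IP address (VIP) in front of the primary and backup routers. The PC sends its requests to the VIP and only records the VIP and VMAC in its ARP cache, so it does not matter whether the primary or the backup router is currently handling the traffic. (In standard VRRP the virtual MAC is 00-00-5E-00-01-{VRID}. Keepalived, by default, binds the VIP to the real NIC's MAC address instead and sends gratuitous ARP when a node becomes master so that clients update their caches; that is why the ARP test later in this article shows the MAC address changing.)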
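For IPv4, the VRRP specification fixes the virtual MAC as 00:00:5e:00:01 followed by the virtual router ID as the last octet. A minimal sketch of that derivation, for a VRID such as the virtual_router_id 50 used in the configs below; vrrp_vmac is a hypothetical helper written for illustration, not part of keepalived:

```shell
#!/bin/sh
# vrrp_vmac VRID: print the IPv4 VRRP virtual MAC for a virtual router ID (1-255).
# Hypothetical helper; the 00:00:5e:00:01 prefix comes from the VRRP RFCs.
vrrp_vmac() {
  vrid=$1
  if [ "$vrid" -lt 1 ] || [ "$vrid" -gt 255 ]; then
    echo "VRID must be between 1 and 255" >&2
    return 1
  fi
  # Last octet is the VRID in two-digit hex.
  printf '00:00:5e:00:01:%02x\n' "$vrid"
}

vrrp_vmac 50   # prints 00:00:5e:00:01:32
```

Keepalived only uses such a MAC when use_vmac is enabled in the vrrp_instance; the configs in this article do not enable it.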

Keepalived Core Concepts

Before working with Keepalived, we need to understand its core concepts.

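1. How is it decided which node is the master and which is the backup? It works like an election: the node with the highest priority becomes master (if priorities are equal, VRRP picks the node with the higher IP address). Administrators steer the outcome by setting the priority.

2. If the master fails, the backup takes over automatically. When the master recovers, in preemptive mode it takes the VIP back automatically, like seizing power again; in non-preemptive mode the recovered master does not take over.

3. The master and backup belong to one group. While healthy, the master sends a heartbeat (VRRP advertisement) to the group once per second (the interval is configurable) to announce that it is alive. If the backup stops receiving heartbeats, it takes over automatically. If the two nodes cannot receive each other's heartbeats, both consider themselves master, which is called split brain.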
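The election rule in point 1 and the takeover delay in point 3 can be sketched in shell. This is a simplified model, not keepalived's code: ip_to_int, elect_master and master_down_interval are hypothetical helpers. The down interval uses the VRRPv2 formula from RFC 3768, 3 * advert_int + (256 - priority) / 256 seconds, which is roughly how long a backup waits without heartbeats before declaring itself master.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: convert a dotted IPv4 address to an integer for comparison.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# elect_master: read "name priority ip" lines on stdin and print the winner.
# Highest priority wins; on a tie, the higher IP address wins.
elect_master() {
  best_name= best_prio=-1 best_ip=-1
  while read -r name prio ip; do
    [ -n "$name" ] || continue
    ipn=$(ip_to_int "$ip")
    if [ "$prio" -gt "$best_prio" ] ||
       { [ "$prio" -eq "$best_prio" ] && [ "$ipn" -gt "$best_ip" ]; }; then
      best_name=$name best_prio=$prio best_ip=$ipn
    fi
  done
  echo "$best_name"
}

# master_down_interval ADVERT_INT PRIORITY: seconds a backup waits (VRRPv2).
master_down_interval() {
  awk -v a="$1" -v p="$2" 'BEGIN { printf "%.3f\n", 3 * a + (256 - p) / 256 }'
}

printf 'LB01 150 10.0.0.5\nLB02 100 10.0.0.6\n' | elect_master   # prints LB01
master_down_interval 1 100                                       # prints 3.609
```

With the settings used below (advert_int 1, backup priority 100), LB02 should take over a little over three seconds after LB01 stops advertising.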

Installing and Configuring Keepalived

1. Prepare two virtual machines, LB01 (10.0.0.5) and LB02 (10.0.0.6).

2. Install keepalived on both hosts.

[root@LB01 ~]# yum -y install keepalived

[root@LB02 ~]# yum -y install keepalived

3. Configure LB01

[root@LB01 ~]# rpm -qc keepalived #list keepalived's configuration files

/etc/keepalived/keepalived.conf

/etc/sysconfig/keepalived

[root@LB01 ~]# cat /etc/keepalived/keepalived.conf

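global_defs { #global settings

router_id LB01 #node identity (name)

}

vrrp_instance VI_1 {

state MASTER #initial role

interface eth0 #NIC the VIP is bound to

virtual_router_id 50 #virtual router ID (must match on both nodes)

priority 150 #election priority

advert_int 1 #advertisement (heartbeat) interval in seconds

authentication { #authentication

auth_type PASS #authentication type

auth_pass 1111 #authentication password

}

virtual_ipaddress {

10.0.0.3 #virtual IP (VIP)

}

}

4. Configure LB02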

[root@LB02 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LB02 #difference 1 from the master node: unique identity

}

vrrp_instance VI_1 {

state BACKUP #difference 2 from the master node: role

interface eth0

virtual_router_id 50

priority 100 #difference 3 from the master node: election priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

}

5. Start keepalived on both nodes

[root@LB01 ~]# systemctl start keepalived

[root@LB01 ~]# systemctl enable keepalived

[root@LB02 ~]# systemctl start keepalived

[root@LB02 ~]# systemctl enable keepalived

Keepalived: Testing Preemptive and Non-Preemptive Modes

1. LB01 has a higher priority than LB02, so the VIP sits on LB01.

[root@LB01 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

2. Stop keepalived on LB01: LB02 takes over automatically.

[root@LB01 ~]# systemctl stop keepalived

[root@LB01 ~]# ip add | grep 10.0.0.3

[root@LB02 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

3. Start keepalived on LB01 again: LB01 preempts and takes the VIP back.

[root@LB01 ~]# systemctl start keepalived

[root@LB01 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

[root@LB02 ~]# ip add | grep 10.0.0.3

4. Configure non-preemptive mode

Both nodes must have state set to BACKUP, both must include nopreempt, and one node's priority must be higher than the other's.
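Because nopreempt only works when the initial state is BACKUP (keepalived's documentation states this requirement), forgetting one of the two settings silently leaves a node preemptive. Below is a minimal pre-flight sketch; check_nopreempt is a hypothetical helper that assumes a single vrrp_instance per file and treats commented-out lines such as #nopreempt as absent.

```shell
#!/bin/sh
# check_nopreempt FILE: report whether nopreempt can take effect in FILE.
# Assumes one vrrp_instance per file; lines starting with '#' never match.
check_nopreempt() {
  awk '
    $1 == "state"     { state = $2 }   # e.g. "state BACKUP #comment" -> BACKUP
    $1 == "nopreempt" { np = 1 }
    END {
      if (!np)                     print "preemptive: nopreempt not set"
      else if (state != "BACKUP")  print "invalid: nopreempt requires state BACKUP"
      else                         print "non-preemptive"
    }' "$1"
}
```

Run it on each node, for example check_nopreempt /etc/keepalived/keepalived.conf, and expect "non-preemptive" on both LB01 and LB02.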

[root@LB01 ~]# cat /etc/keepalived/keepalived.conf

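global_defs { #global settings

router_id LB01 #node identity (name)

}

vrrp_instance VI_1 {

state BACKUP #initial role (must be BACKUP for nopreempt)

nopreempt

interface eth0 #NIC the VIP is bound to

virtual_router_id 50 #virtual router ID

priority 150 #election priority

advert_int 1 #advertisement (heartbeat) interval in seconds

authentication { #authentication

auth_type PASS #authentication type

auth_pass 1111 #authentication password

}

virtual_ipaddress {

10.0.0.3 #virtual IP (VIP)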

}

}

[root@LB01 ~]# systemctl restart keepalived

[root@LB02 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LB02

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface eth0

virtual_router_id 50

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

}

[root@LB02 ~]# systemctl restart keepalived

5. Use ARP on Windows to verify whether the MAC address switches

[root@LB01 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

On Windows, point the local hosts file at 10.0.0.3 and open blog.koten.com in a browser (the domain that LB01 proxies to Web01).

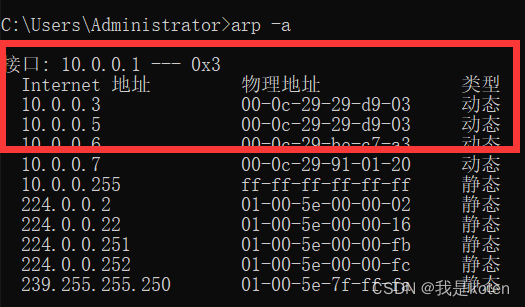

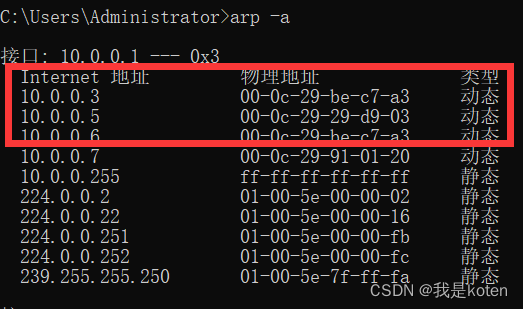

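Press WIN+R to open the Run dialog, type cmd to open a command prompt, and run arp -a to view the ARP cache. At this point the physical address listed for 10.0.0.3 matches the MAC address of LB01's interface that holds 10.0.0.3.

Stop keepalived on node 1 (LB01):

[root@LB01 ~]# systemctl stop keepalived

Node 2 (LB02) takes over the VIP: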

[root@LB02 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

Check the MAC address again: the physical address for 10.0.0.3 now matches LB02's MAC address.
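The same check can be made from a Linux client, whose ARP cache is shown by ip neigh show. The cached MAC changes right away rather than after the entry expires because keepalived sends gratuitous ARP when a node becomes master. The helper below is a hypothetical sketch: cached_mac extracts the MAC cached for a given IP, assuming the usual "IP dev IFACE lladdr MAC STATE" layout of ip neigh output.

```shell
#!/bin/sh
# cached_mac IP: print the MAC address cached for IP, reading
# "ip neigh show" output on stdin (format: IP dev IFACE lladdr MAC STATE).
cached_mac() {
  awk -v ip="$1" '$1 == ip {
    for (i = 2; i < NF; i++)
      if ($i == "lladdr") { print $(i + 1); exit }
  }'
}

# On a Linux client, run before and after stopping keepalived on LB01:
# ip neigh show | cached_mac 10.0.0.3
```

Comparing the two results against the MAC of eth0 on LB01 and LB02 mirrors the arp -a comparison above.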

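Keepalived Split-Brain Failure

For some reason, the two keepalived servers cannot detect each other's heartbeats within the configured time, even though both servers are otherwise working normally.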

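Common causes

1. Network faults, such as a loose network cable

2. Server hardware failures that crash the machine

3. firewalld is running on both the master and the backup, dropping the VRRP advertisements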
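For cause 3, a common alternative to disabling firewalld is to let VRRP (IP protocol 112) through on both nodes. A minimal sketch, assuming firewalld's default zone and the rich-rule syntax documented by Red Hat for keepalived; allow_vrrp is a hypothetical wrapper, and setting RUN=echo prints the commands instead of running them.

```shell
#!/bin/sh
# allow_vrrp: permanently accept VRRP advertisements in firewalld, then reload.
# Run on both LB01 and LB02. With RUN=echo the commands are only printed.
allow_vrrp() {
  ${RUN:-} firewall-cmd --permanent --add-rich-rule='rule protocol value="vrrp" accept'
  ${RUN:-} firewall-cmd --reload
}

# Dry run first, then apply for real:
# RUN=echo allow_vrrp
# allow_vrrp
```

The split-brain test below deliberately leaves VRRP blocked to reproduce the failure.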

Split-brain test

1. Start the firewall on both the master and the backup

[root@LB01 ~]# systemctl start firewalld

[root@LB02 ~]# systemctl start firewalld

2. Revert the configuration files to the preemptive setup

[root@LB01 ~]# vim /etc/keepalived/keepalived.conf

global_defs {

router_id LB01

}

vrrp_instance VI_1 {

state MASTER

#nopreempt

interface eth0

virtual_router_id 50

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

}

[root@LB01 ~]# systemctl restart keepalived

[root@LB02 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LB02

}

vrrp_instance VI_1 {

state BACKUP

#nopreempt

interface eth0

virtual_router_id 50

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

}

[root@LB02 ~]# systemctl restart keepalived

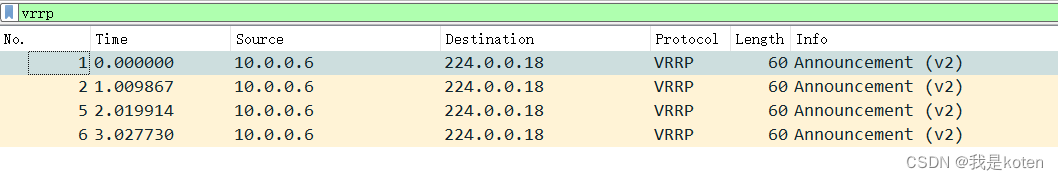

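3. Inspect the traffic with a packet capture: because each firewall drops the VRRP advertisements arriving from the other node, both LB01 and LB02 keep sending advertisements as master.

4. Check the IP addresses on LB01 and LB02: both now hold 10.0.0.3.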

[root@LB01 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

[root@LB02 ~]# ip add | grep 10.0.0.3

inet 10.0.0.3/32 scope global eth0

Split-Brain Solution

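Approach: once split brain has occurred, it is enough to stop keepalived on either node. We can automate this with a script: if ip add on both hosts shows 10.0.0.3, split brain has occurred. We write the script on LB01.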
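The detection rule can be separated from the SSH plumbing and tested on its own. This is a hypothetical sketch (VIP, vip_count and is_split_brain are illustrative names): it matches "inet 10.0.0.3/" exactly, because a plain grep 10.0.0.3 would also count addresses such as 10.0.0.30.

```shell
#!/bin/sh
VIP=10.0.0.3

# vip_count: count how many times $VIP is assigned in "ip addr" output on stdin.
# The trailing "/" in the pattern stops 10.0.0.30 from matching 10.0.0.3.
vip_count() {
  grep -cF "inet $VIP/"
}

# is_split_brain LOCAL_COUNT REMOTE_COUNT: succeed if both nodes hold the VIP.
is_split_brain() {
  [ "$1" -ge 1 ] && [ "$2" -ge 1 ]
}

# Example on LB01 (assumes passwordless SSH to LB02, set up below):
# local_n=$(ip addr | vip_count)
# remote_n=$(ssh 10.0.0.6 ip addr | vip_count)
# is_split_brain "$local_n" "$remote_n" && systemctl stop keepalived
```

If SSH to LB02 fails, remote_n comes out as 0 and the sketch leaves keepalived alone, which is the safer default.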

Set up passwordless SSH login so that LB01 can query LB02's IP addresses:

[root@LB01 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:+NyOCiY7aBX8nEPwGeNQHjTLY2EXPKU1o33LTBrm1zk root@LB01

The key's randomart image is:

+---[RSA 2048]----+

| oB.oo= |

| o+o*o= o |

| . =*+o.+ o |

| o.=..o B o . |

| = o So = E |

| . = o .. . |

| .o o . o . |

|...+ . o |

|. .. ... . |

+----[SHA256]-----+

[root@LB01 ~]#

[root@LB01 ~]# ssh-copy-id -i .ssh/id_rsa 10.0.0.6

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@10.0.0.6's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '10.0.0.6'"

and check to make sure that only the key(s) you wanted were added.

[root@LB01 ~]# ssh '10.0.0.6' ip add | grep 10.0.0.3 | wc -l #test passwordless login

1

Write and run the script:

[root@LB01 ~]# cat check_split_brain.sh

#Match "inet 10.0.0.3/" exactly so that addresses such as 10.0.0.30 are not counted

LB01_VIP_Number=`ip add | grep -c 'inet 10.0.0.3/'`

LB02_VIP_Number=`ssh '10.0.0.6' ip add | grep -c 'inet 10.0.0.3/'`

if [ $LB01_VIP_Number -eq 1 -a $LB02_VIP_Number -eq 1 ]

then

systemctl stop keepalived

fi

[root@LB01 ~]# sh check_split_brain.sh

[root@LB01 ~]# ip add | grep 10.0.0.3

[root@LB02 ~]# ip add | grep 10.0.0.3

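inet 10.0.0.3/32 scope global eth0

Keepalived and Nginx

By default Nginx listens on all IP addresses, so when the VIP floats to a node, it is as if that machine gained the VIP as an extra address, and requests to the VIP reach Nginx. However, if Nginx goes down while keepalived keeps running, keepalived does not fail over and user requests fail. We therefore need a script that checks whether Nginx is alive and, if it is down and cannot be restarted, stops keepalived so that the VIP floats to the backup server automatically.

1. Write the script and make it executable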

[root@LB01 ~]# cat check_nginx.sh

#!/bin/bash

#Count running nginx processes

nginxpid=`ps -C nginx --no-header|wc -l`

if [ $nginxpid -eq 0 ]

then

#Nginx is down: try to restart it, discarding the output

systemctl restart nginx &>/dev/null

if [ $? -ne 0 ]

then

#Restart failed: stop keepalived so the VIP floats to the backup

systemctl stop keepalived

fi

fi

[root@LB01 ~]# chmod +x check_nginx.sh

[root@LB01 ~]# ll check_nginx.sh

-rwxr-xr-x 1 root root 150 Apr 12 17:37 check_nginx.sh

2. Test the script

[root@LB02 ~]# ip add|grep 10.0.0.3 #the VIP is not on LB02 yet

[root@LB01 ~]# ip add|grep 10.0.0.3 #the VIP is currently on LB01

inet 10.0.0.3/32 scope global eth0

[root@LB01 ~]# systemctl stop nginx #stop Nginx

[root@LB01 ~]# ip add|grep 10.0.0.3 #the VIP is still on LB01, because stopping Nginx does not affect keepalived

inet 10.0.0.3/32 scope global eth0

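[root@LB01 ~]# vim /etc/nginx/nginx.conf #break the Nginx configuration so Nginx cannot restart, then check whether the VIP floats to LB02

ser nginx; #originally "user nginx;", misspelled on purpose

[root@LB01 ~]# sh check_nginx.sh #run the script

[root@LB01 ~]# ip add|grep 10.0.0.3 #the VIP is no longer on LB01

[root@LB02 ~]# ip add | grep 10.0.0.3 #the VIP has floated to LB02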

inet 10.0.0.3/32 scope global eth0

3. Call the script from the keepalived configuration

[root@LB01 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LB01

}

#Run the script every 5 seconds; the script must finish within 5 seconds, otherwise it is interrupted and run again

vrrp_script check_nginx {

script "/root/check_nginx.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER

#nopreempt

interface eth0

virtual_router_id 50

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.3

}

#track (run) the script

track_script {

check_nginx

}

}

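Note: call the script from the master's keepalived configuration. In preemptive mode, configuring it on the master alone is enough; in non-preemptive mode, both servers need the script.

I'm koten, with 10 years of ops experience, and I keep sharing practical ops content. Thanks for reading and following!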
