
Setting up k8s on an M1 Mac


Base environment

- CentOS 8
- MacBook Pro M1
- VM

To install CentOS 8 in the VM, see: MacBook M1芯片 安装Centos8 教程(无界面安装) (installing CentOS 8 headless on an M1 MacBook), by Mr_温少 on CSDN.

Steps

Reference:

MacOS M1芯片CentOS8部署搭建k8s集群 (deploying a k8s cluster on CentOS 8 on an M1 Mac), by Liu_Shihao on CSDN.

Prerequisites (on all machines)

1. Set the hostname

# Set the hostname (k8s-master, k8s-node1 or k8s-node2, depending on the machine)
hostnamectl set-hostname k8s-node2
# Add the cluster hosts entries
cat >> /etc/hosts << EOF
172.16.237.134 k8s-master
172.16.237.135 k8s-node1
172.16.237.136 k8s-node2
EOF
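Appending with `cat >>` adds duplicate lines if this step is run twice. A minimal idempotent sketch for bash or any POSIX sh; `add_host_entry` is a hypothetical helper name, not part of any tool:

```shell
# Append "IP HOSTNAME" to a hosts-style file only if HOSTNAME is not already
# present, so the step can be re-run safely on every node.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w: whole-word match, -F: fixed string, -q: no output
  if ! grep -qwF -- "$name" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage (as root, on every node):
# add_host_entry /etc/hosts 172.16.237.134 k8s-master
# add_host_entry /etc/hosts 172.16.237.135 k8s-node1
# add_host_entry /etc/hosts 172.16.237.136 k8s-node2
```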

2. Disable the firewall

systemctl stop firewalld
systemctl disable firewalld
# should print: not running
firewall-cmd --state

3. Disable SELinux

# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

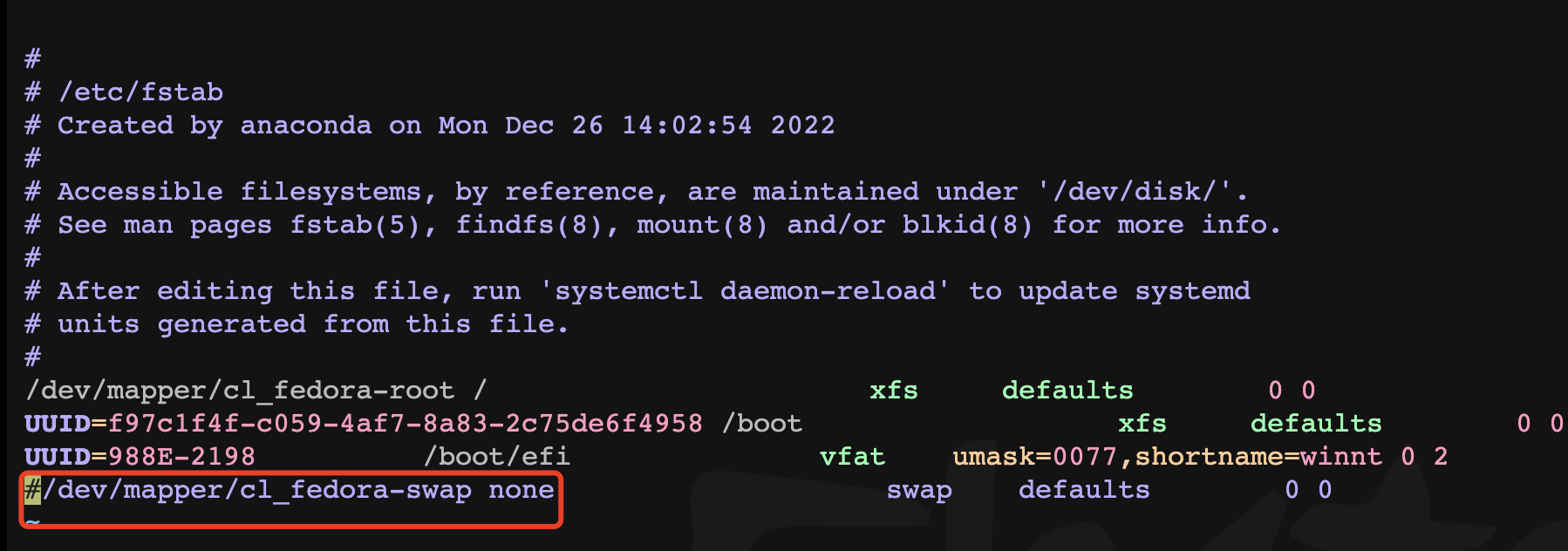

4. Disable swap

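# Temporary: after a reboot swap is back on and the kubelet will not start until swap is turned off again
swapoff -a
# Permanent: comment out the swap line in /etc/fstab
vim /etc/fstab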
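Instead of editing /etc/fstab by hand, the swap entry can be commented out with sed. A minimal sketch assuming GNU sed and the usual whitespace-separated fstab layout (third field = filesystem type); `disable_swap_in_fstab` is a hypothetical name:

```shell
# Comment out every active swap entry in an fstab-style file and keep a
# backup copy in <file>.bak. Already-commented lines are left untouched,
# so running it twice is harmless.
disable_swap_in_fstab() {
  local file="${1:-/etc/fstab}"
  cp -- "$file" "$file.bak"
  # 1st expression: skip comment lines
  # 2nd expression: prefix "#" when the third field is "swap"
  sed -r -i \
    -e '/^[[:space:]]*#/b' \
    -e '/^[^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/s/^/#/' \
    "$file"
}

# Usage (as root): swapoff -a && disable_swap_in_fstab /etc/fstab
```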

5. Let iptables see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
# modules-load.d only takes effect at boot, so load the module now as well
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sudo sysctl --system

6. Update the yum repositories

# Go to the /etc/yum.repos.d/ directory
cd /etc/yum.repos.d/
# CentOS 8 has reached end of life, so point its repos at vault.centos.org
sudo sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*
sudo sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*
# Add the Docker CE repository
curl https://download.docker.com/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo

Before installing Docker, resolve package conflicts:

# CentOS 8 ships Podman/Buildah, whose packages conflict with docker-ce
yum erase podman buildah      # or: dnf remove podman buildah
dnf clean all && dnf check && dnf check-update
yum erase buildah             # or: dnf remove buildah
dnf clean all && dnf check && dnf check-update
dnf remove -y containers-common-2:1-2.module_el8.5.0+890+6b136101
dnf clean all && dnf check && dnf check-update

7. Install Docker

yum -y install docker-ce
systemctl enable docker && systemctl start docker

8. Configure a registry mirror (and the systemd cgroup driver)

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://iedolof4.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
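A syntax error in daemon.json keeps dockerd from starting, and the kubelet and the container runtime are expected to use the same cgroup driver. A small pre-restart check, assuming python3 is installed (on a minimal CentOS 8 it may need `yum install -y python3`); `check_daemon_json` is a hypothetical helper:

```shell
# Validate a Docker daemon.json: it must be valid JSON and set the systemd
# cgroup driver. Prints "ok" on success, an error on stderr otherwise.
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  if ! python3 -m json.tool "$file" > /dev/null 2>&1; then
    echo "invalid JSON: $file" >&2
    return 1
  fi
  if ! grep -qF 'native.cgroupdriver=systemd' "$file"; then
    echo "systemd cgroup driver not set in $file" >&2
    return 1
  fi
  echo "ok"
}

# Usage: check_daemon_json && sudo systemctl restart docker
# Afterwards, docker info --format '{{.CgroupDriver}}' should print: systemd
```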

Deploy the k8s cluster

Run on all machines

9. Configure the Kubernetes yum repository

# Add the Aliyun yum repository. Check that baseurl matches your VM's architecture;
# the URL below is the one for CentOS 8 on an M1 Mac (aarch64)
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
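Because the repository path depends on the CPU architecture, it can be derived from `uname -m` (aarch64 on the M1 VMs, x86_64 on Intel machines). A sketch that only knows these two Aliyun paths; `k8s_repo_baseurl` is a hypothetical name:

```shell
# Print the Aliyun Kubernetes repo baseurl for an architecture
# (defaults to the current machine's `uname -m`).
k8s_repo_baseurl() {
  local arch="${1:-$(uname -m)}"
  case "$arch" in
    aarch64|x86_64)
      echo "https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-${arch}" ;;
    *)
      echo "unsupported architecture: $arch" >&2
      return 1 ;;
  esac
}

# Usage:
# sed -i "s|^baseurl=.*|baseurl=$(k8s_repo_baseurl)|" /etc/yum.repos.d/kubernetes.repo
```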

10. Install the components

# Install k8s (pin the version; kubeadm init below must use the same version)
yum install -y kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0
systemctl enable kubelet

Master node setup

11. Initialize the master

kubeadm init \
  --apiserver-advertise-address=172.16.237.134 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.21.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --ignore-preflight-errors=all

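- --apiserver-advertise-address: the address the API server advertises to the cluster (the master's internal IP); change it to your master node's address
- --image-repository: the default registry k8s.gcr.io is not reachable from mainland China, so the Aliyun mirror is used instead
- --kubernetes-version: the Kubernetes version; must match the version installed above
- --service-cidr: the cluster-internal virtual network for Services, which give Pods a single stable access point
- --pod-network-cidr: the Pod network; must match the CNI network plugin's YAML deployed below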
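The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. A sketch assuming the kubeadm.k8s.io/v1beta2 config API accepted by kubeadm 1.21; `write_kubeadm_config` and the file name are illustrative:

```shell
# Write a kubeadm config equivalent to the kubeadm init flags above.
# --ignore-preflight-errors is not part of the file; pass it on the command line.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.237.134   # master node IP
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16           # must match the CNI plugin's pool
EOF
}

# Usage: write_kubeadm_config && kubeadm init --config kubeadm-config.yaml
```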

When initialization finishes, kubeadm prints:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.237.134:6443 --token lndyz6.73fpex2iqyhmrnly \
    --discovery-token-ca-cert-hash sha256:07411b0de4320ce16918555f033cc42ce9aba398a6d1089ef4b442227e3b590b

12. Run on the master:

# Run on the master node
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# If you are the root user
export KUBECONFIG=/etc/kubernetes/admin.conf

The join token expires after 24 hours by default; to generate a new one together with the full join command:

kubeadm token create --print-join-command
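`--print-join-command` already includes the CA hash. If only the hash is needed (for example for a token created without that flag), it is the SHA-256 of the CA certificate's DER-encoded public key, which the Kubernetes documentation computes with an openssl pipeline. A sketch wrapping that pipeline; `ca_cert_hash` is a hypothetical name and the default path assumes the standard kubeadm layout:

```shell
# Print the value for --discovery-token-ca-cert-hash (without the "sha256:"
# prefix) computed from the cluster CA certificate.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the master: echo "sha256:$(ca_cert_hash)"
```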

Install the network plugin on the master

13. Install Calico

curl https://docs.projectcalico.org/manifests/calico.yaml -O
kubectl apply -f calico.yaml
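The pod network CIDR (10.244.0.0/16), which the CNI plugin's IP pool should match, must not overlap the service CIDR (10.96.0.0/12) or the VMs' own subnet, assumed here to be 172.16.237.0/24 from the node IPs. A minimal POSIX shell check: two ranges overlap exactly when they agree on the bits of the shorter prefix. The function names are hypothetical:

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Split "a.b.c.d" on dots into $1..$4 (unquoted on purpose)
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Exit 0 if the two CIDR ranges overlap, 1 otherwise.
cidrs_overlap() {
  local a_ip="${1%/*}" a_len="${1#*/}" b_ip="${2%/*}" b_len="${2#*/}"
  local len mask a b
  # Compare both network addresses under the shorter (wider) prefix
  len=$(( a_len < b_len ? a_len : b_len ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  a=$(ip_to_int "$a_ip")
  b=$(ip_to_int "$b_ip")
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Usage:
# cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo "pod and service CIDRs overlap"
# cidrs_overlap 10.244.0.0/16 172.16.237.0/24 && echo "pod CIDR overlaps the VM network"
```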

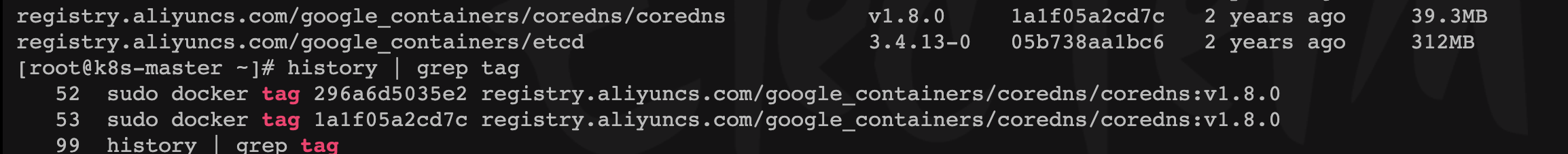

The coredns image may fail to pull (ImagePullBackOff / ErrImagePull).

Reference: k8s的 coredns 的ImagePullBackOff 和ErrImagePull 问题解决 (fixing ImagePullBackOff and ErrImagePull for the k8s coredns image), by 之诚 on CSDN.

# Run on each node where the coredns Pod is scheduled (images are stored per node)
docker pull coredns/coredns:1.8.0
docker tag [pulled image ID] registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
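Tagging by image ID means copying the ID by hand on every node; tagging by name does the same job. This sketch assumes the target name above is what kubeadm 1.21 expects; `kubeadm config images list --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.0` prints the exact names, so it is worth comparing against that. `retag_coredns` and the `DOCKER` override (set `DOCKER=echo` for a dry run) are illustrative:

```shell
# Pull CoreDNS from Docker Hub and tag it under the name kubeadm looks for.
# DOCKER defaults to the real docker CLI; DOCKER=echo only prints the commands.
retag_coredns() {
  local docker="${DOCKER:-docker}"
  local src="coredns/coredns:1.8.0"
  local dst="registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0"
  "$docker" pull "$src" && "$docker" tag "$src" "$dst"
}

# Usage, on every node that may run CoreDNS: retag_coredns
```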

Join the worker nodes to the master

14. Join the cluster

# Use the token and hash printed by your own kubeadm init
kubeadm join 172.16.237.134:6443 --token lndyz6.73fpex2iqyhmrnly \
    --discovery-token-ca-cert-hash sha256:07411b0de4320ce16918555f033cc42ce9aba398a6d1089ef4b442227e3b590b

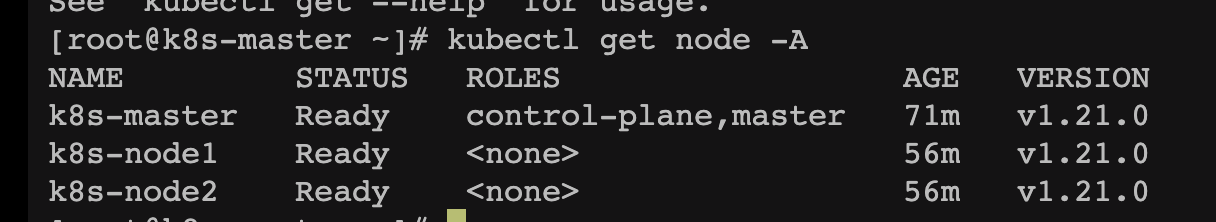

Check the node status on the master:

kubectl get nodes

Dashboard deployment

15. Run on the master:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

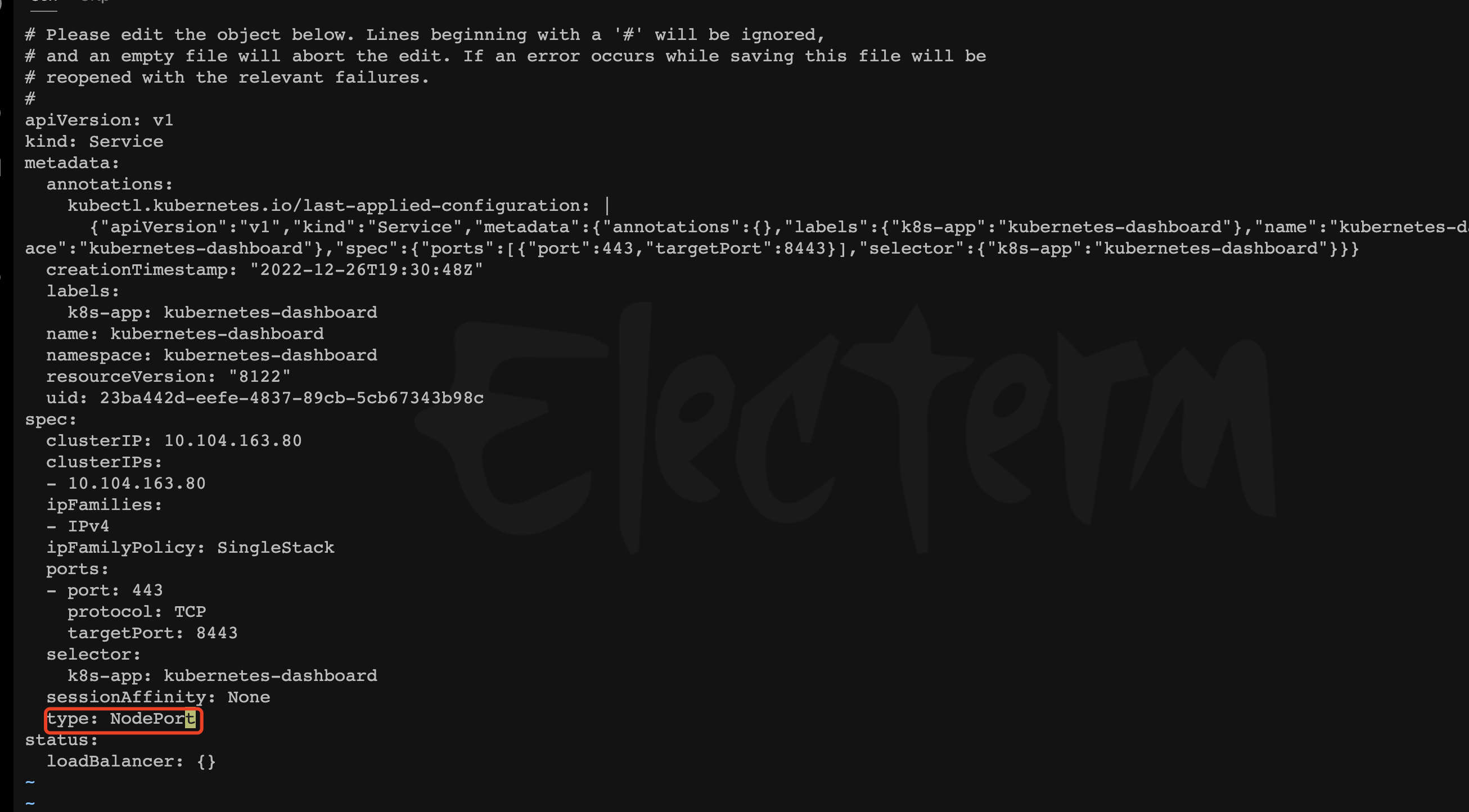

Change the Service type from ClusterIP to NodePort:

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
# change type: ClusterIP to type: NodePort
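`kubectl edit` opens an interactive editor; the same change can be scripted with `kubectl patch`, and the assigned port read back with a JSONPath query instead of grep. A sketch; `expose_dashboard` and the `KUBECTL` override (set `KUBECTL=echo` for a dry run) are hypothetical:

```shell
# Switch the dashboard Service to NodePort, then print the assigned node port.
expose_dashboard() {
  local k="${KUBECTL:-kubectl}"
  "$k" patch svc kubernetes-dashboard -n kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}' || return 1
  "$k" get svc kubernetes-dashboard -n kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}'
}

# Usage: port=$(expose_dashboard | tail -n1)
# then open https://<node IP>:$port in the browser
```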

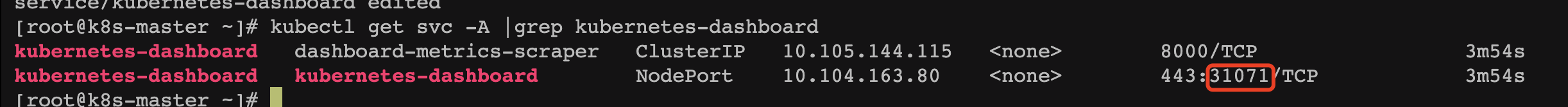

Check the assigned port:

kubectl get svc -A |grep kubernetes-dashboard

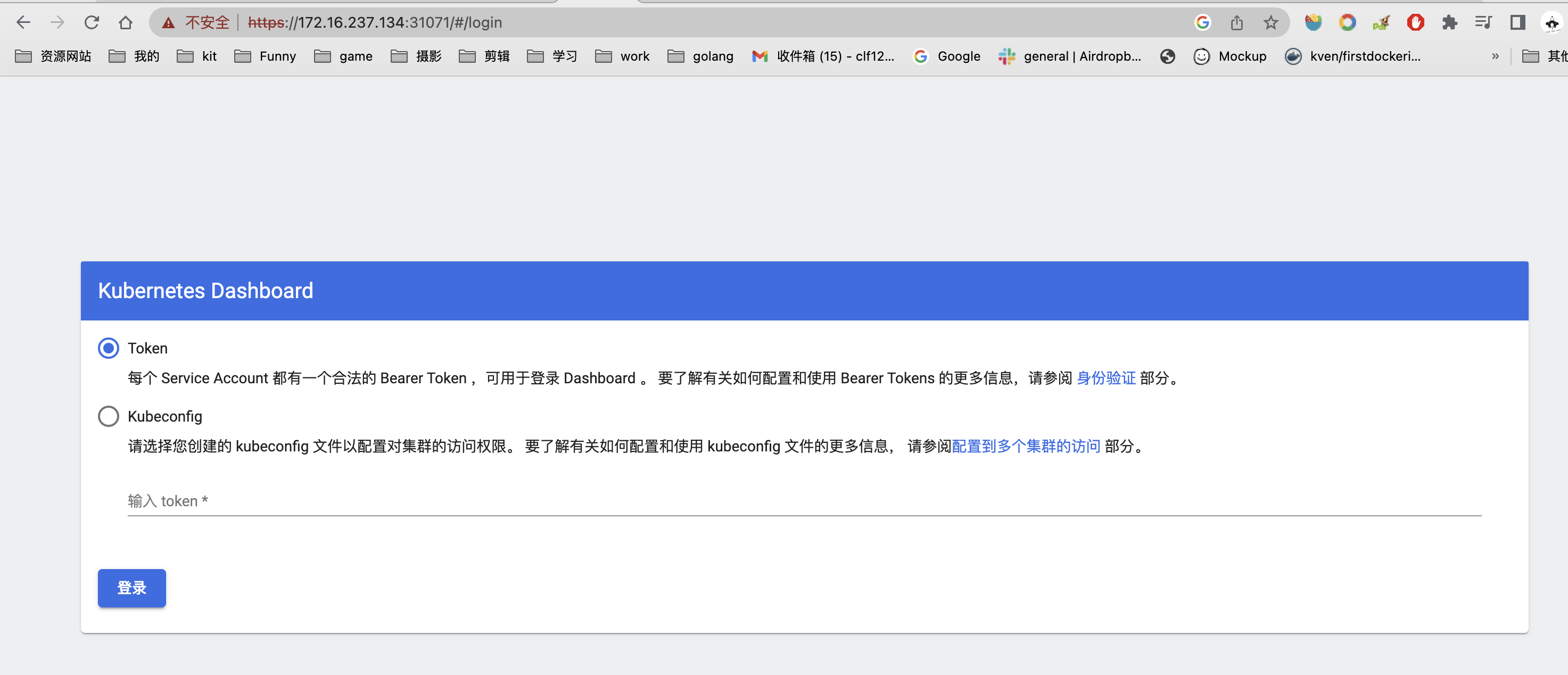

Access the dashboard at https://<node IP>:<NodePort>

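Chrome shows a warning for the dashboard's self-signed certificate; with the warning page focused, type thisisunsafe on the keyboard and the login page appears: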

Create an account to log in with:

# Create the access account: prepare a YAML file with vi dashaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

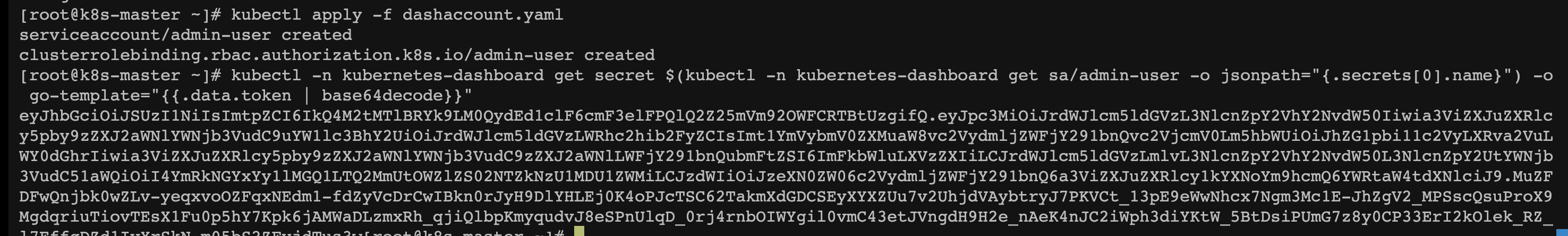

kubectl apply -f dashaccount.yaml

Get the token:

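# Get the access token (works on 1.21, where a token Secret is created for the service account automatically;
# from Kubernetes 1.24 on, use: kubectl -n kubernetes-dashboard create token admin-user)
kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"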

Save your token (example output):

eyJhbGciOiJSUzI1NiIsImtpZCI6IkQ4M2tMTlBRYk9LM0QydEd1clF6cmF3elFPQlQ2Z25mVm92OWFCRTBtUzgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWY0dGhrIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI4YmRkNGYxYy1lMGQ1LTQ2MmUtOWZlZS02NTZkNzU1MDU1ZWMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.MuZFDFwQnjbk0wZLv-yeqxvoOZFqxNEdm1-fdZyVcDrCwIBkn0rJyH9DlYHLEj0K4oPJcTSC62TakmXdGDCSEyXYXZUu7v2UhjdVAybtryJ7PKVCt_13pE9eWwNhcx7Ngm3Mc1E-JhZgV2_MPSscQsuProX9MgdqriuTiovTEsX1Fu0p5hY7Kpk6jAMWaDLzmxRh_qjiQlbpKmyqudvJ8eSPnUlqD_0rj4rnbOIWYgil0vmC43etJVngdH9H2e_nAeK4nJC2iWph3diYKtW_5BtDsiPUmG7z8y0CP33ErI2kOlek_RZ_l7EffgDZd1IvXrSkN-m05bS2ZFyjdTus3w
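A service-account token is a JWT, so its middle segment can be decoded to check which account it belongs to (the `sub` claim should be system:serviceaccount:kubernetes-dashboard:admin-user) before pasting it into the login page. A minimal sketch assuming GNU coreutils `base64`; `jwt_payload` is a hypothetical helper that only decodes and does not verify the signature:

```shell
# Print the decoded payload (middle segment) of a JWT.
jwt_payload() {
  local p
  # base64url -> base64: "_" becomes "/", "-" becomes "+"
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops "=" padding; restore it before decoding
  case $(( ${#p} % 4 )) in
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage: jwt_payload "$TOKEN"; echo
```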

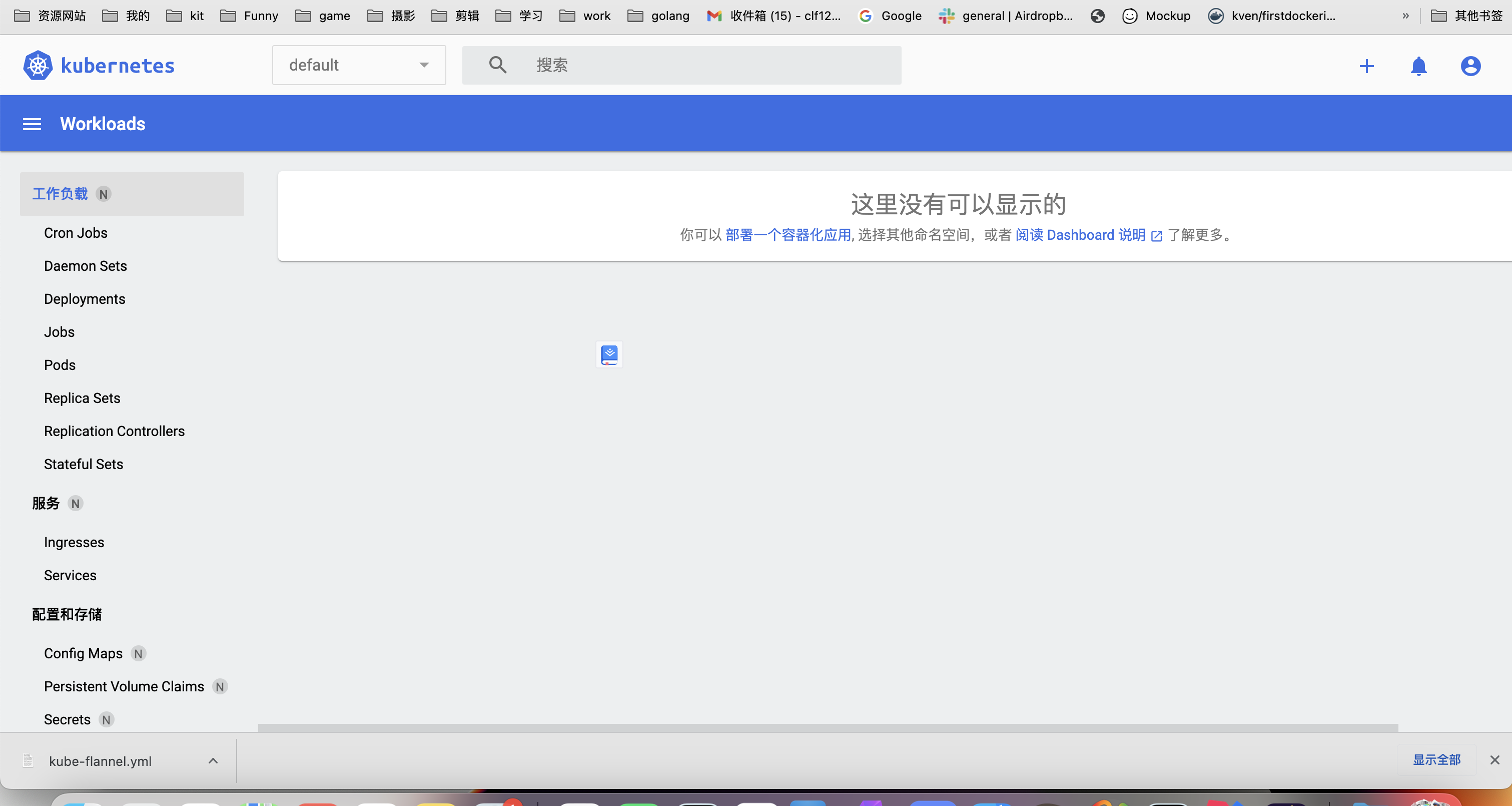

Enter the token to log in:

Common k8s commands

# List all nodes
kubectl get nodes
# Show node details (IPs, OS, runtime)
kubectl get nodes -o wide
# List Pods in the kube-system namespace
kubectl get pods -n kube-system
# Deploy resources
kubectl apply -f calico.yaml
# Delete what a manifest deployed
kubectl delete -f calico.yaml
# Force-delete a Pod (namespace kube-system, Pod name coredns-545d6fc579-s2j64)
kubectl delete pod coredns-545d6fc579-s2j64 -n kube-system --grace-period=0 --force
# List all namespaces (namespace can be abbreviated as ns)
kubectl get ns
# Create a namespace
kubectl create ns <namespace>
# Delete a namespace
kubectl delete ns <namespace>
# List Pods in the default namespace
kubectl get pods
# Watch Pods
kubectl get pod -w
# Watch Pods (refresh every second)
watch -n 1 kubectl get pods
# List Pods in all namespaces
kubectl get pods -A
# List Pods in a given namespace
kubectl get pods -n <namespace>
# Show more details for Pods in the default namespace
kubectl get pod -owide
# Show CPU and memory usage of all Pods (requires metrics-server)
kubectl top pod -A
# Describe a Pod (default namespace unless -n is given)
kubectl describe pod myk8snginx
kubectl describe pod -n ruoyi-cloud ry-cloud-mysql-0
# Show a Pod's logs
kubectl logs mynginx
# Open a shell / command inside a container
kubectl exec -it mynginx -- /bin/bash
kubectl exec -it redis -- redis-cli

Other configuration

Install oh-my-zsh

yum install zsh -y
yum install git -y
sh -c "$(curl -fsSL https://raw.githubusercontent.com/ohmyzsh/ohmyzsh/master/tools/install.sh)"
# Re-export the k8s config in the new shell
export KUBECONFIG=/etc/kubernetes/admin.conf
# or make it permanent: vim ~/.zshrc and add this as the last line
export KUBECONFIG=/etc/kubernetes/admin.conf
# then reload the file
source ~/.zshrc
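Repeating the `vim ~/.zshrc` step leaves duplicate exports. A small idempotent alternative; `ensure_line` is a hypothetical helper, and the optional `kubectl completion zsh` line enables tab completion for kubectl:

```shell
# Append LINE to FILE only if an identical line is not already there.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage:
# ensure_line ~/.zshrc 'export KUBECONFIG=/etc/kubernetes/admin.conf'
# ensure_line ~/.zshrc 'source <(kubectl completion zsh)'
# source ~/.zshrc
```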

IPVS configuration

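- In Kubernetes, Services have two main proxy modes: one based on iptables and one based on IPVS. IPVS performs better than iptables, but to use it you have to load the IPVS kernel modules manually.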

- Install ipset and ipvsadm on every node:

yum -y install ipset ipvsadm

- Run the following script on all nodes:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

- Make the script executable, run it, and check that the modules are loaded:

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

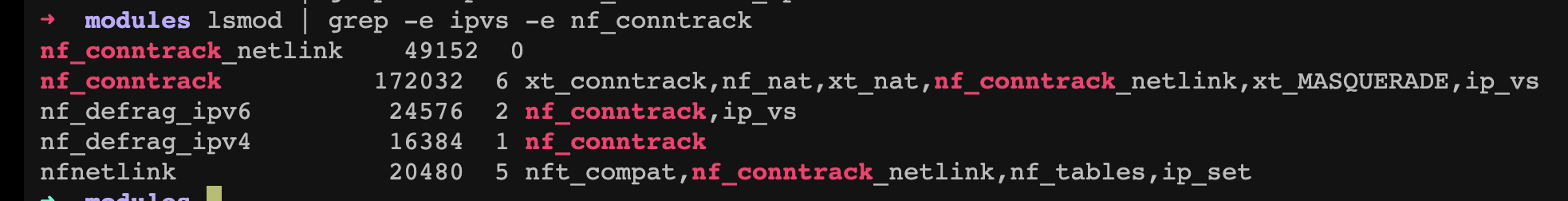

- Check that the modules are loaded:

lsmod | grep -e ip_vs -e nf_conntrack
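Loading the modules does not switch kube-proxy to IPVS by itself: on a kubeadm cluster kube-proxy reads its mode from the kube-proxy ConfigMap in kube-system, where an empty `mode` means iptables on Linux. A sketch of a filter that rewrites `mode: ""` to `mode: "ipvs"`, assuming the default kubeadm ConfigMap layout; `set_ipvs_mode` is a hypothetical name, and the kubectl pipeline in the comments is a suggested usage rather than a verified procedure:

```shell
# Read YAML on stdin and replace an empty kube-proxy mode with "ipvs".
# Any other explicit mode value is left unchanged.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*mode:)[[:space:]]*""[[:space:]]*$/\1 "ipvs"/'
}

# Usage on the master:
# kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode | kubectl apply -f -
# kubectl -n kube-system rollout restart daemonset kube-proxy
# ipvsadm -Ln   # should now list virtual servers for the Service IPs
```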

About the author: 风语者! Enjoys exploring all kinds of technology, currently works in backend development, and loves both life and work.
