Fixing a broken nvidia-smi

After the server rebooted, running a model failed with:

RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False. If you are running on a CPU-only machine, please use torch.load with map_location=torch.device('cpu') to map your storages to the CPU.

Next, I checked with nvidia-smi:

>> nvidia-smi

NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Clearly, the driver is no longer loaded.
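To confirm that it is the kernel module that is missing, a check along these lines could be used. It is a minimal sketch: `nvidia_module_loaded` is a hypothetical helper that looks for a module named exactly `nvidia` in `/proc/modules` (the same list `lsmod` prints). The optional path argument is only there so the check can be tried on a saved copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if a module named exactly "nvidia" is loaded.
# Reads a list in /proc/modules format; first field of each line is the name.
nvidia_module_loaded() {
  local modules=${1:-/proc/modules}
  # Exact match on field 1, so nvidia_uvm / nvidia_modeset alone do not count.
  # A missing or unreadable file also makes the check fail.
  awk '$1 == "nvidia" { found = 1 } END { exit !found }' "$modules" 2>/dev/null
}
```

For example, `nvidia_module_loaded || echo "nvidia module not loaded"`. On this server, a failing check would match the nvidia-smi message above.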

Then check CUDA:

>> nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Tue_May__3_18:49:52_PDT_2022

Cuda compilation tools, release 11.7, V11.7.64

Build cuda_11.7.r11.7/compiler.31294372_0

Then check whether the driver source is still present:

>> ls /usr/src | grep nvidia

nvidia-525.89.02

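Fortunately, both are still there. Most likely Ubuntu updated itself automatically across the reboot (presumably installing a new kernel that the NVIDIA module had not been built for), so this should be easy to fix by rebuilding the module with DKMS.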
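One way to test the auto-update theory is to ask, for each installed kernel, whether an `nvidia` module exists for it. The sketch below assumes Ubuntu's `/lib/modules/<release>` layout and that `modinfo` (from kmod) is installed; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if modinfo can find an "nvidia" module
# for the given kernel release (e.g. the output of `uname -r`).
nvidia_module_built_for() {
  modinfo -k "$1" nvidia >/dev/null 2>&1
}

# Hypothetical helper: prints "<release> nvidia:yes|no" for every kernel
# under /lib/modules and marks the one currently running.
report_nvidia_per_kernel() {
  local running d k mark
  running=$(uname -r)
  for d in /lib/modules/*/; do
    [ -d "$d" ] || continue          # glob did not match: no kernels found
    k=$(basename "$d")
    mark=""
    [ "$k" = "$running" ] && mark=" (running)"
    if nvidia_module_built_for "$k"; then
      echo "$k nvidia:yes$mark"
    else
      echo "$k nvidia:no$mark"
    fi
  done
}
```

If the running kernel is the only one reporting `nvidia:no`, a kernel update without a module rebuild is the likely explanation.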

>> sudo apt-get install dkms

>> sudo dkms install -m nvidia -v 525.89.02

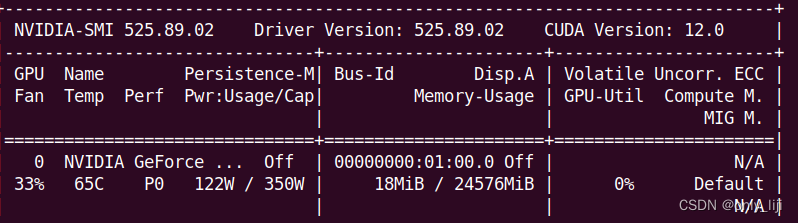
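The version passed to `dkms install` is the suffix of the `/usr/src/nvidia-<version>` directory found earlier. As a hedged sketch, that lookup and the rebuild could be scripted so the version is not retyped. The function names are hypothetical, and the rebuild deliberately refuses to run unless exactly one `nvidia-<version>` source tree is present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints each version found as <dir>/nvidia-<version>/
# (default dir: /usr/src). Only names whose suffix starts with a digit match.
nvidia_src_version() {
  local src=${1:-/usr/src} d
  for d in "$src"/nvidia-[0-9]*/; do
    [ -d "$d" ] || continue          # no match: the glob stayed literal
    d=$(basename "$d")
    echo "${d#nvidia-}"
  done
}

# Hypothetical helper: runs the same dkms command as above with the detected
# version. Refuses to guess when zero or several versions are present.
nvidia_dkms_reinstall() {
  local versions
  versions=$(nvidia_src_version)
  if [ -z "$versions" ] || [ "$(printf '%s\n' "$versions" | wc -l)" -ne 1 ]; then
    echo "expected exactly one nvidia-<version> in /usr/src, found: ${versions:-none}" >&2
    return 1
  fi
  sudo dkms install -m nvidia -v "$versions"
}
```

After sourcing the file, `nvidia_dkms_reinstall` runs `sudo dkms install -m nvidia -v 525.89.02` on this server. Requiring a digit after `nvidia-` keeps other DKMS trees whose names merely start with `nvidia-` from being picked up.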

Run nvidia-smi again, and the familiar output is back.
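Since the original symptom was a PyTorch error rather than nvidia-smi itself, a final check might cover both layers. This is a sketch that assumes `nvidia-smi` is on `PATH` and that the model's environment is reachable as `python3` with PyTorch installed; `verify_gpu_stack` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical post-fix check: driver reachable, then CUDA visible to PyTorch.
verify_gpu_stack() {
  # nvidia-smi exits non-zero when it cannot talk to the driver.
  if ! nvidia-smi --query-gpu=name,driver_version --format=csv,noheader; then
    echo "nvidia-smi still cannot reach the driver" >&2
    return 1
  fi
  # The same condition the RuntimeError complained about.
  if ! python3 -c 'import sys, torch; sys.exit(0 if torch.cuda.is_available() else 1)'; then
    echo "torch.cuda.is_available() is False (or torch is not importable)" >&2
    return 1
  fi
  echo "GPU stack OK"
}
```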
