
Migrating HBase Data Across Clusters Using the Underlying HDFS Files


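1. Overview

The customer is relocating their data center, so the cluster we deployed there also has to move its HBase tables to a new cluster. Here we migrate the tables by copying the underlying Hadoop (HDFS) data.

There are two ways to move the underlying Hadoop files:

- distcp

- get the files from the old cluster to local disk, then put them onto the new cluster.

Cluster A is a Kerberos environment and cluster B has no Kerberos, so we use DistCp here. Since the goal is only to move the underlying data, either method works.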
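For comparison, the second method could be sketched as below. This is only an illustration under assumptions: the local staging directory /tmp/hbase-migration and the function names are hypothetical, the HBase root directory /apps/hbase/data is taken from the commands later in this post, and the local disk must be large enough to hold the table.

```shell
# Sketch of the get/put alternative (not the method used in this post).
HBASE_ROOT="/apps/hbase/data"        # HBase root directory on HDFS
STAGING="/tmp/hbase-migration"       # hypothetical local staging directory

# Print the HDFS directory of a table in the default namespace.
table_dir() {
  printf '%s/data/default/%s\n' "$HBASE_ROOT" "$1"
}

# Run on a client of the old cluster (after kinit if it uses Kerberos).
export_table() {
  mkdir -p "$STAGING" &&
  hdfs dfs -get "$(table_dir "$1")" "$STAGING/"
}

# Run on a client of the new cluster once $STAGING has been moved there.
import_table() {
  hdfs dfs -put "$STAGING/$1" "$HBASE_ROOT/data/default/"
}
```

On the old cluster this would be called as export_table trafficLhbDevInOutData_2020; after moving the staging directory to a client of the new cluster, import_table trafficLhbDevInOutData_2020 uploads it.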

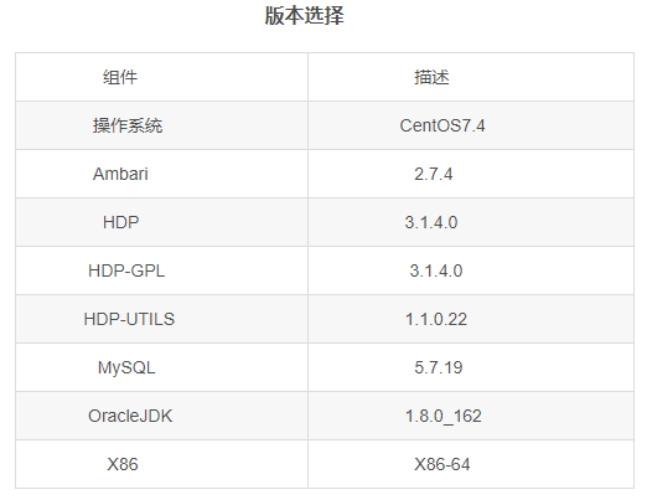

2. Environment

HBase 2.0.2

3. Downloading and building HBCK2

Download the source from GitHub:

GitHub - apache/hbase-operator-tools: Apache HBase Operator Tools (https://github.com/apache/hbase-operator-tools)

Build:

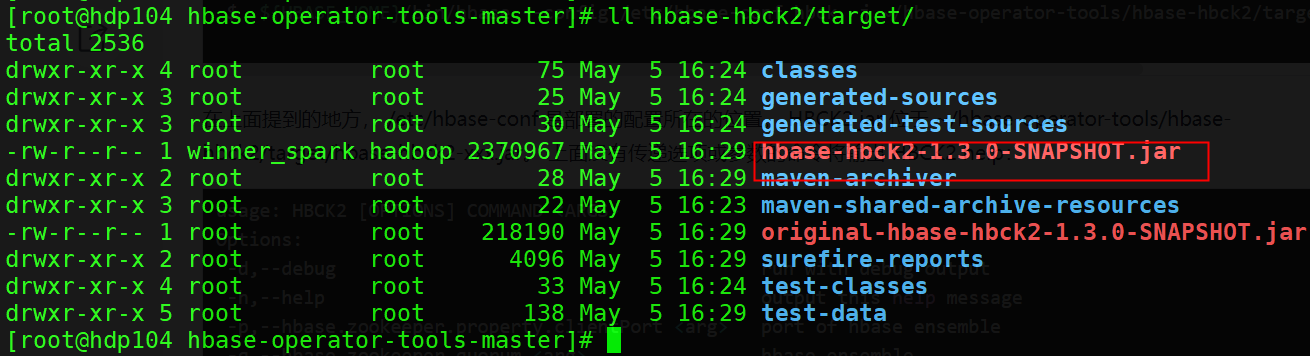

mvn clean install -DskipTests

The screenshot shows the hbck2 jar produced by my successful build.
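Put together, the download and build steps might look like the sketch below. It assumes git, Maven and a JDK are already installed on the build host. The function names are made up for this example, and the hbase-hbck2/target location is where the Maven build is expected to place the jar.

```shell
# Sketch: fetch and build hbase-operator-tools.
build_hbck2() {
  git clone https://github.com/apache/hbase-operator-tools.git &&
  (cd hbase-operator-tools && mvn clean install -DskipTests)
}

# Print the first HBCK2 jar found under a checkout directory ($1),
# skipping any sources or tests jars. Returns non-zero if none exists.
find_hbck2_jar() {
  for f in "$1"/hbase-hbck2/target/hbase-hbck2-*.jar; do
    case "$f" in
      *-sources.jar|*-tests.jar) continue ;;
    esac
    if [ -e "$f" ]; then
      printf '%s\n' "$f"
      return 0
    fi
  done
  return 1
}
```

After build_hbck2 finishes, find_hbck2_jar hbase-operator-tools prints the path of the jar to pass to hbck -j.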

Run the jar from the command line to see how each option is used:

sudo -u hbase hbase --config /etc/hbase/ hbck -j /home/jz/hbase-hbck2-1.3.0-SNAPSHOT.jar

usage: HBCK2 [OPTIONS] COMMAND <ARGS>

Options:

-d,--debug run with debug output

-h,--help output this help message

-p,--hbase.zookeeper.property.clientPort <arg> port of hbase ensemble

-q,--hbase.zookeeper.quorum <arg> hbase ensemble

-s,--skip skip hbase version check (PleaseHoldException)

-v,--version this hbck2 version

-z,--zookeeper.znode.parent <arg> parent znode of hbase ensemble

Command:

addFsRegionsMissingInMeta [<NAMESPACE|NAMESPACE:TABLENAME>...|-i <INPUTFILES>...]

Options:

-i,--inputFiles take one or more files of namespace or table names

To be used when regions missing from hbase:meta but directories are present still in HDFS. Can happen if user has run _hbck1_

(output truncated)

4. Procedure

There are two steps:

(1) Copy the table's underlying HDFS data.

(2) Use the hbck tool to restore the table.

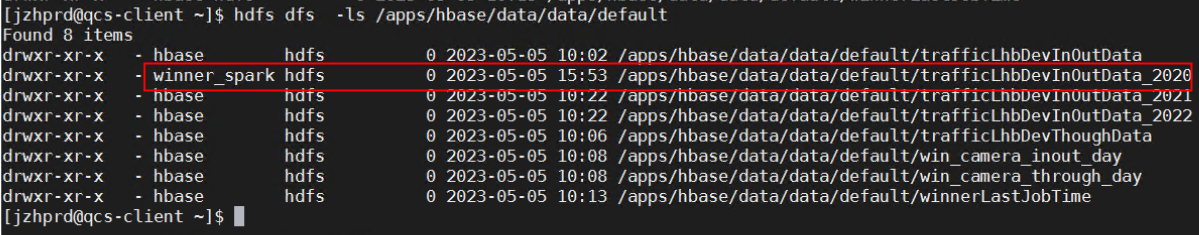

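4.1 Data synchronization

hadoop distcp -Dipc.client.fallback-to-simple-auth-allowed=true -Dmapreduce.map.memory.mb=1024 -D mapred.map.max.attempts=3 -m 3 -numListstatusThreads 3 hdfs://10.82.28.171:8020/apps/hbase/data/data/default/trafficLhbDevInOutData_2020 hdfs://10.82.50.191:8020/apps/hbase/data/data/default

The option -Dipc.client.fallback-to-simple-auth-allowed=true lets the client on the Kerberos cluster fall back to simple authentication when it talks to the cluster without Kerberos.

Then change the owner of the table directory: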

sudo -u hdfs hdfs dfs -chown -R hbase:hdfs /apps/hbase/data/data/default/trafficLhbDevInOutData_2020
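Before adding anything to hbase:meta, it may be worth confirming that the copy is complete. One possible check, sketched here, compares the directory count, file count and byte size that hdfs dfs -count reports for the table directory on each cluster. The helper names are made up for this example, and passing the same fallback option as for distcp is an assumption about where the check is run.

```shell
# hdfs dfs -count prints: DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME
# Keep only the three numeric columns so that paths on different
# clusters still compare equal.
count_summary() {
  awk '{ print $1, $2, $3 }'
}

# Succeeds when two "hdfs dfs -count" output lines describe the same
# number of directories, files and bytes.
same_content() {
  [ "$(printf '%s\n' "$1" | count_summary)" = "$(printf '%s\n' "$2" | count_summary)" ]
}

# Possible usage, run from the Kerberos cluster:
#   OPT=-Dipc.client.fallback-to-simple-auth-allowed=true
#   T=apps/hbase/data/data/default/trafficLhbDevInOutData_2020
#   src=$(hdfs dfs $OPT -count "hdfs://10.82.28.171:8020/$T")
#   dst=$(hdfs dfs $OPT -count "hdfs://10.82.50.191:8020/$T")
#   same_content "$src" "$dst" && echo "copy looks complete"
```

Matching counts do not prove the files are identical, but a mismatch is a quick sign that the copy should be repeated.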

4.2 Adding the metadata

hbase --config /usr/hdp/3.1.4.0-315/hbase/conf/ hbck -j /home/jz/hbase-hbck2-1.3.0-SNAPSHOT.jar -z /hbase-unsecure addFsRegionsMissingInMeta default:trafficLhbDevInOutData_2020

## Run

[xxxprd@qcs-client ~]$ hbase --config /usr/hdp/3.1.4.0-315/hbase/conf/ hbck -j /home/jz/hbase-hbck2-1.3.0-SNAPSHOT.jar -z /hbase-unsecure addFsRegionsMissingInMeta default:trafficLhbDevInOutData_2020

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/jzhprd/hbase-hbck2-1.3.0-SNAPSHOT.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/phoenix/phoenix-5.0.0.3.1.4.0-315-server.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

18:16:30.311 [main] INFO org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient - Connect 0x74f7d1d2 to qcs-namenode:2181,qcs-client:2181,qcs-snamenode:2181 with session timeout=90000ms, retries 6, retry interval 1000ms, keepAlive=60000ms

18:16:31.284 [main] INFO org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient - Close zookeeper connection 0x74f7d1d2 to qcs-namenode:2181,qcs-client:2181,qcs-snamenode:2181

Regions re-added into Meta: 6

WARNING:

6 regions were added to META, but these are not yet on Masters cache.

You need to restart Masters, then run hbck2 'assigns' command below:

assigns 034307e7c4ca3f0f65c806077d4bb123 3302faa35da3877220c1b49589208b89 3fcd8d4755d9ddd2d1a7af7e309dd049 4c306f12b329a78928e1439b48e257f7 56213be6765d63585ed4f3c1c9d48f9f 887bbf63e7f905bc84ba1bcd4fd8681c

[xxxprd@qcs-client ~]$

As the warning says ("You need to restart Masters, then run hbck2 'assigns' command below"), restart the HMaster first.

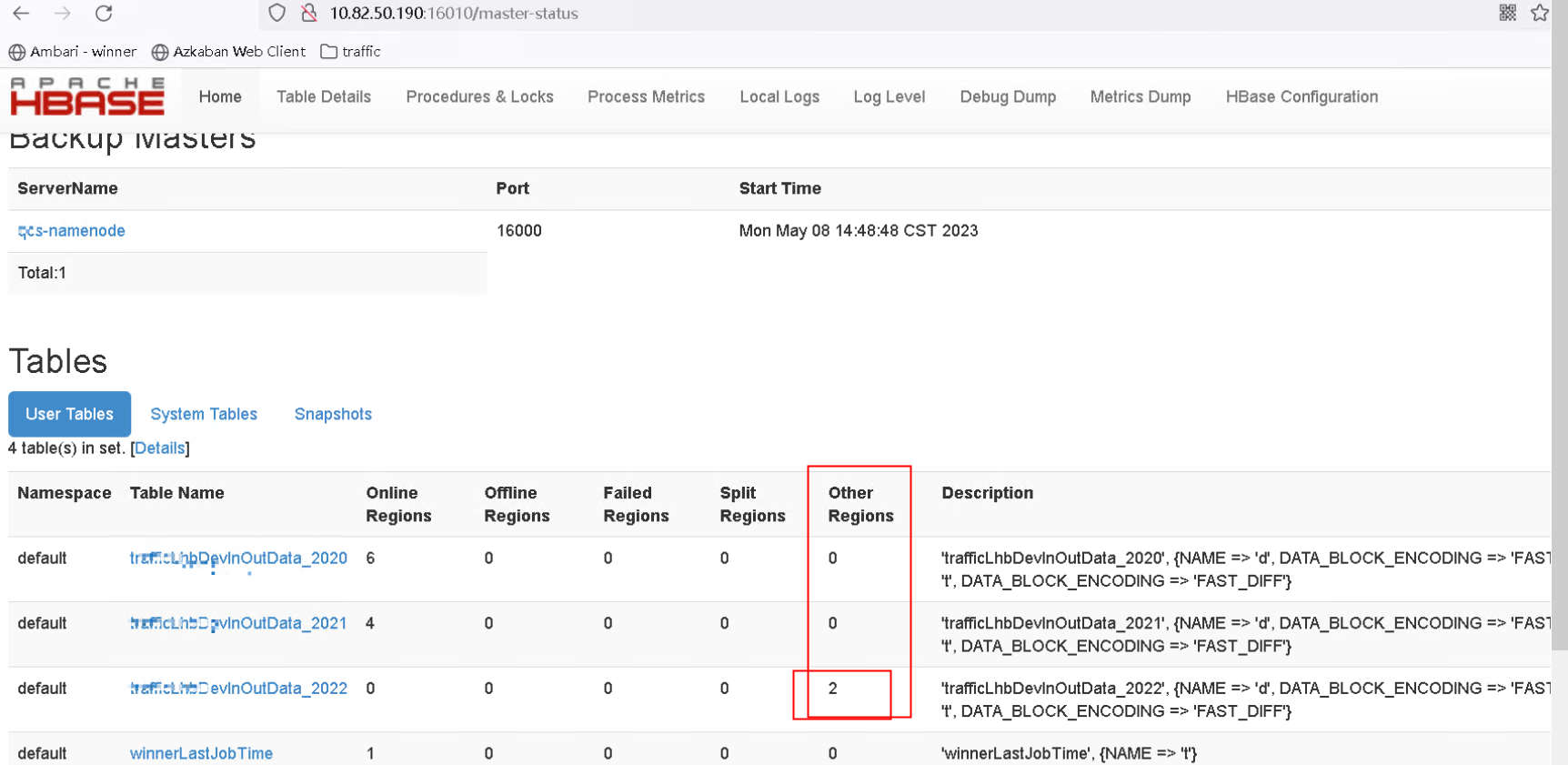

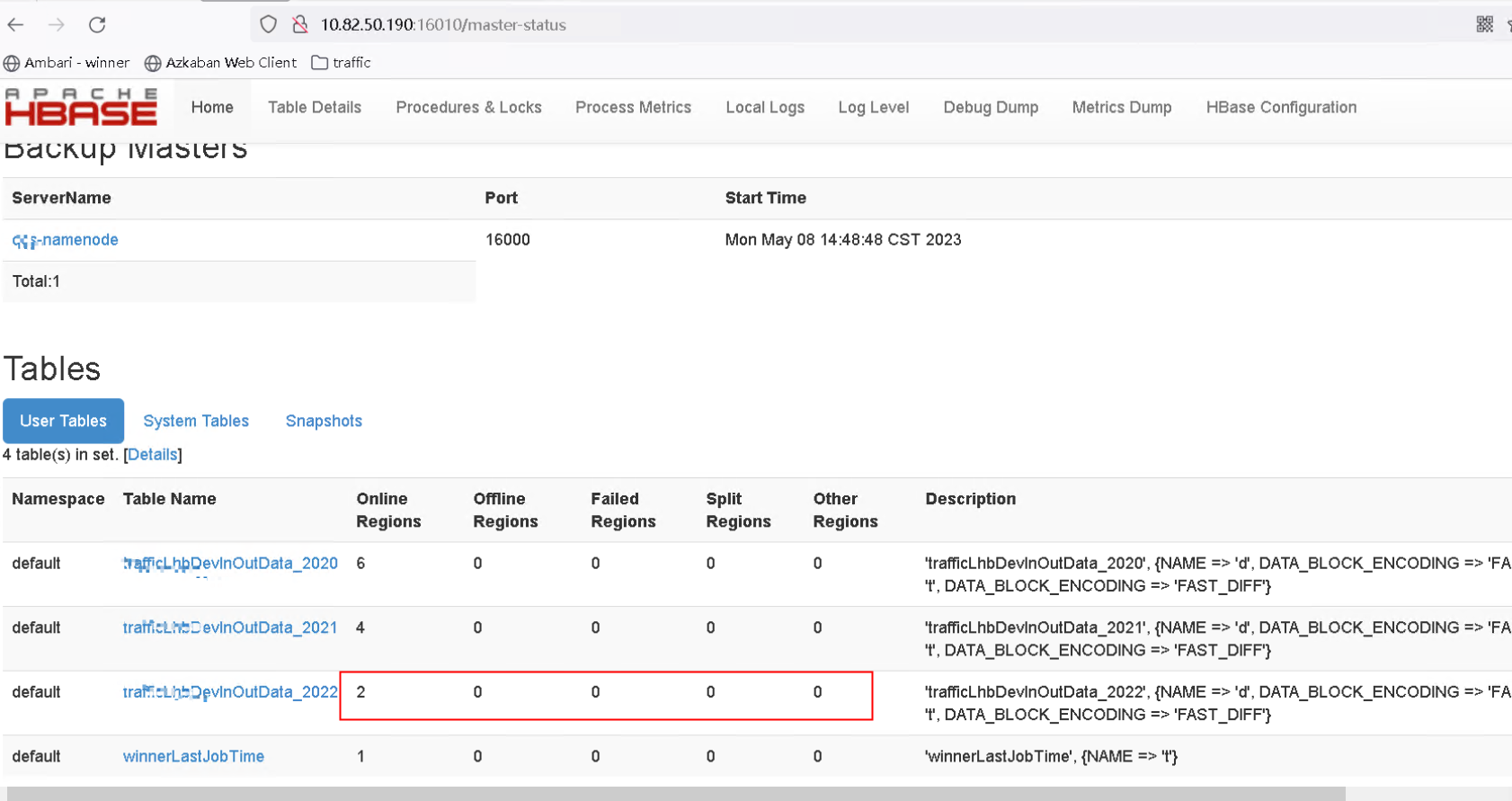

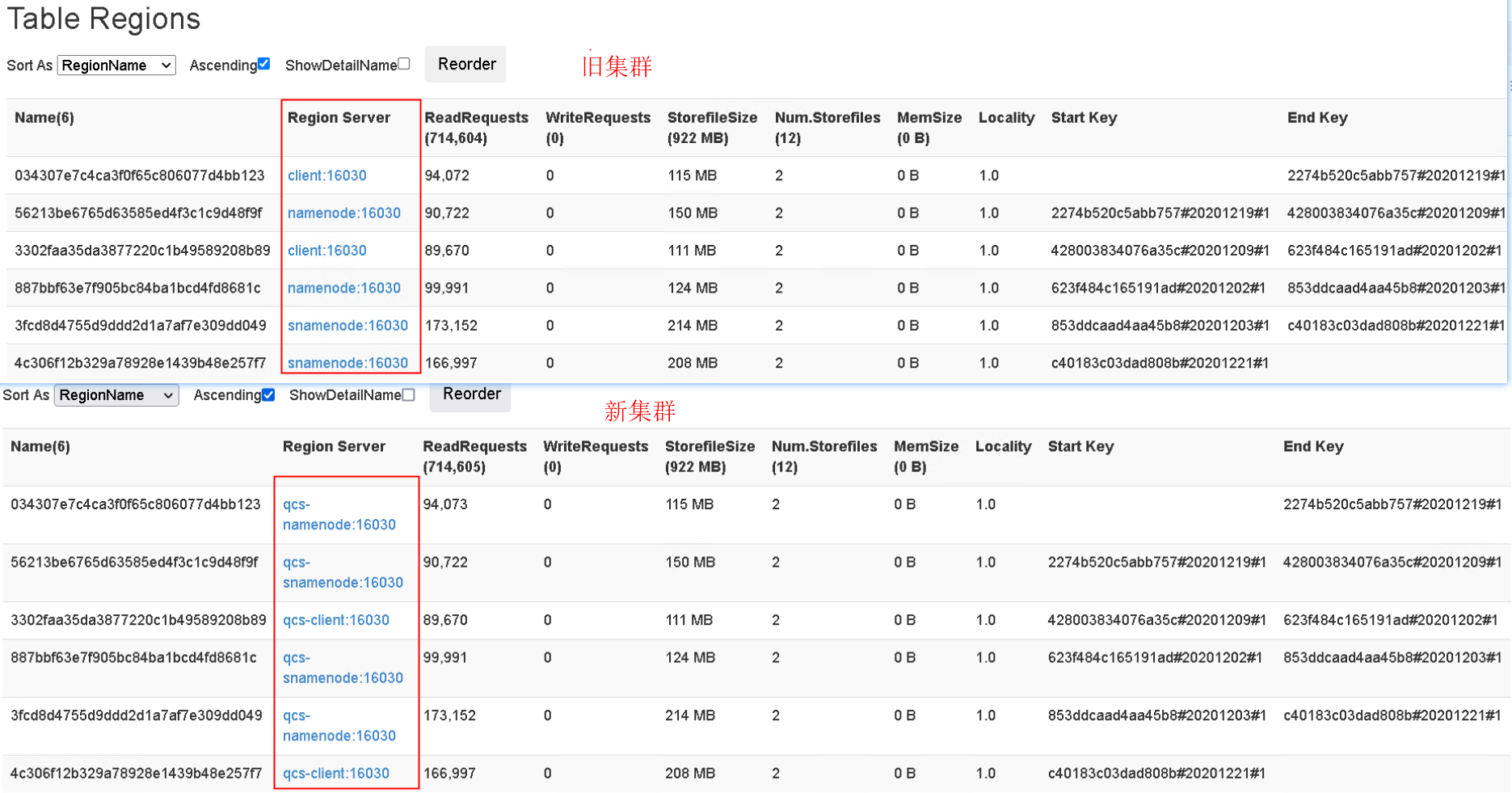

In the HMaster web UI, the table's 2 regions are listed with the state Other Regions.
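Rather than copying the region names by hand, the assigns line can be pulled out of the saved output of addFsRegionsMissingInMeta. The sketch below assumes that output was saved to a file; the file name add-regions.log and the function name are hypothetical.

```shell
# Read addFsRegionsMissingInMeta output on stdin and print the encoded
# region names from the first line that starts with "assigns".
extract_regions() {
  sed -n 's/^[[:space:]]*assigns[[:space:]]\{1,\}//p' | head -n 1
}

# Possible usage:
#   hbase ... hbck -j ... addFsRegionsMissingInMeta default:trafficLhbDevInOutData_2020 | tee add-regions.log
#   (restart the Masters)
#   hbase ... hbck -j ... assigns $(extract_regions < add-regions.log)
```

The sentence "then run hbck2 'assigns' command below" in the warning does not match, because only lines that begin with the word assigns are printed.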

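4.3 Reassigning the regions

hbase --config /usr/hdp/3.1.4.0-315/hbase/ hbck -j /home/jz/hbase-hbck2-1.3.0-SNAPSHOT.jar -z /hbase-unsecure assigns 063844973716717e1ddf6a705dd02907 afad0ca9c4587a3a26a778f2b79929d4

Pass the encoded region names reported by addFsRegionsMissingInMeta; the two names in this example differ from the six in the sample output above. Running the command returns the procedure IDs of the assignments; a return value of -1 means the assignment did not succeed.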

[jz@qcs-client ~]$ hbase --config /usr/hdp/3.1.4.0-315/hbase/ hbck -j /home/jz/hbase-hbck2-1.3.0-SNAPSHOT.jar -z /hbase-unsecure assigns 063844973716717e1ddf6a705dd02907 afad0ca9c4587a3a26a778f2b79929d4

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/jzhprd/hbase-hbck2-1.3.0-SNAPSHOT.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/phoenix/phoenix-5.0.0.3.1.4.0-315-server.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.1.4.0-315/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

17:58:15.614 [main] INFO org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient - Connect 0x3fc79729 to localhost:2181 with session timeout=90000ms, retries 30, retry interval 1000ms, keepAlive=60000ms

[114, 115]

17:58:16.450 [main] INFO org.apache.hadoop.hbase.zookeeper.ReadOnlyZKClient - Close zookeeper connection 0x3fc79729 to localhost:2181

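[jz@qcs-client ~]$

After the command above completes, the state of the 2 regions changes from Other Regions to Online Regions.

The table trafficLhbDevInOutData_2020 has the same number of regions before and after the migration.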
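When the assigns step is scripted, the returned ID list can be checked for -1 automatically. This is a small sketch under the assumption that the list is printed in the form shown in the log above, for example [114, 115]; the function name is made up.

```shell
# $1: the ID list printed by hbck2 assigns, e.g. "[114, 115]".
# Succeeds only if the list is non-empty and contains no -1 entry.
pids_ok() {
  list=$(printf '%s' "$1" | tr -d '[] ')
  [ -n "$list" ] || return 1
  case ",$list," in
    *,-1,*) return 1 ;;
    *) return 0 ;;
  esac
}

# Possible usage:
#   pids_ok "[114, 115]" && echo "all regions were scheduled for assignment"
```

A successful check only means the assignments were submitted; the HMaster web UI remains the place to confirm that the regions are online.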

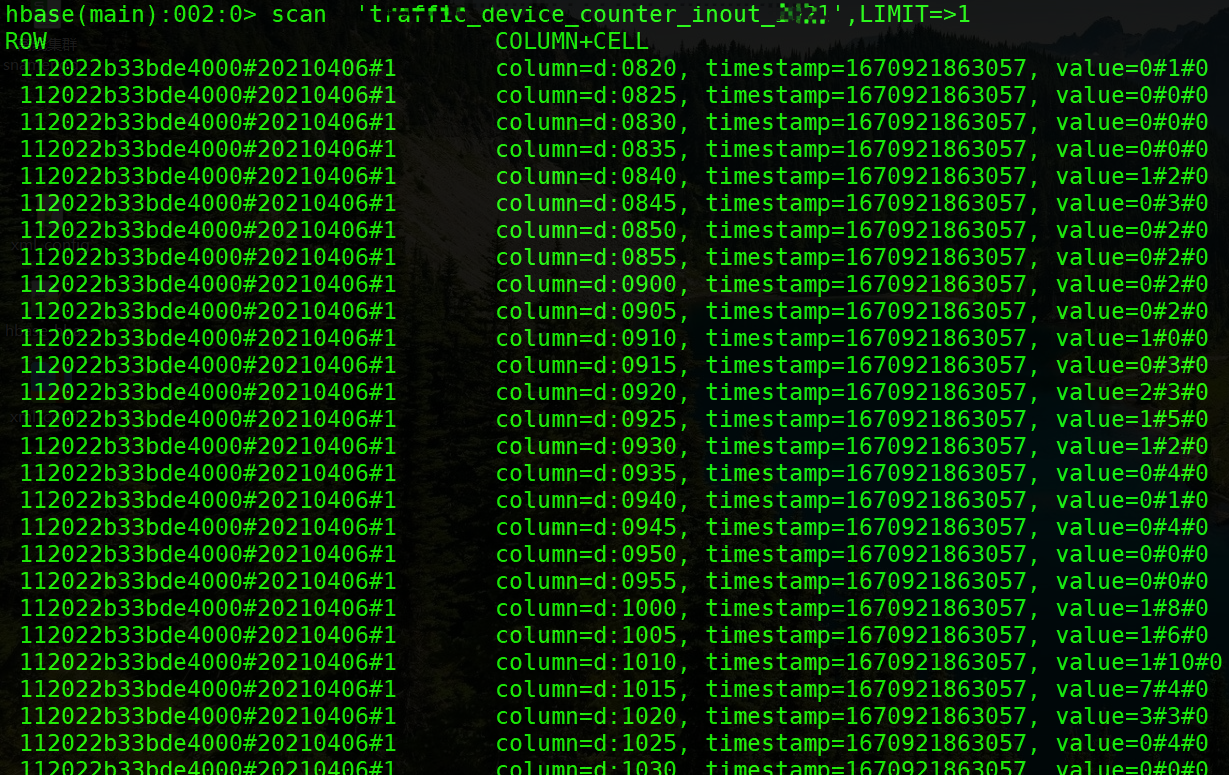

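Finally, query the table to check the data.

For a cluster with Kerberos authentication, it is enough to add the cluster's XML configuration files to the jar.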
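One way to do that is with the JDK's jar tool, as in the sketch below. Which files are needed is an assumption here (core-site.xml, hdfs-site.xml and hbase-site.xml), as is the configuration directory passed in; the function name is made up for this example.

```shell
# Add the cluster's client configuration files to the root of a jar.
# $1: path to the HBCK2 jar, $2: directory holding the XML files.
add_conf_to_jar() {
  jar_file=$1
  conf_dir=$2
  for name in core-site.xml hdfs-site.xml hbase-site.xml; do
    # "jar uf" updates an existing archive; -C changes into conf_dir
    # so the file is stored without its directory prefix.
    jar uf "$jar_file" -C "$conf_dir" "$name" || return 1
  done
}

# Possible usage (the configuration path is an assumption):
#   add_conf_to_jar /home/jz/hbase-hbck2-1.3.0-SNAPSHOT.jar /etc/hbase/conf
```

A valid Kerberos ticket (kinit) for a user allowed to administer HBase would still be needed before running hbck2.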

